ISO/IEC 42001 is an international standard that specifies requirements for establishing, implementing, maintaining, and continually improving an Artificial Intelligence Management System (AIMS) within organizations. For enterprise sellers deploying AI-powered solutions, whether generative AI platforms, machine learning models, or automated decision systems, this standard marks a fundamental shift from aspirational ethics statements to auditable governance frameworks. It helps organizations identify and treat AI-related risks such as bias, security vulnerabilities, and data privacy issues.

Many organizations implementing AI treat compliance as an afterthought rather than an operational discipline. This creates a gap between demonstrating AI capability and proving responsible AI governance, and the gap becomes apparent when enterprise buyers conduct vendor security reviews, when regulators demand transparency into algorithmic decision-making, or when incidents expose inadequate controls over model behavior. Published in December 2023, ISO/IEC 42001 provides a structured way to close this gap. This glossary entry explains how ISO/IEC 42001 functions as a management system standard, how it relates to data protection frameworks, and why enterprise organizations with AI-powered offerings need this level of structured governance.

What Is ISO/IEC 42001?

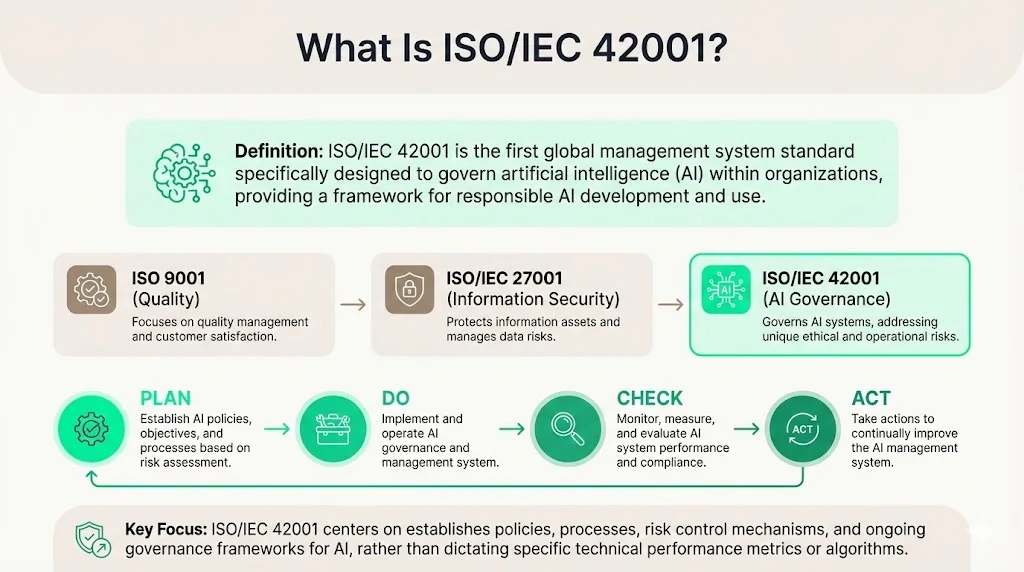

An AI management system, as specified in ISO/IEC 42001, is a set of interrelated or interacting elements of an organization intended to establish policies and objectives, as well as processes to achieve those objectives, in relation to the responsible development, provision or use of AI systems. Unlike technical standards that address specific AI capabilities or performance metrics, ISO/IEC 42001 provides a management system framework comparable to ISO 9001 for quality management or ISO/IEC 27001 for information security management.

ISO/IEC 42001 is a management system standard (MSS). Implementing this standard means putting in place policies and procedures for the sound governance of an organization in relation to AI, using the Plan-Do-Check-Act methodology. This approach ensures that AI governance becomes an operational discipline embedded throughout the organization rather than a project-based initiative conducted annually before audits. Rather than looking at the details of specific AI applications, it provides a practical way of managing AI-related risks and opportunities across an organization.

Where ISO/IEC 42001 Fits in the Compliance Universe

ISO/IEC 42001 occupies a distinct position within the broader compliance landscape. ISO/IEC 27001 addresses information security management, ensuring the confidentiality, integrity, and availability of data and systems, but it does not comprehensively address AI-specific risks such as model drift, algorithmic bias, or explainability requirements. ISO/IEC 42001 fills that gap: its key requirements include AI risk management, AI system impact assessment, AI system lifecycle management, and third-party supplier oversight.

Organizations operating under ISO/IEC 27001 certification will find ISO/IEC 42001 complementary rather than duplicative. The information security controls established under 27001 provide foundational protections for AI systems, while 42001 extends governance to the operational, ethical, and regulatory challenges introduced by machine learning models and automated decision-making systems. Because ISO/IEC 42001 integrates with existing security and compliance frameworks, organizations can build their AI compliance strategy on top of governance structures they already operate.

ISO/IEC 42001 also aligns with emerging AI-specific regulatory frameworks. The EU AI Act, for example, establishes risk-based requirements for AI systems operating within European markets. Implementing ISO/IEC 42001 gives organizations the governance infrastructure, documentation practices, and risk management processes needed to demonstrate alignment with such requirements. Certification does not by itself establish compliance with any particular law, but a single management system can serve several jurisdictions and reduces the need for a separate compliance program in each.

Key Components of ISO/IEC 42001

1) Governance and Leadership

Top management must exhibit leadership, integrate AI requirements with business processes and promote a culture that supports responsible AI usage. This requirement moves AI governance from technical teams into executive accountability. Organizations must establish clear roles defining who owns AI risk decisions, who approves model deployments, and who maintains oversight of AI system performance throughout operational lifecycles.

ISO/IEC 42001 requires an organization to have leadership support and sufficient resources for the AI management system to operate effectively over time. AI governance therefore cannot function as an underfunded side responsibility: it requires dedicated resources, defined authority, and integration with existing risk management and compliance functions. For enterprise sellers, this typically translates into a steering committee with representation from legal, security, engineering, and business leadership, so that AI deployment decisions account for technical capability, regulatory exposure, and customer expectations.

2) Risk Management Frameworks

ISO/IEC 42001 provides an integrated approach to managing AI projects, from risk assessment to effective treatment of these risks. Risk management under this standard extends beyond traditional information security threats to encompass AI-specific concerns: training data quality and provenance, model performance degradation over time, unintended bias in automated decisions, explainability requirements for regulated use cases, and third-party AI component dependencies.

Using the AI system lifecycle stages defined in ISO/IEC 22989:2022, organizations can map AI risks identified during threat modeling to the corresponding ISO/IEC 42001:2023 clauses and Annex A controls. This mapping aligns AI development and governance efforts with a standards-based risk framework. Organizations implementing ISO/IEC 42001 conduct risk assessments at each lifecycle stage, from model inception and data collection through deployment, monitoring, and eventual decommissioning, so that risk treatment remains proportionate to system criticality and regulatory exposure.
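The stage-by-stage mapping described above can be kept in a simple risk register. The Python sketch below is illustrative only: the `RiskEntry` class, the stage names (taken from this article's wording rather than the exact ISO/IEC 22989 terms), and control references such as `CTRL-DATA-01` are hypothetical placeholders. It flags lifecycle stages that have no assessed risk yet and risks that are not yet mapped to any control.

```python
from dataclasses import dataclass, field

# Hypothetical stage names taken from this article's wording; a real register
# should use the lifecycle stage names defined in ISO/IEC 22989.
LIFECYCLE_STAGES = (
    "inception",
    "data_collection",
    "development",
    "deployment",
    "monitoring",
    "decommissioning",
)


@dataclass
class RiskEntry:
    """One row of a hypothetical AI risk register."""
    risk_id: str
    description: str
    stage: str
    # Hypothetical references to the clauses or Annex A controls that treat the risk.
    control_refs: list[str] = field(default_factory=list)

    def __post_init__(self) -> None:
        # Reject stages that are not part of the agreed lifecycle model.
        if self.stage not in LIFECYCLE_STAGES:
            raise ValueError(f"unknown lifecycle stage: {self.stage}")


def stages_without_assessment(register: list[RiskEntry]) -> list[str]:
    """Return lifecycle stages that have no recorded risk entry yet."""
    covered = {entry.stage for entry in register}
    return [stage for stage in LIFECYCLE_STAGES if stage not in covered]


def unmapped_risks(register: list[RiskEntry]) -> list[str]:
    """Return IDs of risks that are not yet mapped to any control."""
    return [entry.risk_id for entry in register if not entry.control_refs]


register = [
    RiskEntry("R1", "Training data provenance unknown", "data_collection",
              ["CTRL-DATA-01"]),
    RiskEntry("R2", "Performance drift after release", "monitoring"),
]
print(stages_without_assessment(register))
print(unmapped_risks(register))
```

Validating the stage in `__post_init__` keeps the register from silently accumulating entries under ad hoc stage labels that no lifecycle review would pick up.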

3) Impact Assessments

The standard mandates conducting AI risk assessments and impact evaluations. AI Impact Assessments (AIIAs) function analogously to Data Protection Impact Assessments (DPIAs) required under GDPR—they identify potential adverse effects of AI system deployment on individuals, organizations, or society before systems reach production environments. These assessments evaluate privacy implications when AI processes personal data, fairness concerns when models make consequential decisions affecting individuals, and transparency requirements when stakeholders need to understand automated decision logic.

ISO/IEC 42001 requires risk and impact assessments to be performed at planned intervals and whenever significant changes are proposed or occur. A common practice is to reassess existing systems at least annually and to complete an AIIA and threat model before any new AI function is deployed. For enterprise sellers, this means establishing processes that block model deployments lacking a documented impact assessment, a control that becomes essential when responding to customer security questionnaires or demonstrating due diligence during regulatory inquiries.
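A deployment gate of this kind can be sketched as a pre-release check. In this hypothetical Python example, `ImpactAssessment`, `deployment_blockers`, and the one-year `REVIEW_INTERVAL` are assumptions chosen for illustration; the standard itself leaves the interval to the organization's own planning.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Optional

# Hypothetical policy choice: ISO/IEC 42001 asks for "planned intervals";
# this sketch assumes the organization has set that interval to one year.
REVIEW_INTERVAL = timedelta(days=365)


@dataclass
class ImpactAssessment:
    """Hypothetical record of a completed AI impact assessment (AIIA)."""
    system_id: str
    completed_on: date
    approved_by: Optional[str] = None  # None until an accountable owner signs off


def deployment_blockers(system_id: str,
                        assessment: Optional[ImpactAssessment],
                        today: date) -> list[str]:
    """Return reasons to block deployment; an empty list means the gate passes."""
    if assessment is None:
        return [f"{system_id}: no documented impact assessment"]
    reasons = []
    if assessment.system_id != system_id:
        reasons.append(f"{system_id}: assessment is for {assessment.system_id}")
    if assessment.approved_by is None:
        reasons.append(f"{system_id}: assessment not approved")
    if today - assessment.completed_on > REVIEW_INTERVAL:
        reasons.append(f"{system_id}: assessment older than review interval")
    return reasons


today = date(2025, 6, 1)
print(deployment_blockers("credit-model", None, today))
fresh = ImpactAssessment("credit-model", date(2025, 1, 15), approved_by="model-owner")
print(deployment_blockers("credit-model", fresh, today))
```

A release pipeline could call `deployment_blockers` and fail whenever the returned list is non-empty; returning reasons rather than a bare boolean leaves an auditable record of why a deployment was stopped.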

4) Documentation and Audit Readiness

The standard specifies a range of policy requirements, including an overarching AI policy, guidelines for the use of AI in products, and acceptable-use rules, among others. In practice, the documentation maintained under ISO/IEC 42001 typically includes AI system inventories identifying all models in development and production, data lineage records tracking training data sources and preprocessing decisions, model cards documenting intended use cases and known limitations, change logs recording model updates and performance monitoring results, and incident response records detailing AI system failures and remediation actions.
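One way to keep these artifacts audit-ready is to store them as structured records and check for gaps automatically. The sketch below assumes a hypothetical `ModelRecord` that combines a subset of the inventory and model-card fields listed above; the field names are illustrative and are not taken from the standard.

```python
from dataclasses import dataclass, field, fields


@dataclass
class ModelRecord:
    """Hypothetical inventory entry combining a subset of model-card fields."""
    system_id: str
    owner: str = ""
    intended_use: str = ""
    known_limitations: str = ""
    training_data_sources: list[str] = field(default_factory=list)
    change_log: list[str] = field(default_factory=list)


def missing_documentation(record: ModelRecord) -> list[str]:
    """Names of fields that are still empty, i.e. gaps an auditor would flag."""
    return [f.name for f in fields(record) if not getattr(record, f.name)]


record = ModelRecord("churn-model", owner="data-science",
                     intended_use="Predict customer churn for retention offers")
print(missing_documentation(record))
```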

This documentation serves multiple functions: it provides audit evidence demonstrating control implementation, supports incident investigation when AI systems behave unexpectedly, enables knowledge transfer when personnel change, and supplies the transparency artifacts increasingly required by enterprise buyers and regulators. Organizations treating documentation as compliance theater—generating artifacts to satisfy auditors without implementing actual controls—face exposure when incidents reveal the gap between documented policies and operational reality.

How ISO/IEC 42001 Supports Data Protection Standards

1) Alignment with Privacy Laws and Secure Handling

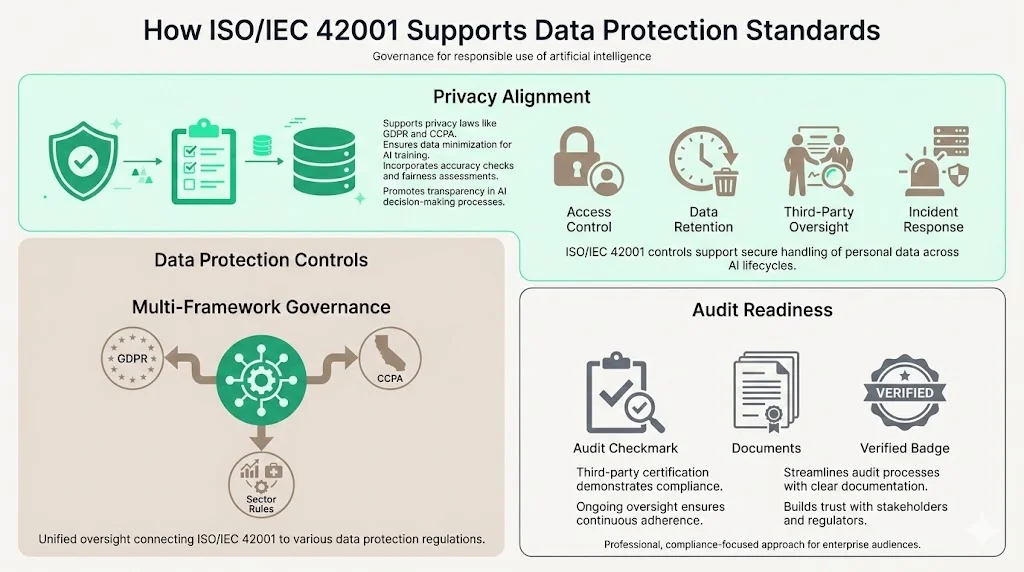

ISO/IEC 42001 reinforces core data protection principles established under privacy laws like GDPR, CCPA, and equivalent frameworks. Data minimization—collecting only data necessary for specified AI purposes—becomes operationalized through documented data collection policies and regular reviews of training datasets. Accuracy requirements translate to data quality controls, validation procedures, and monitoring for dataset drift that could degrade model performance or introduce bias.

Transparency obligations under privacy laws require organizations to explain automated decision-making logic to affected individuals. ISO/IEC 42001 is designed to help organizations develop and operate AI systems ethically, transparently, and in alignment with regulatory requirements. Organizations implementing it establish documentation practices that enable meaningful explanations, rather than generic statements about "using AI", when individuals exercise their rights to understand decisions affecting them.

2) Controls That Intersect with Data Protection

Annex A of ISO/IEC 42001 lists 38 controls, which an organization must evaluate for applicability and either implement or justify excluding in its Statement of Applicability. Several of these controls, together with the information security controls an organization already operates, support data protection obligations. Access control requirements ensure that only authorized personnel can access training data containing personal information. Data lifecycle management controls govern retention periods, ensuring AI systems do not retain personal data longer than necessary for specified purposes. Third-party management controls address data processor relationships when organizations use external AI services or share data with model training vendors.
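Retention controls are easier to evidence when every training dataset carries its collection date and retention period. This hypothetical sketch (the `DatasetRecord` fields and the per-dataset `retention_days` value are assumptions) lists datasets containing personal data that are past their retention period and therefore due for deletion or review.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class DatasetRecord:
    """Hypothetical metadata kept for each training dataset."""
    dataset_id: str
    contains_personal_data: bool
    collected_on: date
    retention_days: int  # retention period set for the dataset's stated purpose


def overdue_for_deletion(datasets: list[DatasetRecord], today: date) -> list[str]:
    """IDs of personal-data datasets held beyond their retention period."""
    return [
        d.dataset_id
        for d in datasets
        if d.contains_personal_data
        and today > d.collected_on + timedelta(days=d.retention_days)
    ]


datasets = [
    DatasetRecord("support-tickets-2023", True, date(2023, 1, 1), 365),
    DatasetRecord("support-tickets-2025", True, date(2025, 1, 1), 365),
    DatasetRecord("synthetic-benchmarks", False, date(2020, 1, 1), 30),
]
print(overdue_for_deletion(datasets, date(2025, 6, 1)))
```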

Incident response controls establish procedures for detecting and responding to data breaches involving AI systems—whether unauthorized access to training data, model extraction attacks, or privacy violations through unintended data exposure in model outputs. For organizations subject to breach notification requirements under GDPR, CCPA, or sector-specific regulations, these controls provide the detection and response capabilities necessary to meet notification timeframes.

3) Integrating with GDPR, CCPA, and Other Frameworks

Organizations operating across multiple jurisdictions face overlapping but not identical privacy requirements. GDPR emphasizes lawful basis for processing and data subject rights. CCPA focuses on consumer disclosure and opt-out rights. Sector-specific frameworks like HIPAA impose additional safeguards for protected health information. ISO/IEC 42001 provides a governance structure that accommodates these varying requirements without requiring separate compliance programs for each framework.

The AI management system established under ISO/IEC 42001 incorporates privacy requirements into AI lifecycle processes—ensuring that legal review occurs before model deployment, that privacy impact assessments address jurisdiction-specific requirements, and that data handling practices align with applicable legal frameworks. This integrated approach prevents the compliance fragmentation that occurs when organizations treat each privacy law as an isolated requirement rather than overlapping obligations requiring consistent governance.

4) Supporting Enterprise Audit Readiness

Compliance with the standard helps organizations build robust processes for the continuous monitoring and improvement of their AI systems, fostering trust and confidence among stakeholders. Enterprise customers conducting vendor assessments increasingly request evidence of AI governance—not aspirational commitments to responsible AI, but documented policies, implemented controls, and audit evidence demonstrating sustained compliance. ISO/IEC 42001 certification provides this evidence through independent third-party verification.

Organizations pursuing certification undergo audits examining governance structures, risk management processes, documentation practices, and operational controls. Achieving ISO/IEC 42001 certification means that an independent third party has validated that an organization is taking proactive steps to manage the risks and opportunities associated with AI development, deployment, and operation. For enterprise sellers, certification can reduce the burden of repetitive security questionnaires, because customers can reference the certificate and its scope rather than requiring a custom assessment for each engagement.

Machine Learning Governance and Accountability

Why Enterprise Clients Need Governance Beyond Traditional IT

Traditional IT governance addresses infrastructure availability, application security, and data protection. Machine learning introduces risk categories that existing governance frameworks do not comprehensively address. Model performance degradation occurs when production data distributions differ from training data—a phenomenon requiring ongoing monitoring rather than point-in-time security assessments. Algorithmic bias emerges from training data that reflects historical inequities or unrepresentative samples—requiring fairness evaluations beyond traditional security testing.
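Monitoring for this kind of degradation usually compares the distribution of production inputs with the training data. The Python sketch below uses the population stability index (PSI), one common drift metric; ISO/IEC 42001 does not prescribe it or any other metric. The bin edges are assumed to be fixed from the training data, and `eps` is a small floor that avoids taking the logarithm of zero.

```python
import math
from bisect import bisect_right


def bin_shares(values: list[float], edges: list[float]) -> list[float]:
    """Fraction of values in each bin; sorted inner edges define len(edges)+1 bins."""
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[bisect_right(edges, v)] += 1
    return [c / len(values) for c in counts]


def population_stability_index(expected: list[float], actual: list[float],
                               edges: list[float], eps: float = 1e-6) -> float:
    """PSI between training (expected) and production (actual) samples.

    PSI = sum over bins of (actual_share - expected_share) * ln(actual_share / expected_share)
    """
    psi = 0.0
    for e, a in zip(bin_shares(expected, edges), bin_shares(actual, edges)):
        e, a = max(e, eps), max(a, eps)  # floor empty bins to avoid log(0)
        psi += (a - e) * math.log(a / e)
    return psi


training = [0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8]
production = [0.8, 0.85, 0.9, 0.95, 0.9, 0.85, 0.8, 0.95]
edges = [0.25, 0.5, 0.75]  # assumed to be fixed from the training data
print(population_stability_index(training, training, edges))    # identical samples
print(population_stability_index(training, production, edges))  # shifted samples
```

A widely used rule of thumb treats a PSI below 0.1 as stable and above 0.25 as significant drift, but each organization would need to set and document its own thresholds as part of its monitoring controls.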

With increasing regulatory scrutiny, businesses need to proactively manage AI risks, including bias, data security and accountability. Enterprise clients purchasing AI-powered solutions face potential liability when vendor models produce discriminatory outcomes, expose confidential information, or make erroneous decisions affecting customers. ISO/IEC 42001 governance ensures that vendors implement controls addressing these AI-specific risks rather than relying on general IT security measures inadequate for machine learning systems.

Model Accountability and Transparency

The standard provides guidance to organizations that design, develop, and deploy AI systems on factors such as transparency, accountability, bias identification and mitigation, safety, and privacy. Model accountability requires establishing clear ownership for AI system outcomes—defining who bears responsibility when models behave unexpectedly, who approves changes to production models, and who maintains oversight of model performance metrics.

Traceability becomes essential for accountability. Organizations must document which training data produced which model version, which validation tests that version passed, which approvals authorized deployment, and which monitoring detected subsequent performance issues. This data lineage enables root cause analysis when incidents occur and demonstrates due diligence during regulatory inquiries or litigation. For enterprise sellers, traceability documentation answers customer questions about model provenance and provides audit evidence supporting vendor assurances about AI system reliability.
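The traceability chain described above maps naturally onto one lineage record per model version. In this illustrative sketch, `ModelVersion`, `trace`, and `untraceable` are hypothetical names, and the snapshot identifier stands in for whatever dataset hash or version ID an organization actually records.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class ModelVersion:
    """Hypothetical lineage record for one model version."""
    version: str
    training_data_snapshot: str  # e.g. a dataset hash or snapshot ID
    validation_tests_passed: list[str] = field(default_factory=list)
    approved_by: Optional[str] = None
    monitoring_alerts: list[str] = field(default_factory=list)


def trace(versions: dict[str, ModelVersion], version: str) -> dict:
    """Answer 'what produced this model, what did it pass, and who let it ship?'."""
    v = versions[version]
    return {
        "training_data": v.training_data_snapshot,
        "tests_passed": list(v.validation_tests_passed),
        "approved_by": v.approved_by,
        "monitoring_alerts": list(v.monitoring_alerts),
    }


def untraceable(versions: dict[str, ModelVersion]) -> list[str]:
    """Versions missing a training-data snapshot or a deployment approval."""
    return [
        name for name, v in versions.items()
        if not v.training_data_snapshot or v.approved_by is None
    ]


versions = {
    "v1": ModelVersion("v1", "snap-2025-01", ["accuracy", "fairness"], "model-owner"),
    "v2": ModelVersion("v2", "", ["accuracy"], monitoring_alerts=["drift"]),
}
print(trace(versions, "v1"))
print(untraceable(versions))
```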

Ethical Considerations and Bias Mitigation

ISO/IEC 42001 requires implementing AI governance policies, ensuring responsible practices like fairness, explainability and data transparency. Bias mitigation extends beyond technical debiasing techniques to encompass governance processes: diverse review teams evaluating model outputs for unintended bias, fairness metrics appropriate to specific use cases, and escalation procedures when bias concerns emerge during development or deployment.

ISO/IEC 42001 does not prescribe specific technical approaches to fairness—recognizing that appropriate fairness definitions vary by context and application. Instead, it requires organizations to establish governance processes ensuring that fairness considerations receive explicit attention throughout AI lifecycles, that stakeholders with relevant expertise participate in fairness evaluations, and that organizations document the fairness tradeoffs inherent in their model design choices. This governance-focused approach acknowledges that bias mitigation requires human judgment informed by domain expertise, not merely algorithmic adjustments.
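Because the standard leaves the choice of fairness definition to the organization, the metric itself is one of the documented design decisions. As one example, the sketch below computes the demographic parity difference, the gap in positive-decision rates between groups. It is a single, commonly used definition chosen here only for illustration; other definitions, such as equalized odds, can conflict with it, which is the kind of tradeoff the text above expects organizations to document.

```python
from collections import defaultdict


def selection_rates(decisions: list[int], groups: list[str]) -> dict[str, float]:
    """Positive-decision rate per group; decisions are 1 (positive) or 0."""
    positives = defaultdict(int)
    totals = defaultdict(int)
    for decision, group in zip(decisions, groups):
        totals[group] += 1
        positives[group] += decision
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_difference(decisions: list[int], groups: list[str]) -> float:
    """Largest gap in selection rate between any two groups; 0 means parity."""
    rates = selection_rates(decisions, groups)
    return max(rates.values()) - min(rates.values())


decisions = [1, 1, 0, 1, 0, 0, 1, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
print(selection_rates(decisions, groups))
print(demographic_parity_difference(decisions, groups))
```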

Implementing ISO/IEC 42001 in Your Organization

Steps to Adoption

Organizations pursuing ISO/IEC 42001 implementation begin with gap analysis—comparing current AI governance practices against standard requirements to identify control deficiencies. This includes identifying where AI is being used across the business—internal tools, customer-facing models, and third-party AI integrations. Many organizations discover AI deployments unknown to central governance teams, highlighting the need for comprehensive AI system inventories before establishing management controls.
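The discovery step amounts to reconciling what governance teams know about with what is actually running. This hypothetical sketch assumes discovery results arrive as sets of system identifiers per source (for example procurement records or code repositories); the source names and `reconcile_inventory` are illustrative.

```python
def reconcile_inventory(registered: set[str],
                        discovery_sources: dict[str, set[str]]) -> dict:
    """Compare the central AI inventory with systems found during discovery.

    discovery_sources maps a hypothetical source name (e.g. "procurement",
    "code_repos") to the AI system identifiers found in that source.
    """
    found_in: dict[str, list[str]] = {}
    for source, systems in sorted(discovery_sources.items()):
        for system in systems:
            found_in.setdefault(system, []).append(source)
    return {
        # In use but unknown to central governance: candidates for onboarding.
        "unregistered": {s: srcs for s, srcs in found_in.items()
                         if s not in registered},
        # Registered but not seen anywhere: possibly retired, renamed, or missed.
        "not_found": sorted(registered - set(found_in)),
    }


report = reconcile_inventory(
    registered={"support-chatbot", "credit-scoring"},
    discovery_sources={
        "procurement": {"vendor-llm-api", "support-chatbot"},
        "code_repos": {"support-chatbot", "churn-model"},
    },
)
print(report)
```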

Policy development follows gap analysis. Organizations establish the AI governance policies, risk management procedures, and lifecycle documentation standards required by the standard. Training ensures that personnel understand their roles within the AI management system—developers learn documentation requirements, product managers understand impact assessment procedures, and executives recognize their governance responsibilities. Tooling investments support policy implementation: tracking systems for AI risk registers, documentation repositories for model cards and data lineage, and monitoring platforms for production model performance.

The implementation roadmap typically spans 4-6 months for organizations with existing information security management systems and mature software development practices. Organizations starting from a minimal governance baseline require extended timelines—9-12 months—to establish foundational controls before pursuing certification. For enterprise sellers, this timeline planning must account for resource allocation across compliance, engineering, and business functions, recognizing that ISO/IEC 42001 implementation requires cross-functional collaboration rather than isolated compliance team effort.

Organizational Controls and Roles

ISO/IEC 42001 promotes responsible AI by involving compliance teams, AI developers, and risk management professionals in decision-making processes. Organizations establish AI governance steering committees with defined decision authority over model approvals, risk acceptance decisions, and policy exceptions. The standard does not mandate specific job titles, but dedicated roles commonly include AI risk managers responsible for maintaining risk registers and coordinating impact assessments, AI ethics officers providing guidance on fairness and bias concerns, and model owners accountable for specific AI system performance and compliance.

Clear ownership prevents the diffusion of responsibility that occurs when AI governance exists as shared responsibility without designated accountability. When model performance issues arise, when customers raise bias concerns, or when regulators request documentation, organizations need identified personnel with authority and resources to respond—not committee structures where accountability dissolves across multiple stakeholders.

Integrating with Existing Standards

Organizations already certified under ISO/IEC 27001 benefit from existing management system infrastructure. ISO/IEC 42001 follows the same harmonized clause structure as ISO/IEC 27001 and ISO 9001, so AI and ML teams already operating within those environments can fold it into a broader integrated management system. Document control procedures, internal audit processes, management review meetings, and corrective action workflows established for information security management extend to AI governance with adaptations for AI-specific requirements.

Integration reduces compliance burden compared to standalone implementations. Organizations leverage existing governance forums to address AI matters, incorporate AI controls into established risk management frameworks, and utilize current documentation systems for AI-specific artifacts. This integrated approach also ensures consistency—preventing situations where AI governance operates under different standards than broader organizational risk management, creating gaps and conflicts between overlapping requirements.

Business Value for Enterprise Clients

Strengthen Trust in AI Systems

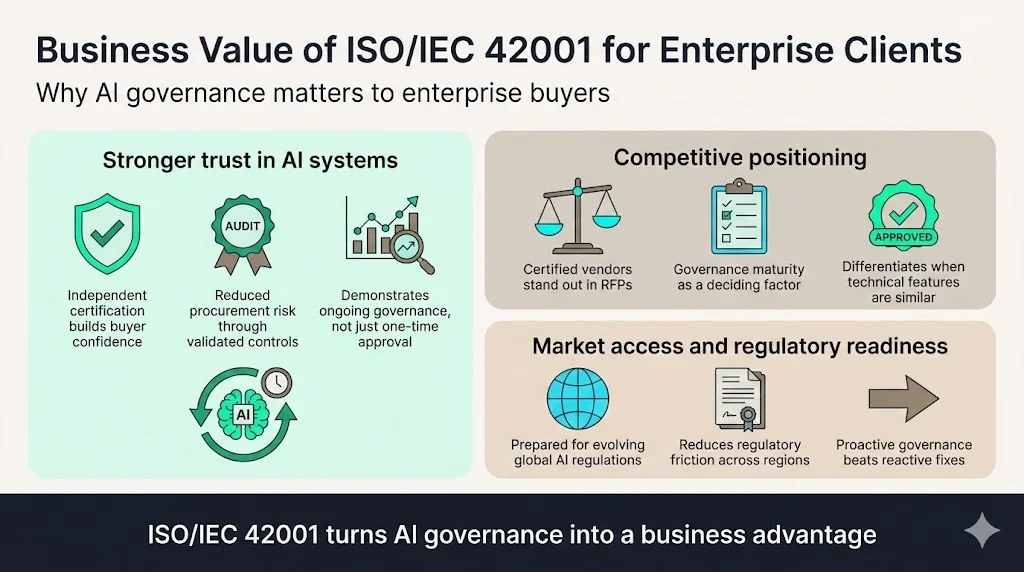

ISO/IEC 42001 helps organizations build trust, achieve AI compliance and align with international best practices. Enterprise buyers purchasing AI-powered solutions face increasing pressure from their own customers, regulators, and boards to demonstrate responsible AI practices. Vendor certification under ISO/IEC 42001 provides independent verification that reduces buyer risk—enabling procurement decisions supported by third-party audit evidence rather than vendor self-attestation.

Trust extends beyond initial purchase decisions to ongoing vendor relationships. Organizations deploying vendor AI systems into production environments require confidence that vendors maintain governance disciplines over time—that model updates undergo appropriate review, that performance monitoring detects issues before customer impact, and that incident response procedures address AI-specific failures. ISO/IEC 42001 certification with ongoing surveillance audits demonstrates this sustained governance commitment.

Competitive Positioning

Like the EU AI Act, ISO/IEC 42001 applies across all sectors and is relevant to governments, academia, and businesses that develop or deploy AI anywhere in the world. Enterprise buyers, particularly in regulated industries such as financial services, healthcare, and government, increasingly require AI governance evidence as a procurement prerequisite. Vendors without demonstrated AI governance risk disqualification during security reviews, regardless of technical capability.

ISO/IEC 42001 certification differentiates vendors in competitive evaluations. When enterprise buyers compare functionally similar AI solutions, governance maturity becomes a deciding factor. The vendor providing audit reports, control documentation, and independent certification evidence wins deals over competitors offering only assurances about responsible AI intentions. For enterprise sellers, this certification represents investment in competitive positioning that compounds over time as AI governance requirements intensify across industries and jurisdictions.

Better Market Access and Regulatory Readiness

ISO/IEC 42001 prepares organizations for future AI regulations by providing a comprehensive framework that emphasizes ethical and responsible AI use. By implementing ISO/IEC 42001, organizations establish policies and procedures that align with current regulatory requirements and anticipated future standards. Regulatory frameworks targeting AI systems continue evolving—the EU AI Act establishes comprehensive requirements for European markets, U.S. federal agencies issue sector-specific AI guidance, and jurisdictions worldwide develop AI-specific legislation.

Organizations implementing ISO/IEC 42001 establish governance infrastructure adaptable to emerging requirements rather than reactive compliance programs triggered by each new regulation. The risk management processes, impact assessment procedures, and documentation practices required by ISO/IEC 42001 provide foundations for demonstrating compliance with jurisdiction-specific AI laws as they take effect—reducing the scramble that occurs when organizations face new regulatory obligations without established governance disciplines.

Challenges and Practical Considerations

ISO/IEC 42001 implementation requires organizational change beyond deploying new tools or writing policies. Compliance teams, AI developers, and risk management professionals must all take part in decision-making. Engineering teams accustomed to rapid iteration cycles must adapt to governance processes requiring impact assessments before deployment. Product teams focused on feature velocity must incorporate time for risk reviews and documentation into release planning.

Cultural resistance emerges when personnel perceive governance as a bureaucratic impediment rather than a risk management discipline. Addressing this resistance requires leadership messaging emphasizing that ISO/IEC 42001 governance protects the organization from regulatory penalties, reputational damage, and customer attrition, and is not merely compliance overhead. Organizations that succeed with implementation frame AI governance as enabling sustainable AI deployment rather than constraining innovation.

Resource allocation presents practical challenges. ISO/IEC 42001 implementation requires dedicated personnel time across multiple functions—compliance for policy development, engineering for control implementation, legal for regulatory alignment, and business leadership for governance oversight. Organizations underestimating this resource requirement face implementation delays when personnel juggle governance work alongside primary responsibilities. Realistic implementation planning accounts for sustained effort measured in hundreds of hours rather than isolated projects completed between other priorities.

ISO/IEC 42001 sets out a structured way to manage the risks and opportunities associated with AI, balancing innovation with governance. In practice, this means calibrating control intensity to risk levels. Organizations deploying low-risk AI applications, such as internal productivity tools with minimal data exposure, can implement streamlined controls. Organizations deploying high-risk systems, such as automated lending decisions, medical diagnosis support, or content moderation at scale, require comprehensive governance commensurate with the potential harm. Effective implementation applies proportionate controls rather than uniform bureaucracy regardless of risk context.
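Proportionate controls can be expressed as cumulative tiers, where each higher tier adds requirements on top of the lower ones. The tiers, control names, and the toy `classify` rule below are hypothetical; in a real AIMS the tier would follow from the documented risk and impact assessments.

```python
from enum import Enum


class RiskTier(Enum):
    LOW = 1
    MEDIUM = 2
    HIGH = 3


# Hypothetical control sets; each tier adds to the controls of the tiers below it.
TIER_CONTROLS = {
    RiskTier.LOW: ["inventory entry", "named owner", "acceptable-use review"],
    RiskTier.MEDIUM: ["documented impact assessment", "pre-release validation"],
    RiskTier.HIGH: ["steering committee approval", "fairness evaluation",
                    "continuous drift monitoring", "human review of decisions"],
}


def classify(uses_personal_data: bool, makes_consequential_decisions: bool) -> RiskTier:
    """Toy rule for illustration; a real tier comes from documented assessments."""
    if makes_consequential_decisions:
        return RiskTier.HIGH
    if uses_personal_data:
        return RiskTier.MEDIUM
    return RiskTier.LOW


def required_controls(tier: RiskTier) -> list[str]:
    """Cumulative controls for a tier, so higher risk always means more controls."""
    controls: list[str] = []
    for t in RiskTier:  # Enum iteration follows definition order: LOW, MEDIUM, HIGH
        if t.value <= tier.value:
            controls.extend(TIER_CONTROLS[t])
    return controls


internal_tool = classify(uses_personal_data=False, makes_consequential_decisions=False)
lending_model = classify(uses_personal_data=True, makes_consequential_decisions=True)
print(internal_tool, required_controls(internal_tool))
print(lending_model, len(required_controls(lending_model)))
```

Making the tiers cumulative is a deliberate design choice in this sketch: it guarantees that reclassifying a system to a higher tier can only add controls, never silently drop one.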

Conclusion

The ISO/IEC 42001 standard offers organizations the comprehensive guidance they need to use AI responsibly and effectively, even as the technology is rapidly evolving. For enterprise sellers deploying AI-powered solutions in 2026, this standard represents the transition from aspirational ethics frameworks to auditable management systems. Organizations implementing ISO/IEC 42001 establish governance structures addressing AI-specific risks—algorithmic bias, model drift, explainability requirements, and data protection obligations—through systematic risk management, impact assessments, and lifecycle controls.

The standard supports data protection compliance by operationalizing privacy principles throughout AI lifecycles, ensuring that legal requirements inform technical decisions rather than functioning as after-the-fact compliance checks. ISO/IEC 42001 certification provides enterprise buyers with independent verification of vendor AI governance, reducing procurement risk and enabling purchase decisions supported by audit evidence. As AI regulations intensify globally and enterprise customers demand demonstrable AI governance, organizations implementing this standard position themselves for sustained market access and competitive differentiation. Forward planning requires recognizing that AI governance matures through iterative implementation rather than one-time certification—organizations succeeding with ISO/IEC 42001 embed continuous improvement into operational disciplines, ensuring that governance evolves alongside AI capabilities and regulatory expectations.

FAQs

1) How do you manage machine learning systems responsibly?

Responsible machine learning management requires implementing governance practices throughout system lifecycles. Organizations conduct risk assessments identifying potential harms before model deployment, perform impact evaluations addressing fairness and privacy concerns, establish documentation standards capturing data lineage and model decisions, implement continuous monitoring detecting performance degradation or bias drift, and maintain incident response procedures addressing AI-specific failures. AI governance should be built into every process in the AI development and maintenance journey. These practices move beyond point-in-time compliance checks to sustained operational disciplines integrated with existing risk management and quality assurance functions.

2) What problems does ISO/IEC 42001 solve for enterprises?

ISO/IEC 42001 establishes a comprehensive framework for managing artificial intelligence technologies within an organization. It addresses inconsistent AI governance across organizational units, preventing situations where different teams deploy AI systems under varying standards and create compliance gaps and risk exposure. It helps organizations demonstrate AI governance to external stakeholders by providing certification evidence that supports customer security reviews and regulatory inquiries. It also replaces reactive AI governance with proactive risk management processes that identify and mitigate issues before deployment rather than responding to incidents after customers are affected.

3) How does ISO/IEC 42001 support data protection obligations?

ISO/IEC 42001 helps align policies, controls, and documentation with privacy principles and legal requirements through integrated governance processes. The standard requires impact assessments evaluating privacy implications before AI system deployment, and implementations typically include access controls restricting personal data to authorized personnel, data lifecycle management governing retention periods, and transparency documentation enabling meaningful explanations of automated decisions. An ISO/IEC 42001 certified AI management system strengthens controls over the quality, security, and transparency of AI systems. These controls operationalize data protection requirements throughout AI lifecycles rather than treating privacy as an isolated legal review conducted separately from technical development.

4) Who should adopt ISO/IEC 42001?

ISO/IEC 42001 is designed for entities providing or utilizing AI-based products or services, ensuring responsible development and use of AI systems. Organizations that develop AI models for commercial deployment, vendors providing AI-powered software or services to enterprise customers, enterprises deploying AI systems affecting employees or customers, and organizations operating in regulated industries where AI decisions create compliance obligations should adopt this standard. Organizations using third-party AI services—even without developing models internally—benefit from ISO/IEC 42001 implementation by establishing governance over AI procurement, vendor management, and integration with existing systems. The standard allows both large corporations and startups to effectively manage AI risks, comply with regulations, and build trust with stakeholders.
