Most enterprise buyers now request assurance artefacts before they sign a contract. They look for evidence that a provider can protect their data, not just promises. Yet many organisations treat classification as an afterthought. They stand up policies on paper, but when an auditor asks where sensitive data lives, how it is labelled and who can access it, they scramble. This ISO 27001 Data Classification Guide provides a clear pathway for security leaders who need more than a checkbox. It draws on international standards, recent breach statistics and patterns from Konfirmity’s 6,000‑plus audits to show why classification must anchor a modern information security management system (ISMS). As the global average cost of a breach fell to $4.44 million in 2025, businesses still face rising penalties and customer churn when they mishandle data. Proper classification links technical controls to real risk, accelerates deals, and reduces the time spent on audits.

What ISO 27001 says about data classification

Annex A control 5.12

ISO 27001:2022 sets out a single control for classification—Annex A 5.12. The standard states that information should be classed according to the organisation’s security needs based on confidentiality, integrity, availability and requirements from interested parties. In practice, this means you must identify what data you hold, assess how harmful a loss of confidentiality, integrity or availability would be, and then treat that data accordingly. ISO 27001 Data Classification Guide is more than a checklist: it is a risk‑driven process.

The accompanying standard, ISO 27002, provides more detail. It explains that classification serves two functions: it enables the organisation to determine the appropriate level of protection for each asset and it ensures resources are directed where they matter most. It also advises using terms that make sense to staff (public, internal, confidential) and documenting all processes in the Statement of Applicability (SoA). Classification is therefore not optional in an ISMS; it is a preventive measure built into Annex A and should be reflected in your policies, risk assessments, and audits.

Why classification underpins trust and compliance

Enterprise clients map data sensitivity directly to business risk. If your organisation processes personal health records, payment information or intellectual property, a breach can have a serious or even catastrophic effect. FIPS 199, a U.S. federal standard referenced by many auditors, defines low, moderate and high impact tiers for confidentiality, integrity and availability. A low impact loss might cause minor financial damage or inconvenience; a moderate impact breach could result in significant damage or legal exposure; and a high impact event could cause severe financial losses or endanger lives. Although ISO 27001 doesn’t use the same terminology, the logic is similar: you should align controls with the potential harm from losing confidentiality, integrity or availability.

Clients have a strong incentive to check that you have done this work. Regulators do too. Under the General Data Protection Regulation (GDPR) organisations can be fined up to 4 percent of global revenue or €20 million for failing to protect personal data. HIPAA imposes hefty penalties when protected health information (PHI) is mishandled. The U.S. Department of Health and Human Services advises healthcare providers to classify electronic PHI as sensitive, internal use or public, control access and train staff. In 2025 the cost of a breach in the U.S. reached $10.22 million, and healthcare organisations paid an average of $7.42 million. These figures reflect direct remediation costs as well as regulatory fines and lost clients. Classification helps avoid such outcomes by ensuring the right controls are applied before an incident occurs.

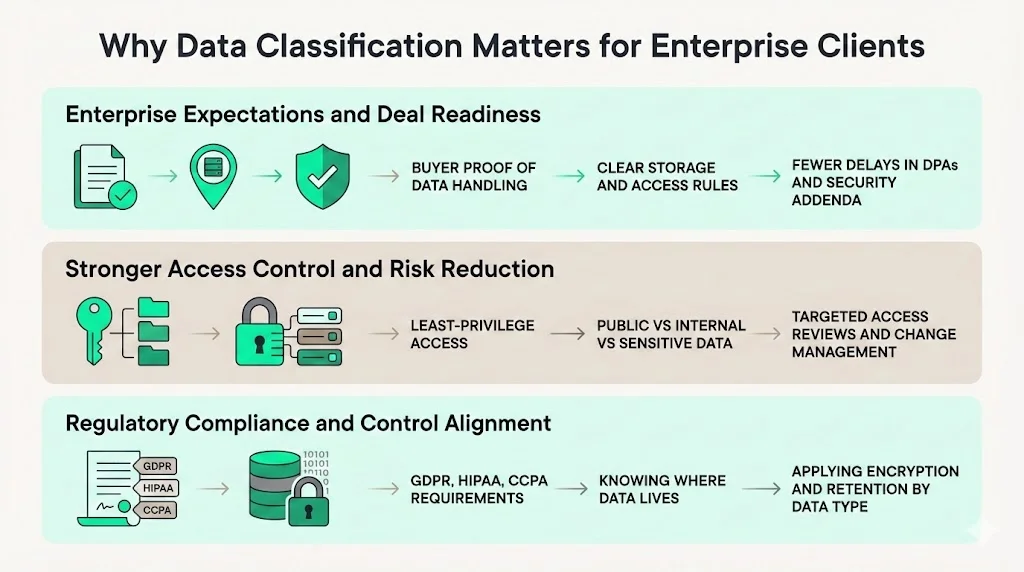

Why data classification matters for enterprise clients

Enterprise and healthcare buyers demand proof of operational security. They want to know where their data will be stored, who will see it and how it will be protected. Without clear classification, your teams risk granting broad access, using weak encryption, or storing sensitive records on unprotected servers. That not only increases breach risk; it also delays sales. Procurement questionnaires ask for data classification policies and evidence that those policies are enforced. When classification is vague or inconsistent, security addenda and data protection agreements (DPAs) can stall.

Data classification also strengthens access control. By tying permissions to categories, you implement the principle of least privilege and reduce the attack surface. Public information can be shared widely, but internal business data should not be exposed on public websites; sensitive client data must be kept in encrypted storage with restricted access. Classification informs these choices and ties them to business impact. As a result, your access reviews and change management become more efficient because you can target the areas that matter most.

Regulatory compliance is another driver. Many standards, from GDPR to the California Consumer Privacy Act (CCPA) and HIPAA, require that organisations know where personal data resides and protect it accordingly. A Palo Alto Networks article stresses that compliance with GDPR, HIPAA and CCPA depends on proper classification. After categorising data, organisations can apply controls like encryption, access controls and secure retention. Classification therefore acts as the bridge between legal requirements and technical controls. It also helps allocate resources: more costly controls such as hardware security modules or tokenisation can be reserved for high‑risk data sets.

Core concepts in ISO 27001 data classification

Information asset inventory

Classification begins with an inventory of assets. You cannot protect what you cannot see. State government guidance on classification emphasises that security categorisation should be integrated with business and IT management processes and feed into Business Impact Analyses (BIAs). It also points out that understanding which information types support which lines of business helps avoid under- or over‑protecting systems. In practice, building an asset register means listing systems, databases, SaaS applications, documents, backups and any other repository where business data resides. Each item should have an owner who is accountable for its classification and protection.

During Konfirmity engagements we see that this step is often rushed. Teams may document product databases but forget analytics logs or vendor integrations. To avoid blind spots, use tooling that scans cloud providers and file repositories for unknown assets. Supplement automated discovery with workshops where engineers and business unit leaders map out where client and customer data flows. Assign owners: for example, the head of finance owns the billing system, while the head of engineering owns the code repository. Ownership ensures someone is responsible for classifying and protecting each item.

Classification criteria

The primary basis for classification is confidentiality. Ask: if this information were disclosed, how harmful would that be? But confidentiality is not the only consideration. Integrity and availability matter too. FIPS 199 explains that impact tiers should be assigned for each objective. A file containing personal data may be subject to a high confidentiality impact, but its availability impact could be low if it is archival. Conversely, a configuration database may not contain personal data but might be essential to uptime; its availability impact could therefore be significant. Your criteria should capture business impact (financial loss, harm to customers, legal exposure) and regulatory impact (e.g., fines for mishandling personal or health information). Consider customer trust as well: losing design plans may not incur a statutory fine, but it could lead to lost revenue and reputational damage.

At Konfirmity we use a risk-based scoring model to map assets to categories. For each asset we score confidentiality, integrity and availability on a 1–5 scale and add legal/regulatory flags if the data contains protected information. We then assign a category (public, internal, confidential or restricted) based on the highest score. This simple matrix ensures consistency across teams and aligns with Annex A 5.12.

Roles and responsibilities

Who decides how a piece of data should be classed? In ISO 27001 the accountable party is the information owner. This may be the product manager for a software platform, the head of HR for employee data or the marketing lead for campaign analytics. Security teams facilitate the process, provide templates and handle disputes, but they do not own all data. Business unit leaders have the context to decide what constitutes a restricted dataset and what can be shared internally. Once categories are assigned, the security team designs controls and monitors compliance.

In larger enterprises we often adopt a RACI model—Responsible, Accountable, Consulted, Informed—to clarify roles. Data owners are responsible for classification and accountable for decisions. Security and compliance teams are consulted and handle implementation. End users are informed about classifications and handling rules. This structure prevents confusion, ensures accountability and satisfies auditors who need to see evidence of ownership.

Common classification schemes and categories

ISO 27001 does not prescribe specific labels. Organisations can adapt schemes to their context as long as they align with confidentiality, integrity and availability. A typical approach uses four tiers:

This scheme echoes the tiers used by Clemson University’s classification policy. The policy states that public data is intended for public disclosure; internal data is not generally public and its compromise would have minimal impact; confidential data must be protected because its loss could have adverse impacts; and restricted data is highly sensitive, governed by law and subject to significant penalties if breached. You can rename categories to suit your culture (e.g., “secret” instead of “restricted”), but keep the number of tiers manageable so staff can make consistent choices. Some organisations use five tiers (adding a fifth for archival or deprecated data), but four is often sufficient.

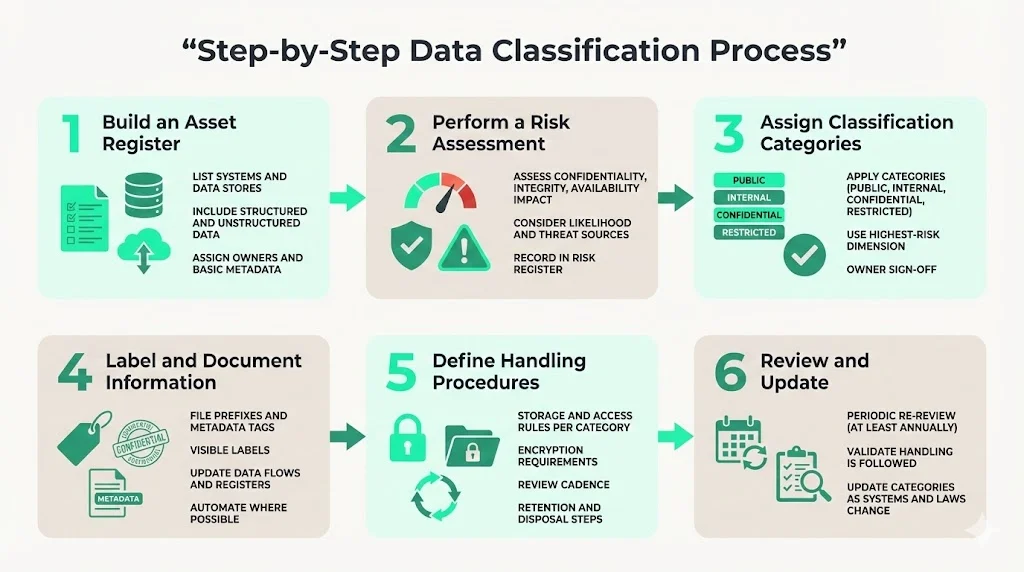

Step‑by‑step data classification process

Konfirmity’s methodology borrows from NIST, Secureframe and real-world audits. The process has six steps, each building on the last.

Step 1 — Build an asset register

List every system, database, service and document that stores or processes business data. Use automated discovery tools across cloud accounts, on‑premises servers and SaaS platforms to find unknown data stores. Include structured and unstructured data: code repositories, customer support transcripts, analytics logs, emails and backups. Assign an owner to each item and record metadata such as data type, primary user and regulatory relevance. As the Connecticut state guidance notes, this early categorisation feeds into business impact analyses, system design and contingency planning.

Step 2 — Perform a risk assessment

For each asset, evaluate the potential harm from losing confidentiality, integrity or availability. Use FIPS 199’s definitions of low, moderate and high impacts as a reference. Consider: would disclosure lead to regulatory fines? Could tampering cause financial fraud? Would downtime stop your product? Also assess the likelihood of exposure based on threat models (e.g., external hackers, malicious insiders, vendor compromise). The goal is to produce a risk profile that informs how strict your controls should be. Document this assessment and tie it back to your risk register and ISMS.

Step 3 — Assign classification categories

Using your defined scheme (public, internal, confidential, restricted), apply categories to each asset based on the highest risk dimension. If an asset scores high on confidentiality but low on availability, treat it as restricted. Document the rationale and have data owners sign off. This ensures accountability and simplifies audits. Remember that classification can change: a dataset may start as confidential but become public once anonymised. The Clemson policy notes that context matters and classifications can change over time.

Step 4 — Label and document information

Once categories are assigned, label data accordingly. This may involve adding prefixes to file names, metadata tags in document properties, watermarks on PDFs or classification fields in database schemas. The U.S. health sector’s classification poster emphasises the importance of labelling data so staff know how to handle it. Ensure labels are visible but not intrusive. Update documentation such as asset registers, data flow diagrams and procedure documents so that classification is clear. Automate labelling wherever possible through data loss prevention (DLP) tools and cloud tagging.

Step 5 — Define handling procedures

For each category, define how data should be stored, accessed, transmitted, retained and disposed of. For example, public data can live on public websites with no access controls. Internal data should be stored in systems requiring authentication and should not be shared outside the organisation. Confidential data might require encryption at rest and in transit, access review every quarter and logging of all access. Restricted data should only be accessible to a small set of individuals, use strong cryptographic controls and be removed when no longer needed. Palo Alto Networks notes that classification informs decisions about encryption, backup and access control. Document these procedures and train staff. Integrate them into your development pipelines, backup strategies and vendor selection criteria.

Step 6 — Review and update

Classification is not a one‑time exercise. Systems evolve, new regulations are enacted and business priorities shift. Schedule periodic reviews—at least annually, but more often for high‑risk data. During these reviews, re‑assess the risk profile, verify that handling procedures are followed and update categories if necessary. In Konfirmity’s experience, quarterly reviews tie in well with access reviews and change management cycles. Use feedback from audits and incidents to refine categories and controls.

Integrating classification with controls

Classification is only useful if it influences controls. Start with access management. Use your categories to drive role‑based access control (RBAC) policies: public data can be read by anyone; internal data is available to staff; confidential and restricted data require specific roles and just‑in‑time access. Keep an eye on access drift and enforce periodic access reviews. Tie encryption practices to categories: store confidential data in encrypted databases, use hardened key management systems for restricted data, and require full-disk encryption for laptops handling sensitive information. Map backup retention periods to categories: public data may be retained indefinitely; restricted data should be removed as soon as legal requirements allow.

Classification should also feed into incident response and monitoring. High‑risk data deserves enhanced logging and alerting. When a high‑risk asset is accessed outside usual hours or by an unusual user, triggers should fire. Your vulnerability management processes can prioritise remediation on systems holding restricted data. And because classification is part of the Statement of Applicability, it must be reflected in internal audits. Auditors will want to see evidence that classification decisions led to specific control implementations.

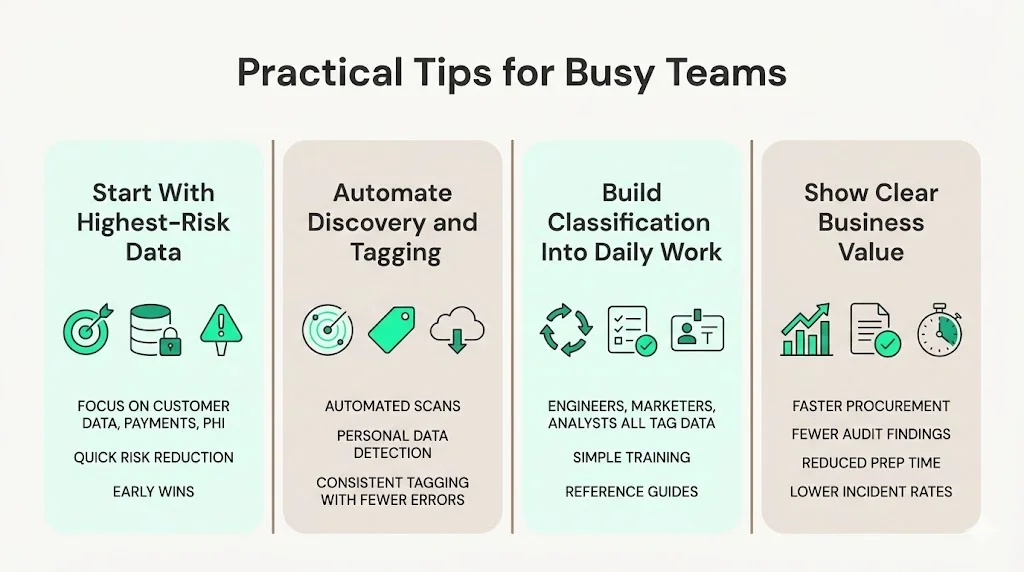

Practical tips for busy teams

- Start where the risk is greatest. Instead of trying to classify every file in your environment, begin with systems that hold customer data, payment details or PHI. This ensures quick risk reduction and early wins.

- Use automation for discovery and tagging. Cloud providers and DLP tools can scan storage buckets, databases and SaaS applications, identify personal data and attach tags. This reduces human error and accelerates classification.

- Train staff and integrate classification into workflows. Classification fails when employees think it’s someone else’s job. Make it part of daily work: engineers tagging new tables, marketers labelling new documents and data analysts verifying categories during builds. Use training sessions and reference cards. The HHS poster emphasises the need to train employees on classification.

- Show business value. Classification should not be a compliance tax; it should reduce incidents, speed up procurement and demonstrate maturity to clients. Use metrics: decreased time to onboard new clients, fewer audit findings and lower incident rates. In Konfirmity’s programs, organisations often cut audit preparation time by 75 percent and reduce findings by half because classification streamlines control design.

Measuring success

- Coverage and accuracy. Track the percentage of assets that have been classified and the proportion with accurate categories (verified via sampling or automated scanning). Aim for full coverage of critical systems and high accuracy for high‑risk data.

- Policy compliance. Monitor whether handling procedures are followed. This includes checking encryption, access controls and retention against category requirements. DLP tools can help identify violations, such as confidential data being emailed to external addresses.

- Audit readiness and evidence. Internal audit teams should test classifications and associated controls. External auditors will ask for evidence that categories are applied consistently and that controls match the categories. Prepare by maintaining up‑to‑date registers, documented decisions and proof of enforcement. A strong classification programme shortens audit cycles because evidence is readily available.

- Feedback and continuous improvement. Use incident post‑mortems, penetration tests and vulnerability management reports to update classification schemes. If a near miss reveals that an asset was more sensitive than thought, adjust its category. If staff find the scheme confusing, simplify it. Classification is living documentation.

Conclusion

In the era of procurement questionnaires, data protection agreements and increasing regulatory scrutiny, classification is no longer a nice‑to‑have. It is a core part of modern security practice. Without it, you cannot assign controls, you cannot show auditors that you are protecting the right data, and you risk paying millions in breach costs. This ISO 27001 Data Classification Guide has shown how to build a practical programme: start with an asset inventory, use risk‑based criteria, involve the right people, apply a simple scheme and integrate classification into your controls. As a human‑led managed service, Konfirmity implements these steps inside your stack, manages them year‑round and provides continuous evidence. We do not merely deliver a policy—we operate the controls every day. Security that looks good in documents but fails under incident pressure is a liability. Build the programme once, run it continuously, and let compliance follow.

FAQ

1. What is ISO 27001 data classification?

It is the process of categorising information assets according to confidentiality, integrity and availability needs, based on Annex A 5.12. The goal is to assign the right protection to the right data.

2. How many categories should a company use?

ISO 27001 doesn’t specify a number; most organisations choose three or four tiers (e.g., public, internal, confidential, restricted) as described in Clemson’s policy. Keep the scheme simple so staff can apply it consistently.

3. Is classification mandatory for ISO 27001 certification?

Yes, Annex A 5.12 requires information to be classed based on security needs. Auditors will expect to see a documented policy and evidence of application.

4. Who is responsible for classifying information?

Data owners—business unit leaders or product managers—are accountable. Security teams provide frameworks and support but do not own all data. A RACI model can clarify roles.

5. What’s the difference between classification and labelling?

Classification is the decision about which category an asset falls into, while labelling is the act of marking the asset (metadata, tags, watermarks) so people know its category. Labelling communicates handling rules.

6. How often should categories be reviewed?

At least annually or when there are major changes such as new systems, regulations or incidents. High‑risk data may warrant quarterly review, as advised by Secureframe.

7. How does classification help with risk assessment?

It translates abstract risk scores into concrete handling rules. By assigning assets to categories based on confidentiality, integrity and availability, you can prioritise controls and remediation efforts.

8. Can the same data have different categories in different contexts?

Yes. A dataset may be confidential internally but could become public once anonymised. Context and aggregation matter. Classifications can change over time.

9. What are common mistakes in implementing classification?

Rushing the asset inventory, defining too many categories, failing to train staff and not integrating classification with controls. These pitfalls lead to inconsistent application and audit gaps.

10. How does classification support compliance and audits?

It provides the foundation for demonstrating that controls match risks. Regulators like GDPR and HIPAA require knowledge of data location and sensitivity. Auditors look for evidence that data with higher risk receives stronger protections.

.svg)

.svg)

.svg)