Most enterprise buyers now request assurance artifacts before procurement. In healthcare the pressure is even stronger, because any software that touches patient data must satisfy strict privacy requirements. HIPAA Secure SDLC work has moved from a checkbox exercise to an operational necessity. Healthcare breaches continue to rise: 2023 set a record with 168 million patient records exposed, and hacking accounted for nearly 80% of breaches. In 2025 the average cost of a healthcare breach reached US$7.42 million, the highest of any industry, and containing such incidents took 279 days on average. With regulators cracking down and buyers demanding evidence, building security into your lifecycle isn’t optional.

This article gives a working plan to develop software that handles protected health information (PHI) responsibly. We explain why HIPAA compliance matters, outline what “HIPAA‑secure” means, contrast a standard software life cycle with a secure one, and provide a six‑phase framework that embeds privacy, encryption, access control, risk management and incident response into everyday engineering. Real scenarios, sample templates and metrics show how to make this framework part of day‑to‑day work. By following this guide, you can integrate privacy requirements, encryption and access controls from the start, reduce costs, and build applications that win trust.

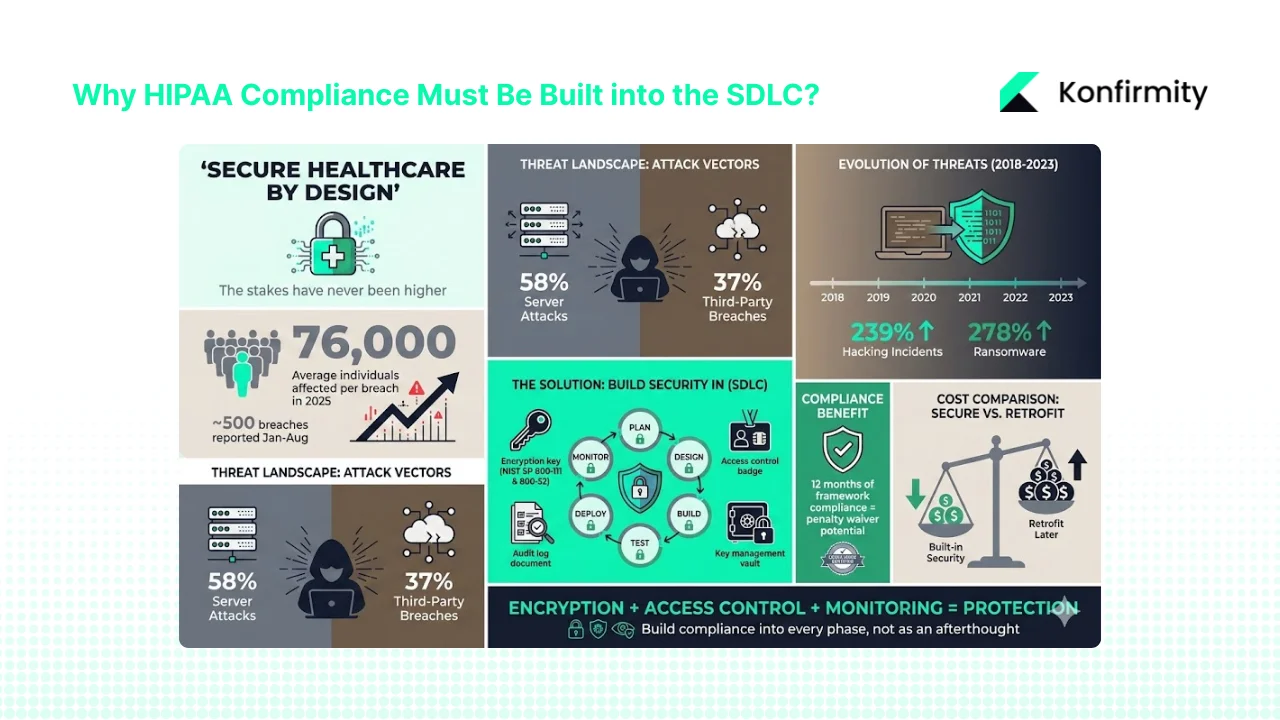

Why HIPAA Compliance Must Be Built into the SDLC

Patient data is among the most valuable and sensitive data sets. Health records fetch high prices on criminal markets, and the consequences of exposure are severe. In 2025 the average breach affected 76,000 individuals, and nearly 500 breaches of unsecured PHI were reported to HHS between January and August. Attackers target network servers and business associates: 58% of reported breaches involved servers and 37% involved third parties. Fines and corrective action plans from the HHS Office for Civil Rights can be debilitating, and reputational damage hurts revenue and partnerships. Covered entities and business associates must therefore protect data in transit and at rest, enforce least‑privilege access, monitor usage and respond quickly when something goes wrong.

Common risks and why encryption and access control matter

Healthcare breaches have shifted from lost laptops to sophisticated hacking. Between 2018 and 2023, hacking‑related incidents rose by 239% and ransomware attacks by 278%. The HIPAA Journal notes that widespread adoption of data encryption has reduced breaches caused by lost devices. Encryption that follows NIST SP 800‑111 for data at rest and SP 800‑52 for data in transit matches HHS guidance on rendering PHI unreadable to unauthorised people, so losing an encrypted device is generally not a reportable breach as long as the keys were not also compromised. A 2021 amendment to the HITECH Act adds a second incentive: HHS must consider whether an organisation had recognised security practices in place for the previous 12 months when setting fines and audit outcomes. Risk also comes from inside: improper access, inadequate audit logs and weak key management all leave patient records exposed. Building controls early is cheaper than retrofitting them later.

A practical agenda:

Software teams already operate under tight deadlines. This guide is designed for them. It shows how to weave compliance into existing workflows without endless bureaucracy. We share patterns learned across more than 6,000 audits over 25 years of combined experience at Konfirmity. The focus is on real control implementation, not tick‑boxes. We’ll demonstrate how strong encryption, well‑designed access policies, systematic risk assessments and continuous monitoring combine to make a HIPAA Secure SDLC routine.


What “HIPAA‑secure” Means in Practice

Protected health information (PHI) and ePHI

PHI includes any information that can identify an individual and relates to physical or mental health, care provided or payment for care. The HIPAA Privacy Rule protects PHI in any form—electronic, paper or verbal. Electronic PHI (ePHI) covers data stored in databases, files, mobile devices, email or transmission channels. Under the Security Rule, regulated entities must develop policies to ensure the confidentiality, integrity and availability of all ePHI they create, receive or transmit.

The five main HIPAA rules and how they map to software practices

- Privacy Rule – sets national standards for how PHI can be used and disclosed. Patients have the right to access and request corrections to their records. Software must support access logging, consent management and data minimisation.

- Security Rule – covers administrative, physical and technical safeguards. Teams must develop policies, ensure confidentiality and integrity of ePHI, identify threats, and modify measures as risks change. Technical safeguards include unique user identifiers, access controls, audit logging and encryption.

- Breach Notification Rule – when unsecured PHI is breached, requires notice to affected individuals, to HHS and, if more than 500 residents of a state or jurisdiction are affected, to prominent media outlets. Individual notices must go out without unreasonable delay and no later than 60 calendar days after discovery. Business associates must also notify covered entities of breaches they discover.

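- Enforcement Rule – sets out how HHS investigates possible violations and imposes civil monetary penalties, including the tiered penalty structure introduced by the HITECH Act. Since the Omnibus Rule, these penalties apply to business associates as well as covered entities.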

- Omnibus Rule – made business associates directly liable for compliance, strengthened limits on uses and disclosures and expanded individuals’ rights.

Mapping these rules to development practices means treating patient data as a controlled asset at every stage: classify data flows, use strong encryption in transit and at rest, enforce least‑privilege access, maintain audit logs, implement incident response plans and manage vendor contracts.

Why a Standard SDLC Isn’t Enough

A traditional software development life cycle moves from requirements gathering through design, coding, testing, deployment and maintenance. Security and privacy are often considered towards the end or addressed only during testing. In the healthcare context this reactive approach fails for three reasons:

- Delayed discovery of risks – security flaws discovered at testing or after deployment are expensive to fix and may already have exposed PHI. Early assessments catch design flaws when changes are cheap.

- Inadequate controls – without integrating access controls, encryption and audit mechanisms into the architecture, developers may build systems that cannot be retrofitted easily.

- Regulatory gaps – regulators expect evidence of ongoing risk management, training, vendor oversight and incident response. Standard life cycles seldom produce the level of documentation needed to satisfy auditors.

A HIPAA Secure SDLC shifts the focus left. Security and compliance are built into each phase, with checklists, templates and evidence collection aligned to HIPAA requirements. This approach reduces later costs, shortens audit timelines and strengthens buyer confidence.

Framework: A Six‑Phase HIPAA Secure SDLC for Healthcare Software

This framework is designed for teams of any size. Each phase includes important tasks, controls, deliverables and example questions you can use to assess readiness. Adjust the depth based on project complexity and risk profile.

Phase 1 – Planning & Requirements Gathering

Main tasks:

- Engage stakeholders to understand business objectives and workflows involving PHI.

- Map patient data flows: identify where data is collected, stored, transmitted and processed.

- Perform a regulatory gap analysis covering HIPAA, the HITECH Act, local regulations and contractual obligations (e.g., business associate agreements).

Security & compliance controls:

- Classify data by sensitivity: differentiate between PHI and non‑PHI.

- Define minimum necessary access: limit how much data each role needs to perform its tasks.

- Identify encryption needs and specify whether ePHI must be encrypted at rest and in transit. NIST SP 800‑66r2 highlights the importance of identifying systems that contain ePHI and ensuring access is limited to authorised users.

- Plan audit logging for all PHI access events and define incident response expectations.
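To make "minimum necessary" concrete, the sketch below filters a patient record down to the fields a role is allowed to see. The role names, field names and the `MINIMUM_NECESSARY` mapping are hypothetical examples, not a prescribed schema; in a real system the mapping would come from the requirements specification and be enforced on the server, never in the client.

```python
from typing import Any, Mapping

# Hypothetical role-to-field mapping derived from the requirements specification.
# Fields not listed for a role are never returned (deny by default).
MINIMUM_NECESSARY: dict[str, frozenset[str]] = {
    "billing_clerk": frozenset({"patient_id", "insurance_id", "billing_codes"}),
    "nurse": frozenset({"patient_id", "name", "allergies", "medications"}),
    "physician": frozenset(
        {"patient_id", "name", "allergies", "medications", "diagnoses", "lab_results"}
    ),
}


def minimum_necessary_view(record: Mapping[str, Any], role: str) -> dict[str, Any]:
    """Return only the fields of `record` that `role` is permitted to see."""
    allowed = MINIMUM_NECESSARY.get(role, frozenset())  # unknown roles see nothing
    return {name: value for name, value in record.items() if name in allowed}


record = {
    "patient_id": "P-1001",
    "name": "Jane Doe",
    "insurance_id": "INS-42",
    "billing_codes": ["99213"],
    "diagnoses": ["E11.9"],
    "allergies": ["penicillin"],
}
print(minimum_necessary_view(record, "billing_clerk"))
# {'patient_id': 'P-1001', 'insurance_id': 'INS-42', 'billing_codes': ['99213']}
```

Keeping the mapping as data rather than scattered `if` statements also makes it easy to attach to the requirements specification as evidence.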

Deliverables:

- Requirements specification: document functional requirements and privacy constraints.

- Data flow diagrams: illustrate PHI flows across modules, external systems and third parties.

- Risk register stub: initial list of identified risks with owners and planned mitigations.

Checklist examples:

- Does the system collect ePHI? If so, what fields and under what consent?

- Have minimum‑necessary rules been documented for each role?

- Is there a business associate agreement requirement?

Phase 2 – Design

Main tasks:

- Design system architecture, ensuring PHI storage and transmission paths are secure. Include logical segregation between development, test and production environments.

- Design user interfaces with privacy in mind (e.g., avoid over‑display of PHI, use session timeouts).

- Define the security architecture covering encryption, access control and audit log structure. NIST SP 800‑66r2 emphasises that access control must limit data availability to authorised users and that unique identifiers should trace actions to specific users.
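Session timeouts are easy to specify in a design and easy to forget in code. A minimal sketch of an idle‑timeout check, assuming a hypothetical 15‑minute policy and an in‑memory `Session` object; a production system would usually enforce this in its session store or identity provider instead.

```python
import time
from dataclasses import dataclass, field
from typing import Optional

# Hypothetical policy value; choose the real timeout through your risk assessment.
IDLE_TIMEOUT_SECONDS = 15 * 60


@dataclass
class Session:
    user_id: str
    last_activity: float = field(default_factory=time.monotonic)

    def is_expired(self, now: Optional[float] = None) -> bool:
        """True once the session has been idle longer than the policy allows."""
        current = time.monotonic() if now is None else now
        return current - self.last_activity > IDLE_TIMEOUT_SECONDS

    def touch(self, now: Optional[float] = None) -> None:
        """Record user activity, which resets the idle timer."""
        self.last_activity = time.monotonic() if now is None else now


# Usage with explicit clock values (in seconds) so the behaviour is easy to follow.
session = Session("u-123", last_activity=0.0)
print(session.is_expired(now=600.0))   # False: idle for 10 minutes
print(session.is_expired(now=1000.0))  # True: idle for more than 15 minutes
```

A monotonic clock is used so that wall‑clock adjustments cannot extend or shorten a session.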

Controls:

- Implement role‑based access control (RBAC) with least‑privilege enforcement. Determine whether identity‑based or location‑based controls are needed.

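- Choose encryption mechanisms: symmetric encryption such as AES‑256 for data at rest (asymmetric keys are typically reserved for key exchange and key wrapping) and TLS 1.2 or later for data in transit. The HIPAA Journal recommends aligning encryption with NIST SP 800‑111 and SP 800‑52.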

- Separate environments and enforce different access policies for development, testing and production.

- Design audit logging to capture who accessed PHI, when, from where and what was done. Unique user identifiers are required.
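A minimal sketch of role‑based access control with least privilege, assuming hypothetical role and permission names. The central design choice is deny‑by‑default: a role missing from the table, or a permission not explicitly granted, is refused. In this example the administrator role cannot read PHI, which keeps system administration separate from clinical access.

```python
from enum import Enum


class Permission(Enum):
    READ_PHI = "read_phi"
    WRITE_PHI = "write_phi"
    EXPORT_PHI = "export_phi"
    ADMIN = "admin"


# Hypothetical role definitions; each role gets only what its tasks require.
ROLE_PERMISSIONS: dict[str, frozenset[Permission]] = {
    "receptionist": frozenset(),
    "nurse": frozenset({Permission.READ_PHI}),
    "physician": frozenset({Permission.READ_PHI, Permission.WRITE_PHI}),
    "security_admin": frozenset({Permission.ADMIN}),
}


def is_allowed(role: str, permission: Permission) -> bool:
    """Deny by default: unknown roles and unlisted permissions are refused."""
    return permission in ROLE_PERMISSIONS.get(role, frozenset())


print(is_allowed("physician", Permission.WRITE_PHI))      # True
print(is_allowed("security_admin", Permission.READ_PHI))  # False
```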

Deliverables:

- Architecture diagrams showing components, data flows and security controls.

- Security design document outlining encryption choices, access control model and audit log schema.

- Data classification matrix linking data types to protection measures.

- Template access control policy specifying roles, responsibilities and least‑privilege rules.
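An audit log schema is easier to review when it is written down as a type. The sketch below assumes a hypothetical `AuditEvent` record carrying who acted, what they did, which record they touched, from where and when, and chains each entry to the previous one with SHA‑256 so that later edits become detectable. A hash chain only proves integrity relative to the entries you still hold: truncating the newest entries, or rewriting the whole chain with write access, needs an external anchor such as append‑only or write‑once storage.

```python
import hashlib
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone

GENESIS_HASH = "0" * 64


@dataclass(frozen=True)
class AuditEvent:
    user_id: str    # unique user identifier, never a shared account
    action: str     # e.g. "read", "update", "export"
    resource: str   # identifier of the PHI record touched
    source_ip: str  # where the request came from
    timestamp: str  # ISO 8601, UTC


def _digest(prev_hash: str, body: dict) -> str:
    payload = json.dumps({"prev": prev_hash, **body}, sort_keys=True).encode()
    return hashlib.sha256(payload).hexdigest()


def append_event(log: list, event: AuditEvent) -> dict:
    """Append an event whose hash also covers the previous entry's hash."""
    prev_hash = log[-1]["hash"] if log else GENESIS_HASH
    body = asdict(event)
    entry = {**body, "prev": prev_hash, "hash": _digest(prev_hash, body)}
    log.append(entry)
    return entry


def verify_chain(log: list) -> bool:
    """Recompute each hash; editing, reordering or deleting any entry but the newest breaks the chain."""
    prev_hash = GENESIS_HASH
    for entry in log:
        body = {k: v for k, v in entry.items() if k not in ("prev", "hash")}
        if entry["prev"] != prev_hash or entry["hash"] != _digest(prev_hash, body):
            return False
        prev_hash = entry["hash"]
    return True


log: list = []
now = datetime.now(timezone.utc).isoformat()
append_event(log, AuditEvent("u-123", "read", "patient/P-1001", "10.0.0.5", now))
append_event(log, AuditEvent("u-456", "update", "patient/P-1001", "10.0.0.7", now))
print(verify_chain(log))      # True
log[0]["action"] = "export"   # simulate tampering with the first entry
print(verify_chain(log))      # False
```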

Checklist questions:

- Are all ePHI transmissions encrypted using approved algorithms?

- Is least‑privilege enforced in access control design?

- Will the audit log capture read and write events on PHI?
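For data in transit, most of the work is refusing weak configurations. A minimal client‑side sketch using Python's standard `ssl` module, assuming the application makes outbound HTTPS calls (for example to an EHR API) and that TLS 1.2 is the agreed minimum version:

```python
import ssl


def make_client_context() -> ssl.SSLContext:
    """Client-side TLS context for connections that carry ePHI."""
    # create_default_context() turns on certificate verification and hostname checking.
    context = ssl.create_default_context(ssl.Purpose.SERVER_AUTH)
    # Refuse anything older than TLS 1.2; SP 800-52 Rev. 2 sets TLS 1.2 as the floor
    # and also expects TLS 1.3 support, which this context still allows.
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    return context
```

The context can then be passed to standard clients such as `urllib.request.urlopen(url, context=ctx)` or `http.client.HTTPSConnection(host, context=ctx)`. Server‑side settings and cipher choices need the same review.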

Phase 3 – Development & Coding

Main tasks:

- Follow secure coding practices: validate inputs, escape outputs, avoid SQL injection and cross‑site scripting, use parameterised queries.

- Use vetted cryptographic libraries instead of writing your own.

- Embed encryption and access controls into the code base; keep secrets (database credentials, API keys, encryption keys) in a secrets manager or vault, never in source code or committed configuration files.

- Conduct peer reviews focusing on PHI handling and compliance with secure coding guidelines.
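Parameterised queries are the simplest of these practices to show. A sketch using Python's built‑in `sqlite3` module with a hypothetical `patients` table; the same placeholder pattern applies to other database drivers, although the placeholder syntax varies by driver.

```python
import sqlite3


def find_patient(conn: sqlite3.Connection, mrn: str) -> list:
    # The "?" placeholder sends mrn as data, so input such as "x' OR '1'='1"
    # cannot change the structure of the SQL statement.
    cur = conn.execute("SELECT mrn, name FROM patients WHERE mrn = ?", (mrn,))
    return cur.fetchall()


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE patients (mrn TEXT PRIMARY KEY, name TEXT)")
conn.executemany(
    "INSERT INTO patients VALUES (?, ?)",
    [("MRN-1", "Jane Doe"), ("MRN-2", "John Roe")],
)

print(find_patient(conn, "MRN-1"))         # [('MRN-1', 'Jane Doe')]
print(find_patient(conn, "x' OR '1'='1"))  # [] : the injection attempt matches nothing
```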

Controls:

- Train developers on HIPAA and security concepts. Provide materials on risk assessments and encryption.

- Use static and dynamic analysis tools to detect vulnerabilities and run them as part of the build pipeline.

- Perform code reviews with a PHI lens: check that logging does not leak sensitive data and that encryption is correctly implemented.

- Manage third‑party libraries: evaluate how they handle PHI, check them for known vulnerabilities and keep them patched.
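One way to back up the "logging does not leak PHI" review is a redaction filter on the log handler. A minimal sketch using the standard `logging` module; the SSN and `MRN-` patterns are hypothetical identifier formats. Regular expressions only catch the formats you anticipate, so this complements code review of what gets logged rather than replacing it.

```python
import logging
import re

# Hypothetical identifier formats; replace them with the ones your system actually uses.
PHI_PATTERNS = [
    (re.compile(r"\b\d{3}-\d{2}-\d{4}\b"), "[REDACTED-SSN]"),
    (re.compile(r"\bMRN-\d+\b"), "[REDACTED-MRN]"),
]


class RedactPHIFilter(logging.Filter):
    """Rewrites each record's message so matching identifiers never reach the output."""

    def filter(self, record: logging.LogRecord) -> bool:
        message = record.getMessage()  # merge msg and args before redacting
        for pattern, replacement in PHI_PATTERNS:
            message = pattern.sub(replacement, message)
        record.msg, record.args = message, ()
        return True  # keep the record; it is now redacted


handler = logging.StreamHandler()
handler.addFilter(RedactPHIFilter())
logger = logging.getLogger("patient_portal")
logger.addHandler(handler)
logger.warning("Lookup failed for MRN-12345 (ssn %s)", "123-45-6789")
# stderr: Lookup failed for [REDACTED-MRN] (ssn [REDACTED-SSN])
```

Attaching the filter to the handler, rather than to a single logger, means records propagated from child loggers are redacted too.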

Deliverables:

- Secure coding checklist emphasising input validation, error handling, secure use of libraries, encryption and logging.

- Code review template with questions about PHI handling, encryption, access control and logging.

- Developer training log documenting attendance and understanding of security practices.

Checklist questions:

- Have secure coding guidelines been documented and disseminated to the team?

- Was every module containing PHI reviewed by at least one peer trained in security?

- Are all dependencies maintained and reviewed for vulnerabilities?

Phase 4 – Testing & Validation

Main tasks:

- Perform functional tests covering all requirements, including privacy workflows.

- Conduct security testing: penetration tests, vulnerability scanning, and verification of encryption and access controls. Ensure that penetration tests focus on PHI flows; the HIPAA Journal emphasises that encryption reduces the notifiable breach risk.

- Validate audit logging: confirm that log entries include user identifiers and appropriate context. Under the Security Rule, as NIST SP 800‑66r2 explains, system activity must be traceable to individual users.

- Carry out privacy testing such as de‑identification or anonymisation where data is used for analytics.

- Conduct user acceptance testing with realistic synthetic or de‑identified data rather than live PHI, and confirm that the application enforces minimum necessary access and session timeouts.

Controls:

- Perform vulnerability assessments and record findings using a risk management framework. Prioritise remediation based on criticality.

- Confirm that encryption keys are correctly managed and rotated.

- Ensure incident response readiness: simulate incidents, test notification processes and confirm that breach notifications can be sent within regulatory timelines.
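Incident simulations are more useful when the regulatory clock is computed rather than remembered. A minimal helper an incident runbook might use, assuming the discovery date is known. State breach laws or contracts may impose shorter deadlines, and the timing of the HHS report depends on whether 500 or more individuals are affected, which this sketch does not model.

```python
from datetime import date, timedelta

# Breach Notification Rule: notify affected individuals without unreasonable delay
# and no later than 60 calendar days after discovery. Sixty days is an outer limit,
# not a target.
MAX_NOTIFICATION_DAYS = 60
# Media notice is required when more than 500 residents of a state or jurisdiction are affected.
MEDIA_NOTICE_THRESHOLD = 500


def notification_deadline(discovered_on: date) -> date:
    """Latest permissible date for individual notices."""
    return discovered_on + timedelta(days=MAX_NOTIFICATION_DAYS)


def media_notice_required(affected_residents_in_state: int) -> bool:
    return affected_residents_in_state > MEDIA_NOTICE_THRESHOLD


print(notification_deadline(date(2025, 3, 1)))  # 2025-04-30
print(media_notice_required(750))               # True
```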

Deliverables:

- Test plan with functional, security and privacy test cases.

- Vulnerability scan report and remediation plan.

- Sign‑off checklist confirming that all controls operate as designed before deployment.

Checklist questions:

- Has a penetration test been performed on all PHI flows?

- Are encryption and decryption functioning, including failure paths?

- Do audit logs capture all accesses and modifications to PHI?

- Has every high‑risk vulnerability been addressed and validated?

Phase 5 – Deployment & Maintenance

Main tasks:

- Deploy the application to a hardened production environment. Use Infrastructure as Code (IaC) to ensure consistent configurations.

- Manage change requests through a formal process to prevent unauthorised modifications.

- Configure continuous monitoring and logging; integrate with security information and event management (SIEM) systems for real‑time detection.

- Rotate encryption keys and manage secrets using a secure vault.

- Apply patches promptly and schedule regular updates.
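Secrets should reach the application at runtime rather than living in the repository. A minimal sketch, assuming the vault integration (for example an agent or sidecar) injects secrets as environment variables; whether environment variables or mounted files are the better delivery channel depends on the vault and platform you use.

```python
import os


class MissingSecretError(RuntimeError):
    """Raised when a required secret has not been injected."""


def get_secret(name: str) -> str:
    """Read a secret injected at runtime; never fall back to a hard-coded default."""
    value = os.environ.get(name)
    if not value:
        # Fail closed: refusing to start is safer than starting with a placeholder credential.
        raise MissingSecretError(f"required secret {name!r} is not set")
    return value
```

At startup the application would call something like `get_secret("PHI_DB_PASSWORD")`, where `PHI_DB_PASSWORD` is a hypothetical variable name. Because the value is read on each call, a rotated secret can be picked up without a code change.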

Controls:

- Enforce access controls in production: only authorised personnel should have administrative access. Extend the same expectations to hosting and operations vendors; NIST SP 800‑66r2 stresses that contracts with business associates must require implementation of administrative, physical and technical safeguards to protect ePHI.

- Enforce key lifecycle management: generate, rotate and revoke keys according to policy.

- Maintain audit logging in production and review logs regularly for anomalies.

- Activate incident response plans when suspicious activity is detected.

Deliverables:

- Deployment checklist verifying environment configuration, security settings and rollback plan.

- Production access policy describing roles and privileges.

- Incident response plan specifying triggers, roles, communication steps and remediation procedures.

- Maintenance schedule detailing patch cycles and periodic reviews.

Checklist questions:

- Is audit logging enabled in production and integrated with the monitoring platform?

- Are encryption keys rotated on a schedule and stored securely?

- Does the incident response plan assign clear responsibilities and include a communication template?
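A scheduled job can turn "rotated on a schedule" into something checkable. The sketch assumes a hypothetical key inventory exported from your key management service and a 365‑day rotation period; the real period comes from your key management policy, and the rotation itself would be performed by the KMS or vault, not by this code.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone

# Hypothetical policy: rotate data-encryption keys at least every 365 days.
MAX_KEY_AGE = timedelta(days=365)


@dataclass
class KeyRecord:
    key_id: str
    created_at: datetime
    revoked: bool = False


def keys_due_for_rotation(keys: list, now: datetime) -> list:
    """Return IDs of active keys older than the policy allows."""
    return [k.key_id for k in keys if not k.revoked and now - k.created_at > MAX_KEY_AGE]


now = datetime(2025, 6, 1, tzinfo=timezone.utc)
keys = [
    KeyRecord("k-old", datetime(2024, 1, 1, tzinfo=timezone.utc)),
    KeyRecord("k-new", datetime(2025, 5, 1, tzinfo=timezone.utc)),
    KeyRecord("k-retired", datetime(2023, 1, 1, tzinfo=timezone.utc), revoked=True),
]
print(keys_due_for_rotation(keys, now))  # ['k-old']
```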

Phase 6 – Monitoring, Review & Continuous Improvement

Security isn’t a one‑time effort. Continual monitoring and improvement sustain compliance and reduce risk.

Main tasks:

- Monitor logs and security metrics continuously. Use dashboards to track authentication failures, privilege escalations, data access patterns and system health.

- Perform regular vulnerability scanning and update the risk register with newly discovered issues.

- Review access permissions periodically and revoke unnecessary privileges.

- Conduct audit log reviews to ensure logs are complete, tamper‑evident and retained according to policy.

- Update policies and procedures as regulations and threats evolve. For instance, keep track of HHS rule changes such as the proposed mandatory encryption of ePHI in transit and at rest.
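Periodic access reviews go faster when the candidates for revocation are pre‑computed. A minimal sketch, assuming a hypothetical account export with last‑login dates and a roster of active staff IDs from HR; the 90‑day inactivity limit is an illustrative value, and flagged accounts are for a reviewer to confirm or revoke, not to delete automatically.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Optional

# Hypothetical review rule: flag accounts idle for more than 90 days, never used,
# or belonging to someone who is no longer on the active staff roster.
INACTIVITY_LIMIT = timedelta(days=90)


@dataclass
class Account:
    user_id: str
    role: str
    last_login: Optional[date]


def accounts_to_review(accounts: list, active_staff: set, today: date) -> list:
    """Return user IDs whose access should be confirmed or revoked."""
    flagged = []
    for acct in accounts:
        departed = acct.user_id not in active_staff
        idle = acct.last_login is None or today - acct.last_login > INACTIVITY_LIMIT
        if departed or idle:
            flagged.append(acct.user_id)
    return flagged


accounts = [
    Account("u-1", "nurse", date(2025, 8, 20)),
    Account("u-2", "physician", date(2025, 3, 1)),
    Account("u-3", "billing_clerk", date(2025, 8, 30)),
]
print(accounts_to_review(accounts, active_staff={"u-1", "u-2"}, today=date(2025, 9, 1)))
# ['u-2', 'u-3']
```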

Controls:

- Use automated tools for scanning and log analysis; manual review should supplement automation.

- Schedule quarterly or monthly access reviews and annual policy reviews.

- Document findings and corrective actions to demonstrate continuous improvement.

Deliverables:

- Security metrics dashboard for PHI monitoring.

- Vulnerability remediation backlog with priorities, owners and due dates.

- Audit log review summaries with observations and follow‑up actions.

- Policy update log tracking changes, rationale and approvals.

Checklist questions:

- Are access logs reviewed at least monthly and anomalies investigated?

- Is the vulnerability backlog managed and closed in a timely manner?

- Have security policies been reviewed within the last year and updated to reflect new guidance?

Practical Examples & Case Scenarios

To illustrate the framework, here are three scenarios drawn from real projects handled by Konfirmity’s managed service.

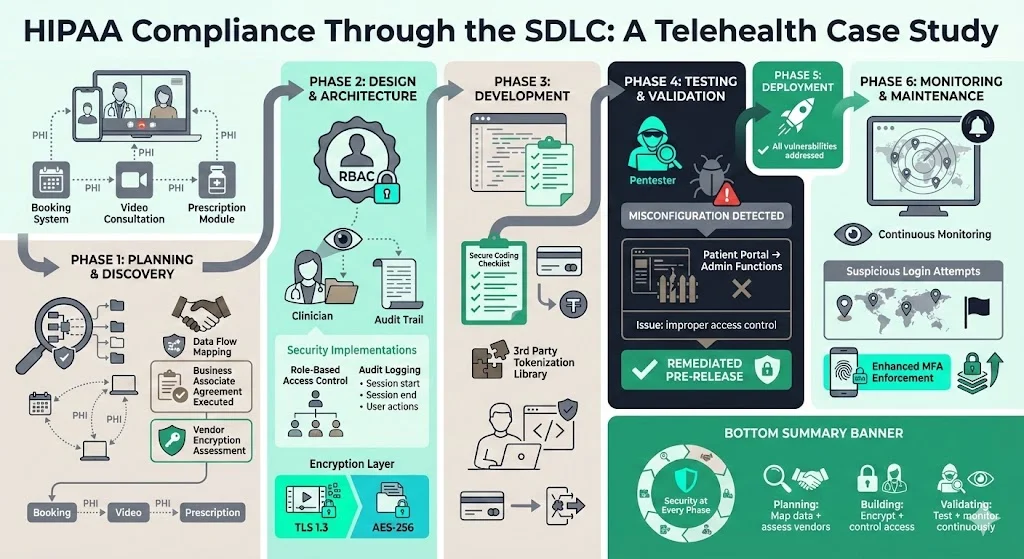

Scenario 1 – Telehealth Platform

A telehealth provider allows patients to book appointments, consult clinicians over video and review prescriptions. PHI flows across the booking system, video stream and prescription module. During Phase 1 the team mapped data flows and realised that the video service provider was a business associate. They executed a business associate agreement and assessed the vendor’s encryption capabilities. Phase 2 introduced RBAC limiting clinicians to viewing only their own patients, with audit logging capturing session start, end and actions. Encryption was enforced both for video streams (TLS 1.3) and for stored visit notes using AES‑256. During Phase 3 developers used a secure coding checklist and integrated a third‑party library that tokenises payment data. Pen tests in Phase 4 discovered a misconfigured access control that allowed administrative functions from the patient portal. The issue was remediated before release. Post‑deployment, continuous monitoring in Phase 6 flagged suspicious login attempts from unusual locations, leading to improved MFA enforcement.

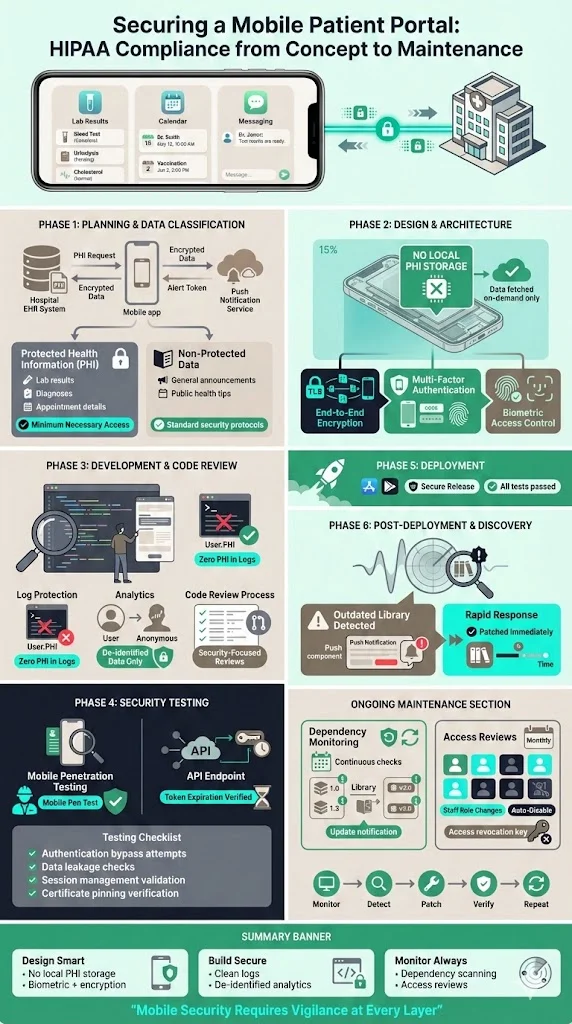

Scenario 2 – Mobile Patient Portal

A hospital built a mobile application to let patients view lab results, schedule visits and message care teams. Early planning identified PHI flows through the app, hospital EHR and push notification service. The team classified data into PHI (lab results, diagnoses) and non‑PHI (general announcements) and defined minimum necessary access. Design documents specified that lab results would never be stored locally on the device; they would be fetched on demand over an encrypted channel. The app used device authentication and biometric verification. In development, code reviews focused on ensuring no PHI persisted in logs and that de‑identification was used for analytics. Security testing included mobile penetration tests and verification that API tokens expired appropriately. After deployment, a vulnerability scan revealed an outdated library in the push notification component; the team patched it and added dependency monitoring to the maintenance plan. Monthly access reviews ensure staff accounts are disabled when roles change.

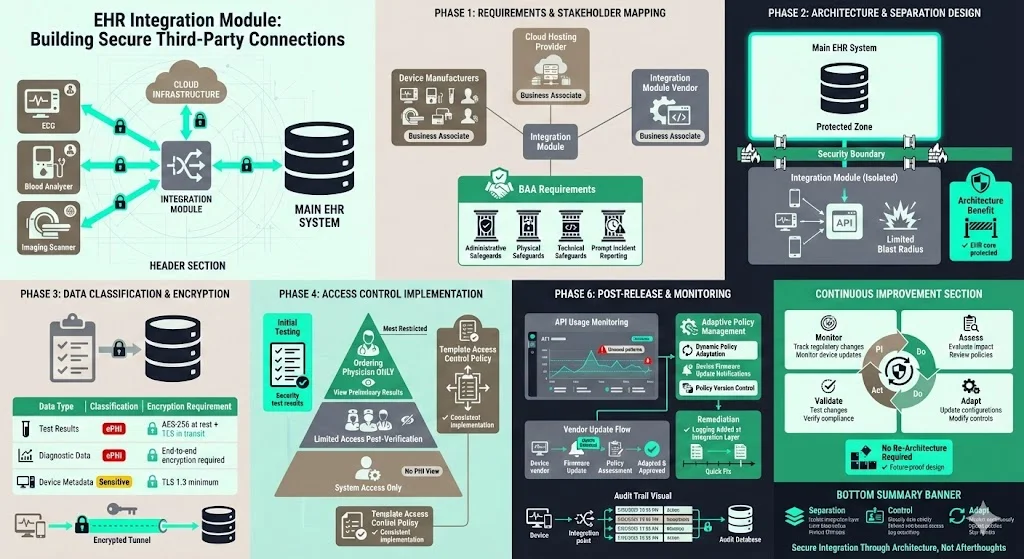

Scenario 3 – EHR Integration Module

A vendor developed a module that integrates third‑party diagnostic devices with an electronic health record. The module transmits test results (ePHI) over secure channels and stores them in the EHR. During requirements gathering, the team identified multiple business associates, including the device manufacturers and cloud hosting provider. Contracts required them to implement administrative, physical and technical safeguards and to report incidents promptly. The architecture separated the integration layer from the main EHR, limiting blast radius. A data classification matrix linked test results to strict encryption requirements. Developers used a template access control policy to implement role‑based restrictions; only the ordering physician could see preliminary results. Testing uncovered that audit logs did not record automated device uploads; developers added logging at the integration point. After release, the team monitored API usage and adapted policies as device vendors updated their firmware. A continuous improvement mindset allowed them to handle new regulatory changes without re‑architecting the module.

Templates & Artefacts for Busy Teams

To help teams move faster, here are templates and artefacts commonly used during HIPAA Secure SDLC projects. You can build your own versions using these as starting points:

- Requirements specification – document functional goals, stakeholders, PHI data flows, privacy requirements, user roles and regulatory references.

- Data classification matrix – categorise data types (PHI, non‑PHI, sensitive, internal), identify storage locations, assign encryption and access requirements, and map retention periods.

- Secure coding checklist – list security principles: input validation, output encoding, error handling, authentication and session management, encryption usage, logging and exception handling. Include PHI‑specific checks such as avoiding PHI in error messages.

- Access control policy – define roles, responsibilities and least‑privilege rules. Specify authentication mechanisms (unique IDs, MFA), session timeouts, password policies and account provisioning/deprovisioning.

- Test plans for PHI flows – include functional tests, negative tests, penetration tests, privacy tests (de‑identification), encryption verification and audit log validation.

- Incident response plan – outline roles and responsibilities (incident commander, technical lead, communications), triggers, triage process, notification procedure (patients, HHS, media), evidence collection and post‑incident review.

- Vulnerability assessment and remediation plan – establish a process for scanning, prioritising vulnerabilities (e.g., by CVSS score), setting service‑level agreements for remediation and tracking status.

- Audit log review procedure – define review frequency (monthly, quarterly), scope (critical systems, privileged access), person responsible and escalation process for anomalies.
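Remediation SLAs are easiest to enforce when each finding gets a due date automatically. The sketch maps a CVSS v3.x base score to its qualitative severity rating (None, Low, Medium, High, Critical) and then to a due date; the SLA day counts in `REMEDIATION_SLA_DAYS` are hypothetical and belong in your own vulnerability management policy.

```python
from datetime import date, timedelta
from typing import Optional


def cvss_severity(score: float) -> str:
    """Map a CVSS v3.x base score to its qualitative severity rating."""
    if not 0.0 <= score <= 10.0:
        raise ValueError("CVSS base scores range from 0.0 to 10.0")
    if score == 0.0:
        return "None"
    if score < 4.0:
        return "Low"
    if score < 7.0:
        return "Medium"
    if score < 9.0:
        return "High"
    return "Critical"


# Hypothetical remediation SLAs in days.
REMEDIATION_SLA_DAYS = {"Critical": 7, "High": 30, "Medium": 90, "Low": 180}


def remediation_due(score: float, found_on: date) -> Optional[date]:
    """Due date for a finding, or None when its severity carries no SLA."""
    days = REMEDIATION_SLA_DAYS.get(cvss_severity(score))
    return None if days is None else found_on + timedelta(days=days)


print(cvss_severity(9.8), remediation_due(9.8, date(2025, 1, 10)))  # Critical 2025-01-17
```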

Having these artefacts ready shortens onboarding for new projects and ensures that compliance and security requirements are considered from the start.

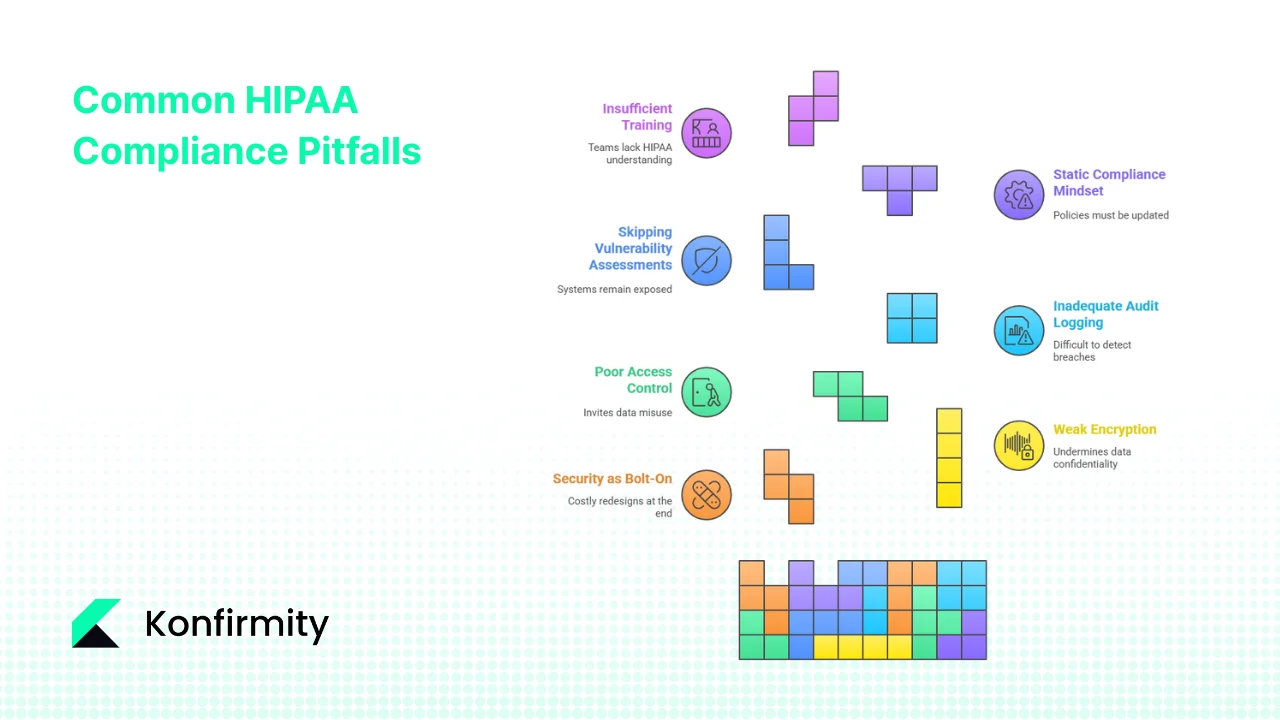

Common Pitfalls and How to Avoid Them

Many teams make similar mistakes when trying to comply with HIPAA. Here’s what we often see during audits and how to avoid these traps:

- Treating security as a bolt‑on – pushing encryption or access controls until the end of a project often results in costly redesigns. Build them into requirements and design phases.

- Weak encryption or key management – using outdated algorithms or storing keys in source code undermines confidentiality. Follow NIST guidance for cryptographic standards and manage keys in a secure vault.

- Poor access control – failing to enforce least‑privilege access or to revoke accounts when personnel leave invites misuse. Implement RBAC and perform regular access reviews.

- Inadequate audit logging – incomplete or unmonitored logs make it difficult to detect breaches or meet reporting obligations. Ensure logs capture who accessed PHI and review them regularly.

- Skipping vulnerability assessments – ignoring regular scanning or delaying remediation leaves systems exposed. Schedule periodic scans and track remediation progress.

- Static compliance mindset – regulations and attack patterns evolve; policies must be updated. Stay current with HHS guidance and adapt controls accordingly.

- Insufficient training – developers and operations teams must understand HIPAA requirements. Provide continuous education and integrate it into onboarding.

Measuring Success & Key Metrics

How do you know if your HIPAA Secure SDLC is working? Use measurable indicators tied to risk reduction and compliance. Examples include:

- PHI‑related vulnerabilities found and remediated – track counts per release and look for a downward trend in new findings.

- Time to remediate – measure the interval between discovering a vulnerability and deploying a fix. Shorter times indicate a responsive security program.

- Number of access violations or audit log exceptions – watch for unauthorised access or unusual patterns. A reduction suggests effective controls.

- Coverage of secure coding review – calculate the percentage of modules reviewed using the secure coding checklist.

- Mean time to detect and resolve PHI incidents – shorter detection and resolution times indicate strong monitoring and incident response.

- Frequency of policy reviews and updates – ensure policies are reviewed annually or when regulations change.

- Training completion rate – track how many team members have completed HIPAA and security training. High completion rates reflect readiness.
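Several of these metrics reduce to averaging intervals or computing a ratio. A minimal sketch, assuming you can export (start, end) timestamps from your ticketing or incident tooling; the same `mean_hours` helper works for time to remediate (discovery to fix deployed), mean time to detect (occurrence to detection) and mean time to resolve. The sample data is hypothetical.

```python
from datetime import datetime
from statistics import mean


def mean_hours(intervals: list) -> float:
    """Average length in hours of (start, end) datetime pairs."""
    return mean((end - start).total_seconds() / 3600 for start, end in intervals)


def review_coverage(reviewed_modules: int, total_modules: int) -> float:
    """Percentage of modules reviewed against the secure coding checklist."""
    return 100.0 * reviewed_modules / total_modules if total_modules else 0.0


# Hypothetical remediation history: (vulnerability discovered, fix deployed)
fixes = [
    (datetime(2025, 5, 1, 9, 0), datetime(2025, 5, 2, 9, 0)),    # 24 hours
    (datetime(2025, 5, 3, 12, 0), datetime(2025, 5, 6, 12, 0)),  # 72 hours
]
print(mean_hours(fixes))        # 48.0
print(review_coverage(45, 50))  # 90.0
```

Tracking the median alongside the mean is worth considering, since a single long‑running fix can dominate an average.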

These metrics feed into management reports and support continuous improvement. Organisations aiming for external attestations such as SOC 2 Type II or ISO 27001 can reuse evidence collected here across frameworks, reducing duplicated effort.

Conclusion

Integrating HIPAA requirements into the software lifecycle is not just a regulatory obligation—it’s critical for patient trust and business success. A HIPAA Secure SDLC embeds privacy, encryption, access control, risk management and incident response into every phase of development. This proactive approach is cheaper than retrofitting and delivers security that stands up under buyer due diligence, auditor scrutiny and real‑world incidents.

Teams seeking to implement this framework should assemble a cross‑functional group including compliance, engineering and operations; pick a pilot project; adopt the templates provided here; perform an initial risk assessment; and begin collecting evidence from day one. Konfirmity’s human‑led managed service has supported over 6,000 audits and can implement these controls in your stack, reducing effort from hundreds of hours to tens. Rather than manufacturing compliance artifacts, we build durable security programs so that compliance becomes a natural by‑product. Security that looks good on paper but fails during an incident is a liability. Build your program once, operate it daily, and let compliance follow.

FAQs

1) What does it mean to be HIPAA secure?

Being HIPAA secure means putting in place administrative, technical and physical safeguards to prevent unauthorised access, alteration or destruction of PHI. This includes risk assessments, policies, training, encryption, access controls and incident response. For technical safeguards, the HIPAA Security Rule requires unique user identifiers, access controls, audit controls and mechanisms to encrypt ePHI when appropriate.

2) What is the life cycle of a HIPAA system?

Systems handling PHI follow a lifecycle similar to other software but with privacy integrated: planning and requirements gathering, design, development, testing and validation, deployment and maintenance, and ongoing monitoring and improvement. Each phase must consider regulatory standards and produce evidence of compliance, such as access policies, encryption measures, audit logs and incident response plans.

3) What is a secure SDLC?

A secure software development life cycle integrates security into each phase of the development process rather than treating it as an afterthought. It includes risk assessments, secure design, secure coding, rigorous testing, controlled deployment, continuous monitoring and regular updates. When applied to healthcare, a secure SDLC ensures that ePHI is protected by encryption and access controls, that audit logs capture all activity, and that incidents are detected and managed swiftly.

4) What are the five main HIPAA rules?

The five main HIPAA rules are the Privacy Rule, which protects individuals’ PHI and specifies rights; the Security Rule, which mandates administrative, physical and technical safeguards to protect ePHI; the Breach Notification Rule, which requires notification to affected individuals and authorities when PHI is compromised; the Enforcement Rule, which defines penalties and procedures for violations; and the Omnibus Rule, which expanded liability to business associates and strengthened privacy provisions.
