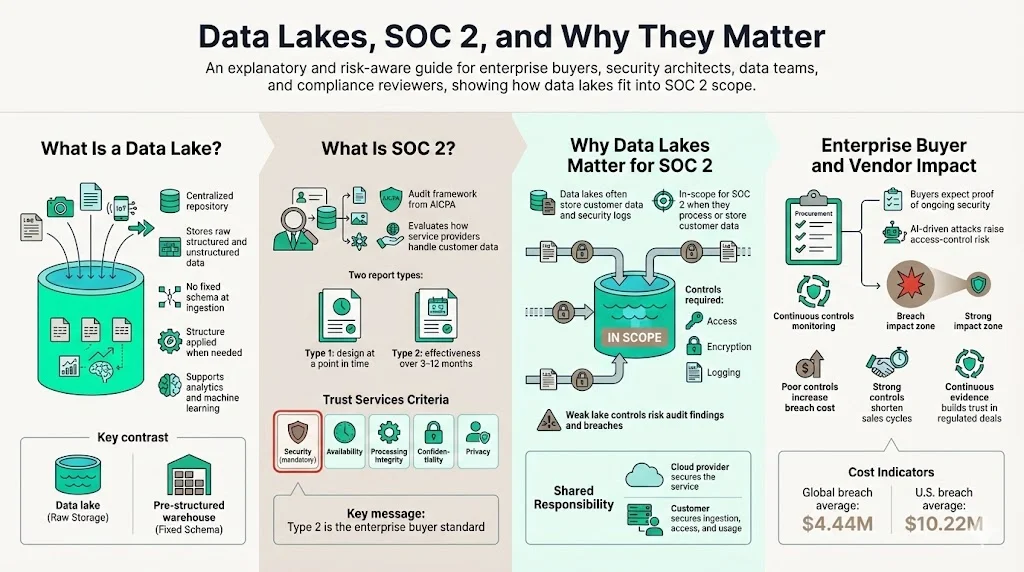

Most enterprise buyers now insist on security assurances before they sign contracts. Without operational security and continuous evidence, deals stall, even when teams think they’re ready on paper. As someone who has implemented security programs across healthcare, finance and SaaS, I’ve seen how the intersection of data lakes and SOC 2 compliance can make or break an enterprise sale. A data lake is a large, flexible repository that ingests structured, semi‑structured and unstructured data.

SOC 2, meanwhile, is a framework from the American Institute of Certified Public Accountants (AICPA) that evaluates whether a service organization manages data securely. When these two worlds meet, enterprises must secure their data lakes without losing control or falling out of compliance. This article unpacks why SOC 2 compliance matters for data lakes, outlines the risks and controls, and provides a pragmatic roadmap for getting audit‑ready.

What is a data lake?

Modern organizations generate volumes of data from applications, IoT devices, customer interactions and operational systems. A data lake is a centralized repository that stores this information in its raw form—structured, semi‑structured and unstructured—without imposing a fixed schema. Unlike data warehouses that require pre‑defined structures, a lake allows teams to ingest logs, images, text and streaming data and apply structure only when needed. This flexibility supports advanced analytics, machine learning and massive scalability.

What is SOC 2?

SOC 2 is an audit framework created by the AICPA to assess how service providers handle customer data. It is based on five Trust Services Criteria: Security, Availability, Processing Integrity, Confidentiality, and Privacy. The Security criterion, also known as the Common Criteria, is mandatory for every SOC 2 report and covers how an organization protects data from unauthorized access. Availability looks at whether systems are reliable and resilient. Processing Integrity asks whether system processing is complete, valid, accurate, timely and authorized. Confidentiality focuses on limiting access to sensitive information. Privacy examines how organizations collect, use and retain personal data.

There are two types of SOC 2 reports. A Type 1 audit evaluates the design of controls at a specific moment, verifying that policies and systems exist. A Type 2 audit tests the operating effectiveness of those controls over an observation period of three to twelve months. Enterprise buyers almost always ask for a Type 2 report because it demonstrates that controls actually work in practice, not just on paper.

Why do data lakes matter for SOC 2?

A data lake often becomes the single source of truth for an organization, storing logs, application data and customer information. Under SOC 2, any environment that processes or stores customer data or logs relevant to security controls is in scope. That means the lake, along with the pipelines that feed it, must meet the Trust Services Criteria. If teams neglect access control, encryption or logging in the lake, they risk a report qualification and, more importantly, a breach. Cloud providers like AWS, Azure and Google publish their own SOC 2 attestation reports for services such as Amazon S3, Azure Data Lake and Google Cloud Storage. While these attestations help, the enterprise is still responsible for how data is ingested, stored and accessed within the lake.

Why this matters for enterprise buyers and vendors

Security expectations are rising. IBM’s 2025 Cost of a Data Breach Report shows that the global average cost of a breach dropped to USD 4.44 million, but U.S. breaches averaged USD 10.22 million. AI‑driven attacks and shadow AI tools contributed to 16 % of breaches, with organizations lacking access controls paying the highest price. Buyers know these numbers and increasingly demand proof of continuous security. Vendors that cannot show ongoing control effectiveness lose deals or face long procurement cycles. On the other side, vendors who invest in strong controls and continuous evidence can shorten sales cycles by months and build trust with regulated industries.

Why SOC 2 Matters for Data Lakes

SOC 2 and enterprise security

SOC 2 provides assurance that a service organization protects and monitors customer data. It goes beyond checklists; auditors assess whether a company has designed controls (Type 1) and whether those controls operate effectively over time (Type 2). For data‑heavy businesses, a SOC 2 report is often required before closing enterprise deals or partnering with healthcare providers under business associate agreements.

Type 2 audits are preferred because they reflect reality. Controls must operate consistently throughout the observation period, typically 6–12 months, and auditors look for evidence across at least 64 control points. If a vendor only has a Type 1 report, customers will question whether controls hold up during real incidents. In our experience at Konfirmity, roughly 90 % of enterprise buyers ask for a Type 2 attestation.

Does SOC 2 apply to data lakes?

SOC 2’s Trust Services Criteria apply to any system that stores or processes customer data. This includes data lakes, security data lakes used for log analytics, and the pipelines feeding them. The CloudQuery SOC 2 guide emphasizes defining the scope by identifying all systems, processes and data in scope, then implementing controls such as access control and encryption. If your lake ingests customer logs, analytics data or health records, it is in scope for SOC 2. Failing to include it in scope can lead to non‑compliance and hinder the audit.

Enterprise cloud services—like Amazon S3 or Azure Data Lake—typically carry their own SOC 2 Type 2 attestation, which demonstrates that the provider’s infrastructure meets the Trust Services Criteria. However, auditors still examine how you configure and monitor those services. The data owner is responsible for defining access policies, encrypting sensitive information, logging and responding to incidents. Leveraging the provider’s attestation reduces risk but does not absolve you of compliance obligations.

Core Security Risks in Data Lakes

Large, unstructured repositories introduce unique security risks. Tencent Cloud outlines several challenges, including unrestricted access, encryption gaps, metadata vulnerabilities, insider misuse, lack of data classification and compliance risks. Without proper governance, a lake quickly turns into a “data swamp”—an unmanaged collection of data that lacks structure, access controls and auditing. DataSunrise warns that swamps create data quality issues, hamper retrieval and pose serious compliance risks because access controls and auditing are missing.

Key risk categories to address in a SOC 2 program for a data lake include:

- Unauthorized access: Without fine‑grained RBAC or identity management, analysts and engineers can see data they shouldn’t. Tencent Cloud notes that many platforms grant broad permissions by default.

- Data leakage: Encryption gaps leave data at rest or in transit readable to attackers. Poor key management compounds the problem.

- Insufficient logging: Without central logging and retention, incidents go undetected and auditors lack evidence.

- Weak encryption: Storing sensitive data unencrypted or using weak algorithms undermines the confidentiality criterion.

- Policy gaps: Lack of clear retention, classification and acceptable use policies leads to inconsistent handling of sensitive data.

- Metadata exposure and insider threats: Attackers can infer sensitive details from metadata, and insiders can misuse legitimate access.

- Compliance risks: Uncontrolled swamps often violate GDPR, HIPAA and SOC 2 requirements.

These risks tie directly to the Trust Services Criteria. Unauthorized access and insider misuse threaten Security and Confidentiality. Encryption gaps and data leakage undermine Confidentiality and Privacy. Insufficient logging undercuts the monitoring requirements in the Security common criteria and leaves you unable to show that systems operate correctly, which Processing Integrity depends on. Policy gaps erode Availability when recovery plans are unclear. Addressing these risks is essential for getting a data lake SOC 2‑ready.

Building Blocks of a SOC 2‑Ready Data Lake

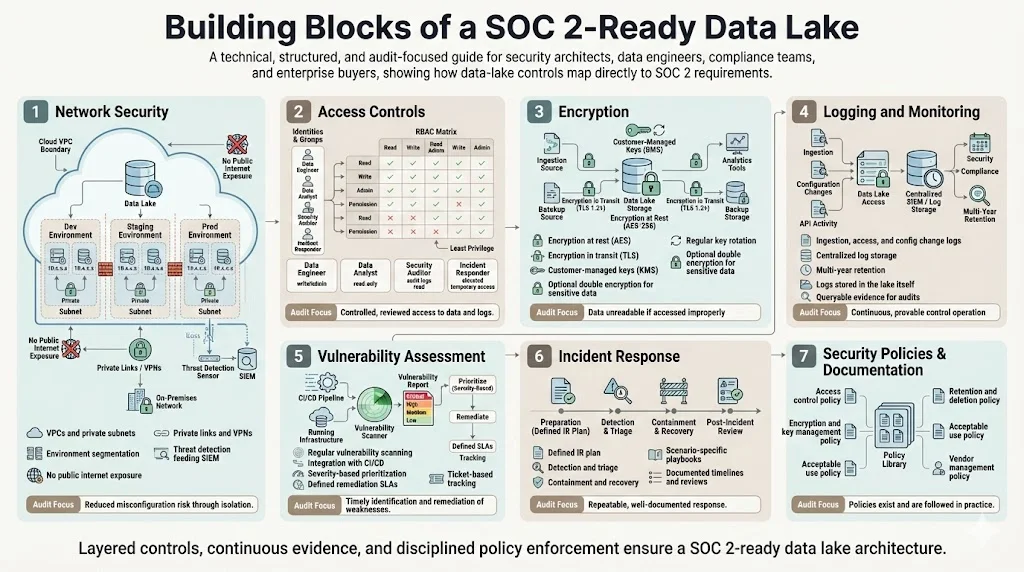

Security controls for data lakes map directly to SOC 2 criteria. Below are seven categories of controls that Konfirmity implements when building SOC 2 programs for data lakes.

1) Network security

Data lakes live within cloud networks. Use virtual private clouds (VPCs), subnets and private connectivity to isolate the lake from the public internet. Implement network segmentation to separate environments (development, staging and production). The proposed HIPAA Security Rule updates would require network segmentation and anti‑malware protection, and the same principles apply here. To detect intrusions, deploy threat detection tools that monitor unusual traffic patterns and integrate with SIEM systems. Private link services and VPNs limit data transfer paths, reducing exposure. In Konfirmity’s experience, using VPC endpoints and private connectivity eliminates more than 80 % of misconfiguration findings during SOC 2 audits.
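On AWS, one common way to keep lake storage off the public internet is an S3 bucket policy that denies any request not arriving through a specific VPC endpoint. The sketch below builds such a policy as a Python dict; the bucket name and endpoint ID are hypothetical placeholders, and the single-statement design is an illustration rather than a complete production policy.

```python
import json


def vpce_only_bucket_policy(bucket: str, vpce_id: str) -> dict:
    """Build an S3 bucket policy that denies requests not arriving through one VPC endpoint."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "DenyAccessOutsideVpce",
                "Effect": "Deny",
                "Principal": "*",
                "Action": "s3:*",
                "Resource": [
                    f"arn:aws:s3:::{bucket}",
                    f"arn:aws:s3:::{bucket}/*",
                ],
                # aws:SourceVpce is absent on requests that do not use a VPC endpoint,
                # and StringNotEquals evaluates to true when the key is missing,
                # so internet-originated requests are denied as well.
                "Condition": {"StringNotEquals": {"aws:SourceVpce": vpce_id}},
            }
        ],
    }


# Hypothetical bucket name and endpoint ID.
policy = vpce_only_bucket_policy("example-lake-raw", "vpce-0123456789abcdef0")
print(json.dumps(policy, indent=2))
```

Because the statement is an explicit Deny, it also blocks administrators working outside the VPC, including console access to the bucket, so test it on a non-production bucket before rolling it out through your infrastructure-as-code tooling.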

2) Access controls

Adopt a role‑based access control (RBAC) model with least privilege. Identity and access management tools allow you to assign roles to users and services and enforce multi‑factor authentication. The Microsoft guidance for securing Synapse connectors recommends Azure Active Directory (now Microsoft Entra ID), managed identities and key rotation. Use similar practices across cloud platforms: define roles for data engineers, analysts, and auditors; grant them only the permissions they need; and require MFA. Regularly review role assignments and use automated tools to detect privilege creep. Below is an example RBAC matrix:

This matrix is illustrative; your organization should tailor roles to specific responsibilities and enforce least privilege. Continuous reviews should be a scheduled control requirement.
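Scheduled access reviews are easier to evidence when the comparison itself is scripted. A minimal sketch, assuming hypothetical role and permission names (`data_engineer`, `read_raw` and so on) rather than any real IAM schema, compares each user's actual grants with their role definition and flags privilege creep:

```python
# Hypothetical role definitions: role -> permitted actions on the lake.
ROLE_PERMISSIONS = {
    "data_engineer": {"read_raw", "write_raw", "read_curated", "write_curated"},
    "analyst": {"read_curated"},
    "auditor": {"read_audit_logs"},
}


def excess_permissions(role: str, granted: set[str]) -> set[str]:
    """Return permissions granted beyond the role definition (unknown roles get nothing)."""
    return granted - ROLE_PERMISSIONS.get(role, set())


def review_assignments(assignments: dict[str, tuple[str, set[str]]]) -> dict[str, set[str]]:
    """Flag users whose actual grants exceed their assigned role."""
    findings = {}
    for user, (role, granted) in assignments.items():
        extra = excess_permissions(role, granted)
        if extra:
            findings[user] = extra
    return findings


# Hypothetical snapshot exported from the identity provider.
assignments = {
    "alice": ("data_engineer", {"read_raw", "write_raw"}),
    "bob": ("analyst", {"read_curated", "read_raw"}),  # read_raw is privilege creep
}
print(review_assignments(assignments))  # {'bob': {'read_raw'}}
```

Saving each run's output with a timestamp gives auditors a record that reviews happened on schedule, not just a policy saying they should.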

3) Encryption

Encrypt data at rest and in transit. NIST‑aligned guidance recommends AES for at‑rest data and TLS for data in transit. The proposed HIPAA Security Rule would go further, requiring encryption of ePHI at rest and in transit and mandating multi‑factor authentication. Use customer‑managed keys stored in cloud key management services (KMS) when possible, and rotate keys regularly. Evaluate whether your provider’s default encryption meets your regulatory obligations; for highly sensitive workloads, double encryption (application‑level plus storage‑level) might be warranted. Document your key management policies and integrate key usage logs into your audit evidence.
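Key rotation is a control auditors can test directly, so it helps to check it continuously. The sketch below assumes a key inventory exported from your KMS into plain dicts with hypothetical field names (`key_id`, `customer_managed`, `last_rotated`) and an assumed annual rotation policy; it flags keys that are not customer-managed or are overdue for rotation.

```python
from datetime import date, timedelta

MAX_KEY_AGE = timedelta(days=365)  # assumed policy: rotate at least annually


def keys_out_of_policy(keys: list[dict], today: date) -> list[str]:
    """Return IDs of keys that are provider-managed or older than MAX_KEY_AGE since rotation."""
    flagged = []
    for key in keys:
        too_old = today - key["last_rotated"] > MAX_KEY_AGE
        if not key["customer_managed"] or too_old:
            flagged.append(key["key_id"])
    return flagged


# Hypothetical inventory of keys protecting lake storage.
inventory = [
    {"key_id": "lake-raw", "customer_managed": True, "last_rotated": date(2025, 1, 10)},
    {"key_id": "lake-curated", "customer_managed": True, "last_rotated": date(2023, 6, 1)},
    {"key_id": "legacy-export", "customer_managed": False, "last_rotated": date(2025, 3, 1)},
]
print(keys_out_of_policy(inventory, today=date(2025, 6, 30)))
# ['lake-curated', 'legacy-export']
```

Cloud KMS services can usually rotate customer-managed keys automatically; a check like this verifies that the setting is actually in effect and produces evidence that it was.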

4) Logging and monitoring

SOC 2 Type 2 audits hinge on evidence. Enable detailed event logs at every layer: data ingestion, storage, access, and configuration changes. Centralize logs in a security lake or SIEM. Microsoft’s recommendations include using Azure Monitor and Synapse Workspace logs to track access and detect anomalies. Similar capabilities exist on AWS (CloudTrail, CloudWatch) and Google Cloud (Cloud Audit Logs). Define retention policies that align with regulatory requirements—HIPAA and some state privacy laws require multiple years of retention. Use your data lake itself to store logs; this not only provides cost‑effective storage but also allows you to query logs to produce SOC 2 evidence. For example, Konfirmity sets up automated scripts to export logs to the lake and tags them by control category for easy retrieval during audits.
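Tagging logs by control category can be as simple as a lookup from event type to control area. A minimal sketch, assuming CloudTrail's JSON log format (events in a top-level `Records` array, each with an `eventName`) and a hypothetical mapping of event names to SOC 2 common criteria areas that you would tailor to your own control matrix:

```python
from collections import defaultdict

# Hypothetical mapping from CloudTrail eventName to a SOC 2 control area.
EVENT_TO_CONTROL = {
    "ConsoleLogin": "CC6-logical-access",
    "AttachRolePolicy": "CC6-logical-access",
    "CreateAccessKey": "CC6-logical-access",
    "PutBucketPolicy": "CC8-change-management",
    "PutBucketEncryption": "CC8-change-management",
    "StopLogging": "CC7-system-operations",
    "DeleteTrail": "CC7-system-operations",
}


def partition_by_control(records: list[dict]) -> dict[str, list[dict]]:
    """Group CloudTrail records into evidence partitions keyed by control category."""
    partitions = defaultdict(list)
    for record in records:
        category = EVENT_TO_CONTROL.get(record.get("eventName"), "unclassified")
        partitions[category].append(record)
    return dict(partitions)


# A trimmed CloudTrail log file; real records carry many more fields.
log_file = {"Records": [
    {"eventName": "ConsoleLogin", "eventTime": "2025-05-01T09:00:00Z"},
    {"eventName": "PutBucketPolicy", "eventTime": "2025-05-01T10:15:00Z"},
    {"eventName": "GetObject", "eventTime": "2025-05-01T10:16:00Z"},
]}
parts = partition_by_control(log_file["Records"])
print({k: len(v) for k, v in parts.items()})
# {'CC6-logical-access': 1, 'CC8-change-management': 1, 'unclassified': 1}
```

Writing each partition under its own storage prefix (for example `control=CC6-logical-access/`) lets auditors and query engines pull evidence for one control without scanning everything.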

5) Vulnerability assessment

Regular scanning and remediation are essential. The HHS proposed rule would mandate vulnerability scanning at least every six months and penetration testing at least annually. In practice, security teams should run automated scans weekly or monthly, depending on risk, and track findings via a ticketing system. Tools like Nessus, Qualys, or cloud‑native scanners can detect misconfigurations, unpatched software and exposed ports. Integrate these scanners with your CI/CD pipeline so that new infrastructure is scanned automatically before deployment. Document remediation workflows: assign severity (e.g., CVSS), set service‑level agreements (SLAs) for fixing high and medium vulnerabilities, and track closure. Konfirmity’s managed service enforces SLA adherence and provides monthly reports that clients can share with auditors.
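SLA tracking reduces to mapping a CVSS score to a severity band and comparing a finding's age with the SLA for that band. The severity bands below follow the CVSS v3.x qualitative rating scale; the SLA day counts and finding fields are hypothetical and should come from your own remediation policy.

```python
from datetime import date


def cvss_severity(score: float) -> str:
    """Map a CVSS v3.x base score to its qualitative severity rating."""
    if score == 0.0:
        return "none"
    if score < 4.0:
        return "low"
    if score < 7.0:
        return "medium"
    if score < 9.0:
        return "high"
    return "critical"


# Hypothetical remediation SLAs in days.
SLA_DAYS = {"critical": 7, "high": 30, "medium": 90, "low": 180}


def overdue_findings(findings: list[dict], today: date) -> list[str]:
    """Return IDs of open findings older than the SLA for their severity."""
    overdue = []
    for f in findings:
        if f.get("closed"):
            continue
        limit = SLA_DAYS.get(cvss_severity(f["cvss"]))
        if limit is not None and (today - f["opened"]).days > limit:
            overdue.append(f["id"])
    return overdue


findings = [
    {"id": "VULN-1", "cvss": 9.8, "opened": date(2025, 6, 1), "closed": False},
    {"id": "VULN-2", "cvss": 5.3, "opened": date(2025, 5, 1), "closed": False},
    {"id": "VULN-3", "cvss": 7.5, "opened": date(2025, 4, 1), "closed": True},
]
print(overdue_findings(findings, today=date(2025, 6, 20)))  # ['VULN-1']
```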

6) Incident response

When a security event occurs, response must be swift and well‑documented. An incident response (IR) plan defines how to detect, triage, contain, eradicate, recover and learn from incidents. Microsoft’s guidance suggests integrating SAP system logs with Azure Monitor to detect anomalies and respond quickly. Adapt this to your data lake by feeding logs from ingestion, access control systems, and scanners into your SIEM. Define playbooks for common scenarios: unauthorized access, data exfiltration, or malware detection. The IR plan should specify roles (SOC analyst, IR lead, communications), escalation paths and notification requirements (e.g., notifying leadership and customers within 24 hours). Maintain evidence of incident handling; auditors look for runbooks, timelines and post‑incident reviews to verify that controls operate effectively.
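Auditors look for timelines and evidence that notification commitments were met, so it helps to capture both in a structured record. The sketch below is a hypothetical incident record, not a feature of any particular ticketing system; the 24-hour window mirrors the example notification requirement in the IR plan described here.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta
from typing import List, Optional, Tuple

NOTIFY_WITHIN = timedelta(hours=24)  # example notification window from the IR plan


@dataclass
class Incident:
    incident_id: str
    detected: datetime
    contained: Optional[datetime] = None
    notified: Optional[datetime] = None
    timeline: List[Tuple[datetime, str]] = field(default_factory=list)

    def log(self, when: datetime, note: str) -> None:
        """Append a timestamped entry to the incident timeline."""
        self.timeline.append((when, note))

    def time_to_contain(self) -> Optional[timedelta]:
        return None if self.contained is None else self.contained - self.detected

    def notified_on_time(self) -> bool:
        return self.notified is not None and self.notified - self.detected <= NOTIFY_WITHIN


inc = Incident("IR-2025-014", detected=datetime(2025, 5, 3, 14, 0))
inc.log(datetime(2025, 5, 3, 14, 5), "SIEM alert triaged by SOC analyst")
inc.log(datetime(2025, 5, 3, 14, 25), "Compromised credential revoked; access isolated")
inc.contained = datetime(2025, 5, 3, 14, 25)
inc.notified = datetime(2025, 5, 4, 9, 0)
print(inc.time_to_contain())   # 0:25:00
print(inc.notified_on_time())  # True
```

Exporting these records alongside the post-incident review gives auditors the timeline, the containment time and proof of timely notification in one place.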

7) Security policies and documentation

Policies translate controls into operational guidance. A SOC 2 program for data lakes should include:

- Access control policy: Defines RBAC, least‑privilege principles and review cadence.

- Encryption policy: Specifies algorithms, key management practices and rotation schedules.

- Retention and deletion policy: Outlines how long data and logs are kept, and how they are securely destroyed.

- Acceptable use policy: Describes permissible actions for employees and contractors.

- Vendor management policy: Sets requirements for third‑party risk assessments, contract clauses and monitoring, echoing Panorays’ recommendation to enforce contractual obligations around access control, encryption and incident reporting.
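A retention and deletion policy is easier to prove when it is also expressed as data that a job can check. A minimal sketch, assuming hypothetical data classes and retention periods (real values come from your policy and applicable regulations) and object metadata exported from the lake:

```python
from datetime import date, timedelta

# Hypothetical retention periods per data class.
RETENTION = {
    "audit_log": timedelta(days=365 * 6),
    "customer_raw": timedelta(days=365 * 2),
    "temp_staging": timedelta(days=30),
}


def retention_actions(objects: list[dict], today: date) -> dict[str, list[str]]:
    """Split objects into those past retention and those lacking a known data class."""
    actions = {"delete": [], "unclassified": []}
    for obj in objects:
        period = RETENTION.get(obj.get("data_class"))
        if period is None:
            actions["unclassified"].append(obj["key"])  # classify before deciding
        elif today - obj["created"] > period:
            actions["delete"].append(obj["key"])
    return actions


objects = [
    {"key": "staging/batch-001.parquet", "data_class": "temp_staging", "created": date(2025, 4, 1)},
    {"key": "audit/2024/05/trail.json.gz", "data_class": "audit_log", "created": date(2024, 5, 1)},
    {"key": "misc/export.csv", "created": date(2025, 1, 1)},
]
print(retention_actions(objects, today=date(2025, 6, 30)))
# {'delete': ['staging/batch-001.parquet'], 'unclassified': ['misc/export.csv']}
```

Deletion itself is usually better enforced by storage lifecycle rules (such as S3 Lifecycle expiration); a check like this confirms the rules match the written policy and surfaces unclassified data.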

Well‑documented policies accelerate SOC 2 readiness. During an audit, you must provide these documents, along with evidence that they are followed. Konfirmity’s managed service includes policy templates and ensures they align with ISO 27001, HIPAA and GDPR so that controls can be reused across frameworks.

Practical Steps to Get SOC 2 Ready

Implementing SOC 2 controls for a data lake is less daunting when broken into discrete steps. Below is a pragmatic sequence drawn from Konfirmity’s experience supporting 6,000+ audits and more than 25 years of combined technical expertise.

Step 1 — Define scope

Identify all data lake assets: storage buckets, databases, ingestion pipelines, processing engines and analytics tools. CloudQuery’s guide recommends starting with a scope definition to ensure you include all systems that handle customer data. Map each asset to the relevant Trust Services Criteria. For example, storage buckets map to Security, Confidentiality and Privacy; ingestion pipelines map to Processing Integrity; analytics clusters map to Availability and Processing Integrity. Don’t forget ancillary systems like code repositories and configuration management tools.
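The asset-to-criteria mapping can live in a small inventory that is validated automatically, which catches forgotten ancillary systems and misspelled criteria. The asset names below are hypothetical; the mappings follow the examples in this step.

```python
TRUST_SERVICES_CRITERIA = {
    "Security", "Availability", "Processing Integrity", "Confidentiality", "Privacy",
}

# Hypothetical inventory: asset name -> criteria it maps to.
assets = {
    "s3://lake-raw": {"Security", "Confidentiality", "Privacy"},
    "ingest-pipeline": {"Processing Integrity"},
    "analytics-cluster": {"Availability", "Processing Integrity"},
    "infra-config-repo": set(),  # ancillary system not yet mapped
}


def validate_scope(assets: dict[str, set[str]]) -> list[str]:
    """Return assets with no criteria mapped; raise on misspelled criterion names."""
    unmapped = []
    for name, criteria in assets.items():
        unknown = criteria - TRUST_SERVICES_CRITERIA
        if unknown:
            raise ValueError(f"{name}: unknown criteria {sorted(unknown)}")
        if not criteria:
            unmapped.append(name)
    return sorted(unmapped)


def assets_by_criterion(assets: dict[str, set[str]]) -> dict[str, list[str]]:
    """Invert the mapping so each criterion lists the assets its evidence must cover."""
    return {
        c: sorted(name for name, crits in assets.items() if c in crits)
        for c in sorted(TRUST_SERVICES_CRITERIA)
    }


print(validate_scope(assets))  # ['infra-config-repo']
print(assets_by_criterion(assets)["Processing Integrity"])
# ['analytics-cluster', 'ingest-pipeline']
```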

Step 2 — Gap assessment

Perform a baseline assessment of your current controls against SOC 2 requirements. Use a checklist or an automated assessment tool to evaluate whether you have network segmentation, RBAC, encryption, logging, vulnerability scanning, IR plans and policies. Identify gaps such as missing MFA, weak key management or unscanned resources. Rate each gap by risk and complexity to prioritize remediation. In our practice, the initial gap assessment typically yields 15–30 findings across a data‑lake environment.
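Rating gaps by risk and complexity turns the assessment into an ordered backlog. The sketch below assumes hypothetical 1–5 scores and one simple ordering rule (highest risk first, cheapest fix first within the same risk); many teams use a weighted score instead.

```python
# Hypothetical gap register with 1-5 risk and effort scores.
gaps = [
    {"id": "G-01", "finding": "No MFA for lake admin role", "risk": 5, "effort": 1},
    {"id": "G-02", "finding": "Provider-managed keys only", "risk": 3, "effort": 3},
    {"id": "G-03", "finding": "Ingestion hosts not scanned", "risk": 4, "effort": 2},
    {"id": "G-04", "finding": "No data classification policy", "risk": 3, "effort": 4},
]


def prioritize(gaps: list[dict]) -> list[dict]:
    """Order gaps by descending risk, then ascending remediation effort."""
    return sorted(gaps, key=lambda g: (-g["risk"], g["effort"]))


for g in prioritize(gaps):
    print(g["id"], g["finding"])
```

Here the missing MFA lands first (high risk, low effort), and the classification policy lands last because it carries the same risk as the key-management gap but takes more work.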

Step 3 — Implement security controls

Using the building blocks above, implement or enhance controls. Create RBAC groups in your identity provider and assign them to storage resources. Enable encryption with customer‑managed keys and rotate them. Centralize logs and set retention periods. Deploy vulnerability scanners and integrate them with CI/CD pipelines. Use the network segmentation and encryption guidelines outlined by Panorays and HHS. Configure incident response playbooks and connect them to ticketing systems. This stage often requires cross‑team collaboration; engineers, security operations and compliance leads must work together. With Konfirmity’s support, most clients reach initial control implementation within 6–8 weeks, compared to 16 weeks when self‑managed.

Step 4 — Continuous monitoring

SOC 2 Type 2 demands evidence over time. Automate control monitoring by integrating cloud logs, IAM events, vulnerability scans and incident tickets into dashboards. Tools like AWS Config, Azure Policy, GCP Config Connector and third‑party security platforms can provide continuous drift detection. Panorays highlights the importance of continuous monitoring and automation to detect misconfigurations and respond quickly. Develop dashboards that show control status (e.g., percentage of resources with encryption enabled, number of privileged accounts without MFA, vulnerability remediation timelines). Configure alerts for control failures or unusual activity. Schedule periodic access reviews and policy updates. Continuous monitoring reduces audit effort and gives management real‑time visibility.
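The dashboard metrics named above are straightforward aggregations over a resource and account inventory. A minimal sketch, assuming hypothetical inventory fields (`encrypted`, `privileged`, `mfa`) populated from your cloud configuration and identity provider:

```python
def control_metrics(resources: list[dict], accounts: list[dict]) -> dict:
    """Compute encryption coverage and list privileged accounts lacking MFA."""
    total = len(resources)
    encrypted = sum(1 for r in resources if r.get("encrypted"))
    return {
        "pct_encrypted": round(100.0 * encrypted / total, 1) if total else 100.0,
        "privileged_without_mfa": sorted(
            a["name"] for a in accounts if a.get("privileged") and not a.get("mfa")
        ),
    }


def alerts(metrics: dict) -> list[str]:
    """Turn control failures into alert messages."""
    out = []
    if metrics["pct_encrypted"] < 100.0:
        out.append(f"Encryption coverage at {metrics['pct_encrypted']}%")
    for name in metrics["privileged_without_mfa"]:
        out.append(f"Privileged account without MFA: {name}")
    return out


resources = [
    {"id": "lake-raw", "encrypted": True},
    {"id": "lake-curated", "encrypted": True},
    {"id": "lake-audit", "encrypted": True},
    {"id": "scratch", "encrypted": False},
]
accounts = [
    {"name": "dana", "privileged": True, "mfa": True},
    {"name": "ops-admin", "privileged": True, "mfa": False},
    {"name": "erin", "privileged": False, "mfa": False},
]
metrics = control_metrics(resources, accounts)
print(alerts(metrics))
```

Run on a schedule, the stored metric history doubles as Type 2 evidence that the controls held across the observation period.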

Step 5 — Prepare for the audit

Before inviting the auditor, assemble evidence. Auditors will request policies, risk assessments, change management records, access review logs, vulnerability scans and incident reports. Use your data lake’s logging and monitoring capabilities to produce reports that demonstrate control effectiveness. Provide diagrams of your architecture (like the one in this article) and show how controls map to each component. Conduct a mock audit with your internal or external compliance team to identify any remaining gaps. Konfirmity typically schedules the official audit 4–5 months after the program begins, reducing time‑to‑audit by half compared to self‑managed efforts.
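A useful pre-audit check for a Type 2 report is whether recurring controls actually ran in every period of the observation window. The sketch below assumes a quarterly access review cadence (a hypothetical policy choice) and reports quarters with no review on record:

```python
from datetime import date


def quarter_of(d: date) -> tuple[int, int]:
    return (d.year, (d.month - 1) // 3 + 1)


def quarters_between(start: date, end: date) -> list[tuple[int, int]]:
    """List (year, quarter) pairs overlapping the window [start, end]."""
    quarters = []
    year, q = quarter_of(start)
    while (year, q) <= quarter_of(end):
        quarters.append((year, q))
        q += 1
        if q == 5:
            year, q = year + 1, 1
    return quarters


def missing_review_quarters(review_dates: list[date], start: date, end: date) -> list[tuple[int, int]]:
    """Return quarters in the window with no access review on record."""
    covered = {quarter_of(d) for d in review_dates if start <= d <= end}
    return [yq for yq in quarters_between(start, end) if yq not in covered]


reviews = [date(2024, 8, 15), date(2024, 11, 20), date(2025, 5, 2)]
print(missing_review_quarters(reviews, date(2024, 7, 1), date(2025, 6, 30)))
# [(2025, 1)]
```

Running this before the mock audit gives you time to explain or remediate the gap rather than having the auditor find it.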

Example Architecture

Below is a simplified architecture that illustrates how the controls integrate across a data lake environment. Raw data flows from sources into cloud object storage. IAM and RBAC restrict access. Encryption layers protect data at rest and in transit. Logs feed into a SIEM for continuous monitoring. Vulnerability scanners operate on the infrastructure. Incident response processes and an audit pipeline sit at the bottom to handle incidents and provide evidence for auditors.

Tools & Technologies

Selecting the right tools accelerates SOC 2 readiness for a data lake. The table below lists examples for each control category. Replace or expand these with equivalents from your stack.

Real‑World Examples

Example A: Multi‑tenant RBAC model. A SaaS provider built a central data lake for log analytics. Without RBAC, analysts from one customer could see another customer’s logs. Konfirmity designed a multi‑tenant RBAC model using AWS IAM roles, AWS Lake Formation resource policies and attribute‑based access control (ABAC). We created roles for each tenant and restricted access at the table and column level. After implementation, the provider passed its SOC 2 Type 2 audit, and the average access‑review time dropped from two days to two hours.
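The core of the ABAC rule in a model like this is that a principal's tenant tag must equal the resource's tenant tag. In AWS IAM, one common way to express that is a condition such as `"StringEquals": {"aws:ResourceTag/tenant": "${aws:PrincipalTag/tenant}"}`. The Python function below is only an illustration of that decision logic, with hypothetical tag names and actions, not the provider's actual implementation:

```python
def abac_allows(principal_tags: dict, resource_tags: dict, action: str, allowed_actions: set) -> bool:
    """Allow only permitted actions where the principal's tenant tag matches the resource's."""
    tenant = principal_tags.get("tenant")
    return (
        action in allowed_actions
        and tenant is not None  # untagged principals get no access
        and tenant == resource_tags.get("tenant")
    )


ANALYST_ACTIONS = {"select"}  # hypothetical action set for analysts
acme_analyst = {"tenant": "acme", "role": "analyst"}
print(abac_allows(acme_analyst, {"tenant": "acme"}, "select", ANALYST_ACTIONS))    # True
print(abac_allows(acme_analyst, {"tenant": "globex"}, "select", ANALYST_ACTIONS))  # False
print(abac_allows({}, {}, "select", ANALYST_ACTIONS))                              # False
```

Denying by default when the tag is missing matters: without the `tenant is not None` check, an untagged principal would match an untagged resource.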

Example B: Logging pipeline for audit evidence. A healthtech company needed a SOC 2 Type 2 report to close hospital contracts. They already used Amazon S3 and Athena as their data lake but lacked centralized logging. We configured CloudTrail and CloudWatch Logs to capture API calls, configuration changes and IAM events, then ingested those logs into a dedicated audit bucket. Queries against the audit bucket produced evidence for change management, access reviews and incident response. The auditor commented that the evidence was among the most complete they had seen.

Example C: Incident response triggered by unusual access. A financial services firm detected repeated failed login attempts to their data lake. The SIEM flagged the activity, and the incident response playbook automatically invoked an isolation procedure. The team reviewed logs, identified a compromised API key and rotated it immediately. The incident took less than 30 minutes to contain. During the SOC 2 audit, the firm provided the incident report and root‑cause analysis, demonstrating that controls operated effectively over time.
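The detection in this example follows a common pattern: count failed authentications per principal inside a sliding time window and alert when a threshold is crossed. A minimal sketch, assuming events arrive sorted by time as `(timestamp, principal, outcome)` tuples and using a hypothetical threshold of five failures in five minutes:

```python
from collections import defaultdict, deque
from datetime import datetime, timedelta

WINDOW = timedelta(minutes=5)  # hypothetical detection window
THRESHOLD = 5                  # hypothetical failure count


def detect_bruteforce(events):
    """Return (principal, timestamp) pairs where failures within WINDOW reach THRESHOLD."""
    recent = defaultdict(deque)
    found = []
    for ts, principal, outcome in events:
        if outcome != "failure":
            continue
        q = recent[principal]
        q.append(ts)
        while q and ts - q[0] > WINDOW:  # drop failures outside the window
            q.popleft()
        if len(q) >= THRESHOLD:
            found.append((principal, ts))
            q.clear()  # avoid re-alerting on the same burst
    return found


base = datetime(2025, 5, 3, 2, 0, 0)
events = sorted(
    [(base + timedelta(seconds=30 * i), "api-key-7", "failure") for i in range(5)]
    + [(base + timedelta(seconds=10), "alice", "failure")]
)
print(detect_bruteforce(events))
```

In a SIEM the same logic usually lives in a scheduled or streaming rule; the alert then becomes the first timeline entry in the incident record auditors review.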

Conclusion

Security that looks good in documents but fails under pressure is a liability. Enterprise buyers and regulators expect evidence of continuous control operation. A data lake can drive innovation and insight, but it also concentrates risk. By focusing on the SOC 2 controls that matter for a data lake (network security, access control, encryption, logging, vulnerability management, incident response and policy governance), you can make your lake a cornerstone of trust rather than a compliance burden. Following the steps outlined here, backed by real metrics and sources, will help you shorten sales cycles, satisfy auditors and protect customer data.

FAQs

1. Does SOC 2 apply to data lakes?

Yes. SOC 2 covers any system that processes or stores customer data. If your data lake ingests logs, analytics data or personal information, it must be included in the audit scope. The CloudQuery guide advises defining scope and mapping assets to the Trust Services Criteria.

2. What security controls are required for data lakes?

Core controls include network segmentation, role‑based access control with MFA, encryption of data at rest and in transit, centralized logging, regular vulnerability scanning, incident response procedures and documented policies.

3. How do auditors assess data lake access?

Auditors review access control policies, role assignments and evidence of least‑privilege enforcement. They examine logs to confirm that privileged actions are logged and that access reviews are performed regularly. Data must be classified and protected at the appropriate level.

4. What logging is expected for SOC 2?

Auditors expect logs that show who accessed data, when configurations changed and how incidents were handled. Use native cloud logging services (CloudTrail, CloudWatch, Azure Monitor) and ingest logs into a centralized data lake for long‑term retention and analysis. Policies should specify retention periods and deletion methods.

5. How is encryption tested during audits?

Auditors verify that encryption is enabled on storage resources and that data is transmitted over secure protocols (TLS). They review key management policies, key rotation schedules and evidence of encryption at rest and in transit. For regulated industries like healthcare, the proposed HIPAA rule would make encryption and MFA mandatory, so auditors will pay particular attention to these controls.
