Most large procurement teams now ask for proof of operational security before they sign. Contracts come with security addenda, questions about audit reports and demands for continuous logs. This environment has been shaped by rising breach costs, new privacy rules and the expansion of remote work. The IBM 2025 Cost of a Data Breach Report shows the global average cost at USD 4.44 million, with US incidents exceeding USD 10 million and 16 percent of breaches involving attackers using machine learning. At the same time, the CBIZ 2024 SOC benchmark study found that 15 percent of SOC 2 reports took more than 100 days to issue and that incomplete evidence and user access issues were leading causes of delay. The message for vendors selling to enterprises is clear: provide continuous evidence of security, or deals stall. In this article we unpack how SOC 2 Evidence Review Cadence influences procurement.

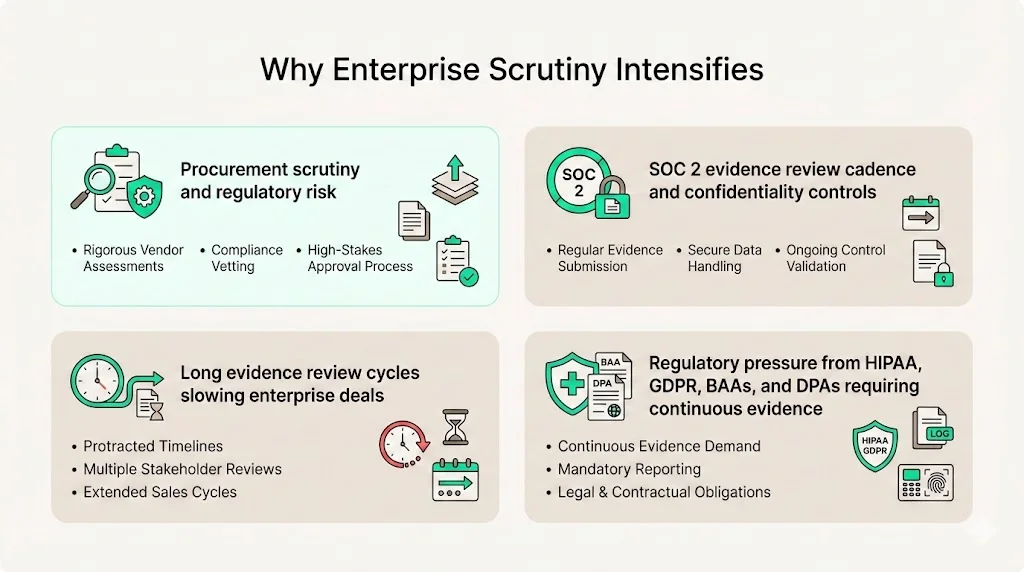

Why scrutiny intensifies when selling to enterprise clients

SOC 2 Evidence Review Cadence is often the measure used by procurement teams to evaluate vendors. Enterprise procurement functions evaluate vendors through a lens of regulatory risk and reputational exposure. Data from CBIZ shows that confidentiality was included in 64 percent of SOC 2 reports in 2024, up from 34 percent the prior year. Procurement teams see proof of confidentiality controls as essential. They also care about the timing and completeness of evidence. When a vendor’s evidence review schedule is unclear, buyers may view the program as immature and require additional assurances. Long review cycles slow down deals—CBIZ found that some reports remained open for over three months. Recent research shows that a significant share of breaches exploit advanced automation, and most of those incidents lacked proper access controls. Enterprise buyers therefore want assurance that vendors regularly check logs, permissions and incident tickets.

Regulatory developments heighten pressure. In the US, the Health Insurance Portability and Accountability Act (HIPAA) requires administrative, physical and technical safeguards for electronic protected health information. The European Union’s General Data Protection Regulation (GDPR) demands data minimisation, purpose limitation and documentation of processing activities. To demonstrate readiness under these frameworks, vendors must collect and review evidence continuously, not just before an audit. Vendors that operate in healthcare also sign Business Associate Agreements (BAAs) or Data Processing Addenda (DPAs) that require them to conduct risk assessments and maintain logs. Enterprise buyers expect SOC 2 Type II reports as part of this diligence because they show controls operating effectively over time.

Why evidence review cadence is a common failure point

Without a clear SOC 2 Evidence Review Cadence, many organisations stumble: they focus on collecting evidence items but neglect to define how often they will review them. Evidence review cadence refers to the rhythm at which teams examine logs, access reports, vulnerability scans and other evidence to verify that controls are working as intended. In practice, this cadence is where audits often fail. DSALTA’s playbook on SOC 2 suggests a monthly cycle: week 1 is for control reviews, week 2 for evidence collection, week 3 for internal spot audits and week 4 for remediation and a wrap‑up. Teams using such a cycle reported 40 percent faster audit completion and a 60 percent reduction in manual preparation due to automation. In contrast, teams that attempt to review everything at once or rely solely on year‑end preparation leave gaps. The CBIZ benchmark found that user access reviews were the top reason for qualified opinions. These issues arise because teams either fail to schedule access reviews or do them in a cursory manner.

Evidence review cadence also breaks down when evidence collection and review are conflated. Sprinto’s audit‑readiness guide warns that mixing these stages leads to stale evidence: when teams gather and review at the same time, they risk using outdated logs or missing critical events. Without a defined cadence, controls may drift, causing auditors to flag missing or backdated reviews. Auditors are trained to look for timing, consistency and proof, not just the existence of documents.

This article will clarify what SOC 2 Evidence Review Cadence means, show how it fits into the broader audit process, and offer practical templates. We will discuss the difference between evidence review and control testing, explain how cadence ties into SOC 2 Type I versus Type II, and provide examples of daily, weekly, monthly, quarterly and annual schedules. We will also map cadence to the five Trust Services Criteria and outline how to build an audit‑ready schedule. Throughout, we will draw on guidance from the American Institute of Certified Public Accountants (AICPA) and on patterns from Konfirmity’s delivery work across more than 6,000 audits.

What this cadence means

SOC 2 Evidence Review Cadence is the planned frequency at which a company examines collected data to verify that each control is functioning. It is part of the broader monitoring process defined by AICPA. Under the SOC 2 framework, monitoring activities (CC4) require management to evaluate controls continuously. Cadence fills this requirement by specifying “when” and “how often” evidence is reviewed.

How cadence fits into the audit process

The SOC 2 audit process has three phases: planning, fieldwork and reporting. During planning, the auditor and the service organisation agree on the scope, the control objectives and the observation period. For a Type I audit, the observation period is a single date; for a Type II, it is typically three to twelve months. Evidence collection occurs throughout the observation period, while evidence review occurs at scheduled intervals to confirm that control activities have taken place. Control testing is the auditor’s responsibility; evidence review is management’s. A well‑defined cadence ensures that by the time the auditor arrives for fieldwork, there is a record of periodic reviews and any issues have been addressed.

Collecting evidence versus reviewing it

Collecting evidence means capturing raw evidence items—log exports, access change tickets, vulnerability scan results, training attendance records. Reviewing evidence means examining those evidence items to confirm that they meet the control requirements. Ostendio’s playbook for SOC 2 readiness emphasises this distinction: evidence review creates documentation that supports each control, while control testing evaluates whether technical and administrative controls operate effectively. Sprinto adds that reviewing evidence requires sampling and analysis rather than merely storing files.

Why auditors focus on timing, consistency and proof

Auditors look past the presence of documents. They inspect timestamps, reviewer names and comments. They check that reviews were performed at the frequency promised in the control description. NIST Special Publication 800‑137 explains that monitoring frequencies should be matched to system criticality and that plans should be updated when conditions change. Auditors want to see that management adjusted frequency when risk increased and that evidence review was not performed retroactively. Tools that automate evidence collection can help, but they don’t remove the need for human oversight. Linford & Company points out that while automation reduces manual effort, auditors must remain independent and validate data to avoid conflicts.

Why cadence matters to enterprise buyers

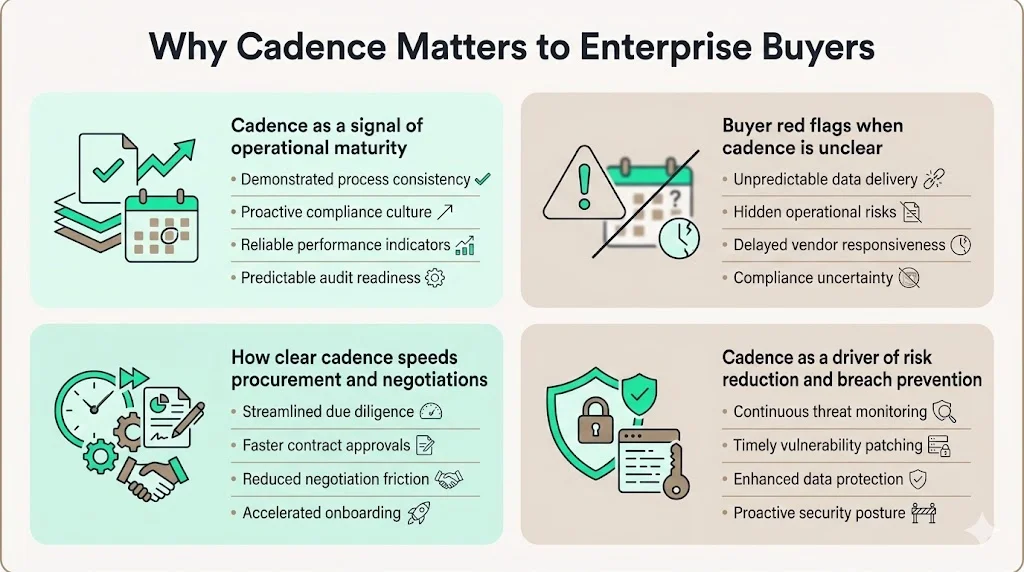

For enterprise procurement teams, SOC 2 Evidence Review Cadence signals operational maturity. Enterprise buyers evaluate vendors against risk management frameworks. In request‑for‑information (RFI) or vendor assessments, they ask for SOC 2 reports, ISO 27001 certificates or HIPAA assessments. They look for ongoing compliance monitoring rather than point‑in‑time documents. A consistent evidence review cadence demonstrates that a vendor runs a living security program. Without it, procurement teams may flag the vendor as high risk, extend due diligence, or require contractual commitments such as right‑to‑audit clauses.

Red flags buyers spot when cadence is unclear include backdated reviews, inconsistent logs, and missing reviewer signatures. These issues suggest that the program is compliance‑driven rather than risk‑driven. In our experience at Konfirmity, deals have stalled because vendors could not show that they performed access reviews throughout the year. When cadence is documented and clear, buyers gain confidence that the vendor’s controls operate daily, not just for the audit. This shortens security questionnaires and accelerates negotiations.

Establishing cadence also supports risk management. For instance, reviewing log events weekly helps catch anomalies early. Running vulnerability scans weekly or monthly ensures that patches are applied within service level agreements (SLAs). Quarterly risk assessments allow management to adjust controls. Without cadence, control drift increases and the probability of undetected misconfigurations rises. For enterprise buyers, the link between regular evidence review and reduced breach risk is intuitive. Research shows that most automation‑driven breaches lacked proper access controls; a vendor that shows quarterly access reviews demonstrates a proactive stance.

Evidence review versus control testing

Distinguishing SOC 2 Evidence Review Cadence from control testing is critical. One of the most common mistakes teams make is treating evidence review and control testing as the same activity. Evidence review is management’s ongoing check to ensure that control activities occurred. Control testing is the auditor’s independent evaluation of whether controls are designed appropriately and operating effectively. The difference matters because mixing them can cause independence issues and lead auditors to question the reliability of evidence.

What counts as evidence review

Evidence review includes verifying that access changes followed the least‑privilege process, confirming that vulnerability scans ran and that critical findings were remediated, inspecting incident tickets to ensure proper classification and closure, and confirming that vendor assessments were performed. Each review should include a date, the reviewer’s name, the scope of the review and any findings or remediations. The review can be documented in a ticket, spreadsheet or an audit tool.

What counts as control testing

Control testing is broader and is performed by the auditor or internal audit function. It involves inspecting design and operating effectiveness through sampling and testing. For example, the auditor may select ten access changes from the observation period and trace them to evidence that the least‑privilege process was followed. They may check logs to see that alert thresholds are set correctly. Evidence review supports control testing by providing the underlying documentation, but the two are distinct. Ostendio emphasises that evidence review is separate from control testing.

Mistakes from mixing the activities

Mixing evidence review with control testing often leads to two outcomes. First, teams delay reviews until right before the audit, then attempt to retrospectively review months of logs. This results in backdated entries and incomplete sampling. Second, teams use auditor test results as a substitute for ongoing evidence review. This reduces independence and can be flagged by auditors. The solution is to build a routine schedule for evidence review and regard control testing as an independent assurance activity.

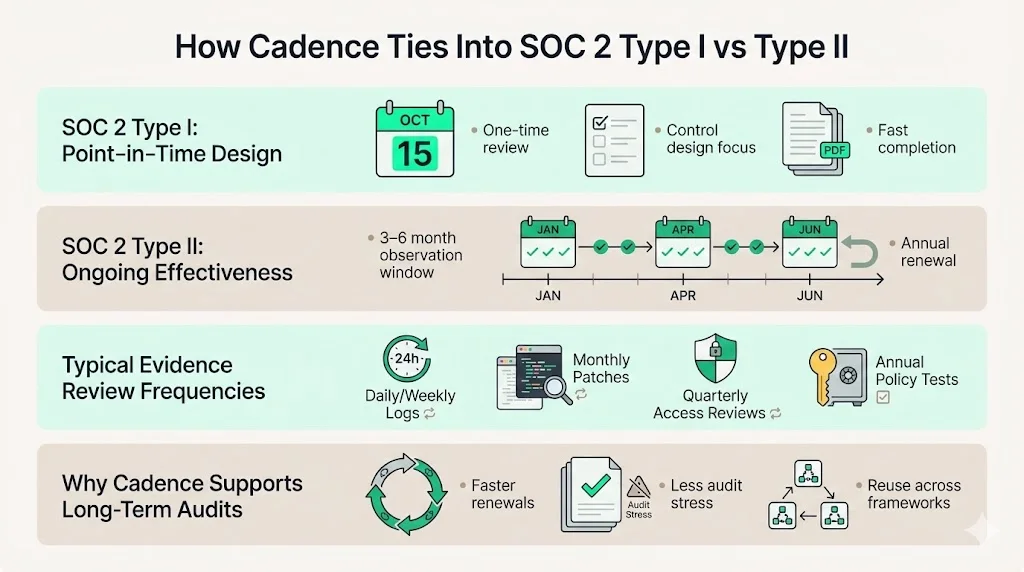

How cadence ties into SOC 2 Type I versus Type II

The SOC 2 Evidence Review Cadence depends on whether you pursue Type I or Type II. SOC 2 Type I reports evaluate the design of controls at a single point in time. There is no requirement to show operating effectiveness over a period. However, evidence review still matters because it supports management’s assertion about control design. A Type I report can be completed relatively quickly, often within a month of control implementation. In contrast, Type II reports assess both design and operating effectiveness over an observation period. Drata advises at least three to six months of evidence for a meaningful Type II evaluation and states that annual renewals are typical. Clients often ask for six months of data because it allows auditors to sample across multiple cycles (e.g., several access reviews or patch cycles).

One‑time versus ongoing review

In a Type I context, evidence review may be a one‑time event: management reviews all controls and collects evidence to show they have been designed. There is no requirement for periodic review. However, if a company plans to upgrade to a Type II, building cadence early is wise. For Type II, evidence review must be ongoing: weekly for logs, monthly for patches, quarterly for risk assessments. The audit opinion will cover the entire observation period, so any gap in evidence review can result in a qualification.

Typical review periods auditors expect

Auditors expect review frequencies to match risk. For high‑risk areas such as log monitoring and access control, daily or weekly reviews are common. Vulnerability scanning may be weekly or monthly, depending on the environment. User access reviews are typically monthly or quarterly, depending on the size of the organisation. Policy reviews and business continuity tests are often annual. NIST suggests matching the frequency to system criticality and revising it when conditions change. Auditors will compare the stated cadence in the control description with the evidence presented. If the control says “weekly access reviews” and only monthly evidence exists, the auditor will record the deviation.
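The comparison between a stated cadence and the dates on the evidence can be rehearsed internally before fieldwork. The following is a minimal Python sketch, not an auditor's procedure: the function name `find_cadence_gaps` and the tolerance values in `MAX_GAP_DAYS` are illustrative assumptions that each team would set for itself.

```python
from datetime import date, timedelta

# Longest allowed distance between consecutive reviews for each stated cadence.
# These tolerances are illustrative assumptions, not auditor guidance.
MAX_GAP_DAYS = {"daily": 1, "weekly": 7, "monthly": 31, "quarterly": 92, "annual": 366}


def find_cadence_gaps(stated_cadence, review_dates, period_start, period_end):
    """Return (gap_start, gap_end) pairs where reviews were too far apart."""
    limit = timedelta(days=MAX_GAP_DAYS[stated_cadence])
    in_period = sorted(d for d in review_dates if period_start <= d <= period_end)
    # Bracket the reviews with the period boundaries so that a late first
    # review or an early last review is reported as well.
    points = [period_start] + in_period + [period_end]
    return [(a, b) for a, b in zip(points, points[1:]) if b - a > limit]


# The control description says "weekly", but evidence exists only once a month.
reviews = [date(2025, 1, 6), date(2025, 2, 3), date(2025, 3, 3)]
gaps = find_cadence_gaps("weekly", reviews, date(2025, 1, 1), date(2025, 3, 31))
for start, end in gaps:
    print(f"No review between {start} and {end}")
```

With these sample dates the weekly check reports three gaps, while the same evidence passes a monthly check. This is the deviation described above: the evidence is fine for one cadence and deficient for the one actually promised.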

How cadence supports long‑term security assessments

SOC 2 Type II audits are renewed annually. Building cadence supports these renewals by ensuring that evidence continues to accumulate and that controls remain effective. Without cadence, teams often scramble each year to prepare for the audit, causing stress and increasing the chance of missed issues. A routine cadence turns compliance into part of daily operations, reducing overhead. DSALTA’s playbook found that organisations with a monthly evidence review cycle completed audits 40 percent faster. Maintaining cadence also helps when expanding into additional frameworks like ISO 27001 or HIPAA because the same evidence can support multiple control objectives.

Common evidence review cadences (with examples)

A practical cadence is customized to a company’s risk profile, but typical patterns appear across many audits. Below are examples of daily through annual reviews. These examples come from our delivery work and follow guidance from AICPA, NIST and industry practice.

Daily and continuous reviews

- Log monitoring: Examine security information and event management (SIEM) alerts daily to identify suspicious activities. For example, check failed login attempts, abnormal access times or unusual data transfers. Document any investigations and remediation.

- Access changes: Review provisioning and de‑provisioning tickets daily to ensure that new users have appropriate permissions and departing users are removed promptly. Flag any exceptions for remediation.

- Security alerts: Respond to and document alerts from intrusion detection systems (IDS), endpoint detection and response (EDR) tools and anti‑virus software as they occur. Confirm that alerts are triaged within the defined SLA.

Continuous reviews may involve automated dashboards that update in real time. However, human oversight is necessary to interpret alerts and verify that the system is functioning. DSALTA points out that automation can reduce manual preparation by up to 60 percent, but only when integrated into the review cadence.

Weekly reviews

- Vulnerability scans: Run vulnerability scanners and review results weekly. Document critical findings and track remediation. For example, high‑severity Common Vulnerability Scoring System (CVSS) scores should be remediated within a defined timeframe.

- Incident tracking: Review the incident ticket queue to ensure that incidents are properly categorised, assigned and resolved. Confirm that root‑cause analyses are performed and that lessons learned are documented.

- Ticket review checks: Examine change management tickets or support tickets to detect patterns or recurring issues. Ensure that changes follow the change control process and that approval steps are documented.

Monthly reviews

- User access reviews: Compare user lists with HR records and role matrices to verify that each user’s access is justified. Remove inactive or excessive privileges. The CBIZ benchmark shows that user access reviews are a common failure point, making monthly reviews critical.

- Patch management evidence: Collect and review evidence that patches were applied within the defined SLA. Confirm that a patch management policy exists and that exceptions are documented.

- Vendor risk checks: Review vendor risk assessments and monitor third‑party SLAs. Verify that vendors handling sensitive data have updated security attestations and that contracts include appropriate clauses.
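The user access review in the list above is largely a comparison of three data sets: accounts in a system, active employees from HR, and the permissions each role is allowed. A hedged Python sketch of that comparison follows. The function name, the dictionary shapes and the sample users are hypothetical, and real exports will need normalising before they fit this form.

```python
def review_user_access(system_accounts, active_employees, role_matrix):
    """Compare system accounts with HR records and a role matrix.

    system_accounts:  user -> set of permissions currently granted
    active_employees: user -> role, taken from the HR system
    role_matrix:      role -> set of permissions that role may hold
    Returns a list of (user, finding) tuples for the reviewer to act on.
    """
    findings = []
    for user, granted in sorted(system_accounts.items()):
        role = active_employees.get(user)
        if role is None:
            # Account exists but HR has no active record for this person.
            findings.append((user, "no active HR record; remove account"))
            continue
        excess = granted - role_matrix.get(role, set())
        if excess:
            findings.append((user, "excess privileges: " + ", ".join(sorted(excess))))
    return findings


# Hypothetical sample data for illustration only.
accounts = {"alice": {"read"}, "bob": {"read", "admin"}, "carol": {"read"}}
hr_records = {"alice": "analyst", "bob": "analyst"}
matrix = {"analyst": {"read"}}

for user, finding in review_user_access(accounts, hr_records, matrix):
    print(user, "->", finding)
```

The output is only a list of candidates. The reviewer still decides whether each finding is a true exception, and records the date, name and remediation alongside it.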

Quarterly reviews

- Risk assessments: Conduct a quarterly risk assessment focusing on changes in technology, processes or business context. Identify new threats and adjust controls accordingly.

- Policy reviews: Review security policies, procedures and standards at least quarterly to ensure they reflect current practice and regulatory requirements.

- Internal audit checkpoints: If the organisation has an internal audit function, schedule quarterly control testing to assess the effectiveness of controls and the accuracy of evidence reviews.

Annual reviews

- Business continuity testing: Conduct a full test of the disaster recovery plan and business continuity plan annually. Document results and remediation actions.

- Security training completion: Verify that all employees have completed required security awareness and privacy training. Document completion rates and follow up on any exceptions.

- Management review sign‑off: Management should review the overall security program annually, including the results of evidence reviews, risk assessments and audits. They should sign off on the program and approve any changes.

These cadences may vary based on organisational size, industry and risk appetite. It is important to set frequencies that are sustainable and matched to the criticality of each control.

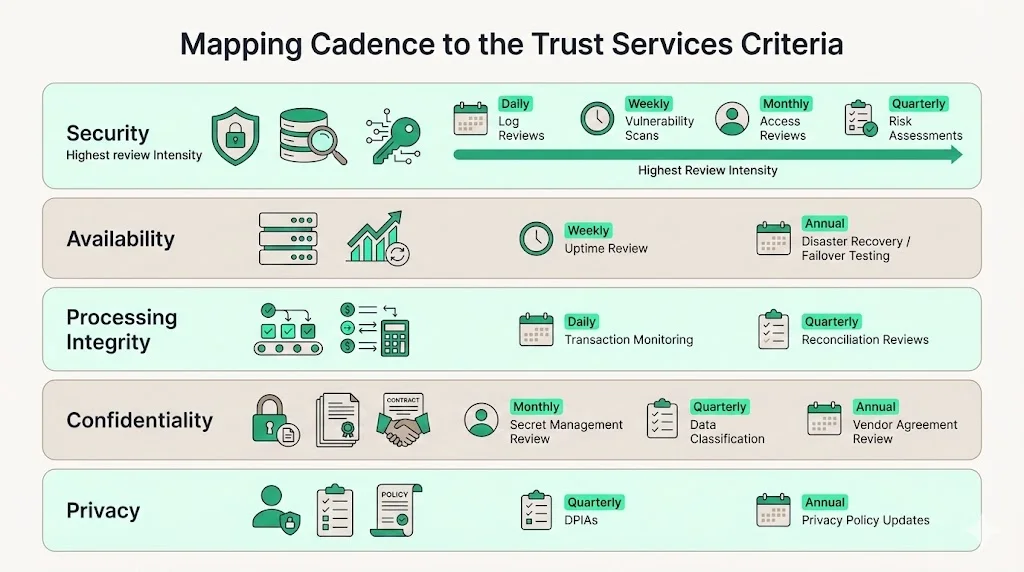

Mapping cadence to the Trust Services Criteria

SOC 2 encompasses five Trust Services Criteria (TSC): Security, Availability, Processing Integrity, Confidentiality and Privacy. Each criterion has specific controls, and cadence varies accordingly.

Security

Security controls protect against unauthorised access. Evidence review cadence here is often the most intense. Log monitoring should be daily; vulnerability scans weekly; user access reviews monthly; and risk assessments quarterly. Because security lapses can lead to immediate breaches, enterprise buyers and auditors scrutinise the cadence of these controls closely. CBIZ observed that user access issues were the primary reason for qualified opinions. Frequent reviews mitigate that risk.

Availability

Availability controls ensure that systems are up and data is accessible when needed. Evidence might include uptime monitoring logs, incident response times and disaster recovery tests. Reviews may be weekly for uptime and annually for full failover tests. For example, examine system uptime dashboards weekly and test failover annually.

Processing Integrity

Processing Integrity controls ensure that systems process data completely, accurately and on a timely basis. Evidence may include transaction logs, reconciliation reports and automated checks. The cadence could be daily for transaction monitoring and quarterly for reconciliation reviews. For example, review daily processing logs for errors and conduct quarterly reconciliations.

Confidentiality

Confidentiality controls protect sensitive information. Evidence includes encryption key management logs, data classification reports, access control lists and vendor agreements. Reviews may be monthly for key management, quarterly for classification updates and annually for vendor contractual reviews. The CBIZ study noted a significant rise in confidentiality coverage across reports, reflecting buyer demand for stronger confidentiality controls.

Privacy

Privacy controls govern the collection, use, retention, disclosure and disposal of personal information. Evidence may include consent records, data minimisation logs, Data Protection Impact Assessments (DPIAs) and cross‑border transfer documentation. Reviews could be quarterly for DPIAs and annually for policy updates. Privacy cadences may be less frequent than security, but they require careful documentation to satisfy GDPR and other privacy laws.

Overall, security criteria typically carry the heaviest review load because they address immediate threats. Availability and processing integrity require regular but slightly less intense review. Confidentiality and privacy demand periodic checks tied to policy and vendor management.

Building an audit‑ready evidence review schedule

To build an audit‑ready schedule, start by mapping each control to a review frequency based on risk and regulatory requirements. For example, tie log review to daily checks and user access reviews to monthly checks. Make the cadence consistent with applicable standards: HIPAA may require annual contingency plan testing, ISO 27001 may require annual internal audits and GDPR may require periodic DPIAs. Use the observation period for SOC 2 to define the starting point for each review. For Type II, ensure that each review occurs at least once during the period.
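One way to turn a control-to-frequency mapping into a concrete schedule is to generate due dates across the observation period. The sketch below is a simplification under stated assumptions: the interval lengths in `INTERVAL_DAYS` are approximations (a month is treated as 30 days), the first review is assumed to fall on the first day of the period, and the function name `due_dates` is hypothetical.

```python
from datetime import date, timedelta

# Approximate interval lengths; calendar-aware scheduling is left out on purpose.
INTERVAL_DAYS = {"daily": 1, "weekly": 7, "monthly": 30, "quarterly": 91, "annual": 365}


def due_dates(frequency, period_start, period_end):
    """List review due dates from period_start up to and including period_end."""
    step = timedelta(days=INTERVAL_DAYS[frequency])
    dates = []
    current = period_start
    while current <= period_end:
        dates.append(current)
        current += step
    return dates


# A six-month Type II observation period.
start, end = date(2025, 1, 1), date(2025, 6, 30)
for frequency in ("weekly", "monthly", "quarterly", "annual"):
    print(frequency, len(due_dates(frequency, start, end)))
```

For this six-month window the sketch yields 26 weekly, 7 monthly, 2 quarterly and 1 annual due date, so even the annual controls receive at least one review inside the period.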

Avoiding gaps in walkthroughs

Auditors often perform walkthroughs where they trace a sample control from start to finish. They will ask when evidence was reviewed, who reviewed it and where it is stored. If there is a month without an access review or a quarter without a risk assessment, the auditor may issue a finding. Document the cadence in a schedule and assign ownership. Use reminders and calendar invites to keep the schedule on track.

Keeping cadence realistic for lean teams

Smaller teams may struggle to review evidence weekly or monthly. In our work at Konfirmity, we customize cadences based on team capacity. For example, a company with ten employees and a limited infrastructure may perform access reviews quarterly instead of monthly. It is important to justify the frequency based on risk and to ensure that each control receives at least one review during the observation period. Automation can offload repetitive tasks, but human review remains essential.

Evidence review cadence template

A simple table can help organise the evidence review cadence. A typical template has one row per control and columns for the control name, evidence type, review frequency, reviewer role, storage location and proof of review.

This table can be extended to include all controls. Auditors evaluate each column. They confirm that the control name matches the control description, the evidence type is appropriate, the review frequency matches the risk, the reviewer role has appropriate authority, the storage location preserves evidence integrity and the proof of review contains timestamps and signatures. Keeping the table up to date ensures that no control is overlooked. DSALTA’s monthly cycle can be captured here by listing each weekly activity across the month.
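For teams that prefer to keep the schedule in version control, the same columns can be held as structured data and exported to a spreadsheet. This is a sketch only: the class name `CadenceRow`, the helper `to_csv` and every value in the example row are hypothetical placeholders, not a prescribed template.

```python
import csv
import io
from dataclasses import asdict, dataclass, fields


@dataclass
class CadenceRow:
    """One row of the cadence table; fields mirror the columns auditors evaluate."""
    control_name: str
    evidence_type: str
    review_frequency: str
    reviewer_role: str
    storage_location: str
    proof_of_review: str


def to_csv(rows):
    """Render cadence rows as CSV text with a header line."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=[f.name for f in fields(CadenceRow)])
    writer.writeheader()
    for row in rows:
        writer.writerow(asdict(row))
    return buffer.getvalue()


# Hypothetical example row; replace every value with your own control data.
example = CadenceRow(
    control_name="User access review",
    evidence_type="HRIS access export",
    review_frequency="monthly",
    reviewer_role="Security lead",
    storage_location="evidence/access-reviews/",
    proof_of_review="Dated ticket comment with reviewer name",
)

print(to_csv([example]))
```

Keeping one row per control in a file like this makes changes to the cadence visible in the commit history, which is itself a record of when management adjusted a frequency.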

Evidence collection and storage best practices

Centralising evidence is critical. Store evidence in a secure repository that offers access control, versioning and audit trails. Avoid scattering files across personal drives or email threads. Each piece of evidence should follow a consistent naming convention, such as controlname_yearmonth_description, to simplify retrieval. Version control allows teams to track updates and maintain a history. Auditors appreciate organised evidence because it reduces time spent searching during fieldwork.

Timestamps are vital. When exporting logs or reports, include the date of export in the file name and ensure that the system generating the evidence is synchronised to a reliable time source. This prevents disputes about when evidence was captured. For example, when collecting SIEM logs, export them with the range 2025‑01‑01_to_2025‑01‑07 and store the file in a folder labelled logs/weekly. Use write‑once storage or systems with access control to prevent tampering.

Naming conventions should be descriptive. Use consistent abbreviations for systems and controls. For example, HRIS_access_review_Q1_2025.xlsx clearly identifies the system, the control and the period. Avoid ambiguous names like Report1.xlsx. Well‑named files allow reviewers and auditors to trace evidence quickly.
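A naming convention is easier to keep when file names are generated and checked by a small helper rather than typed by hand. The sketch below follows the controlname_yearmonth_description pattern mentioned earlier. The helper names, the lowercase and hyphen choices and the regular expression are assumptions made for illustration, so adapt them to whatever convention your team has documented.

```python
import re
from datetime import date

# Pattern for controlname_yearmonth_description.ext (an assumed house style).
NAME_PATTERN = re.compile(r"^[a-z0-9]+_\d{6}_[a-z0-9-]+\.[a-z0-9]+$")


def slug(text):
    """Lowercase the text and join its words with hyphens."""
    return re.sub(r"[^a-z0-9]+", "-", text.lower()).strip("-")


def evidence_filename(control, period, description, extension):
    """Build a file name such as accessreview_202501_hris-export.xlsx."""
    control_part = re.sub(r"[^a-z0-9]+", "", control.lower())
    return f"{control_part}_{period:%Y%m}_{slug(description)}.{extension.lower()}"


def is_well_named(filename):
    """Check whether a file name follows the assumed convention."""
    return NAME_PATTERN.match(filename) is not None


name = evidence_filename("Access Review", date(2025, 1, 7), "HRIS export", "xlsx")
print(name, is_well_named(name))
print("Report1.xlsx", is_well_named("Report1.xlsx"))
```

A check like `is_well_named` can run whenever evidence is uploaded, so ambiguous names are caught at collection time instead of during fieldwork.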

Organised evidence supports financial reporting and traceability. While SOC 2 focuses on service organisation controls, the same evidence may feed into SOC 1 (internal control over financial reporting). Maintaining traceable and labelled evidence aids both audits, reducing duplication of effort.

Assigning ownership and accountability

Evidence review requires clear ownership. Each control should have an assigned reviewer who understands the control’s purpose and has the authority to act on findings. For user access reviews, the reviewer might be a security lead or manager with no direct involvement in provisioning to ensure segregation of duties. Separation of duties prevents conflicts and reduces the risk of self‑review. The CBIZ report emphasises that reviewers should not test their own access.
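Segregation of duties can be checked mechanically once reviewers and operators are written down. The following Python sketch assumes two simple mappings, both hypothetical: one from each control to its assigned reviewer, and one from each control to the people who operate it.

```python
def self_review_conflicts(reviewers, operators):
    """Return controls whose assigned reviewer also operates the control.

    reviewers: control -> name of the assigned reviewer
    operators: control -> set of people who perform the control activity
    """
    return sorted(
        control
        for control, reviewer in reviewers.items()
        if reviewer in operators.get(control, set())
    )


# Hypothetical assignments for illustration only.
reviewers = {"access-provisioning": "dana", "patching": "lee"}
operators = {"access-provisioning": {"dana", "sam"}, "patching": {"sam"}}

print(self_review_conflicts(reviewers, operators))
```

In this sample the access provisioning control is flagged because the same person both provisions and reviews. A flag like this is a prompt to reassign the review, not proof of a control failure.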

Small teams may need creative approaches to maintain separation. For example, the CTO may own infrastructure but delegate evidence review to a lead engineer. Alternatively, an external managed service provider, such as Konfirmity, can perform reviews. Regardless of structure, assign roles in writing and ensure everyone knows their responsibilities.

Document reviewer responsibilities clearly. Include the review frequency, the scope of the review, the steps to perform and how to document findings. This reduces ambiguity and ensures continuity if the reviewer is unavailable.

Tools and automation (with caution)

Tools can collect evidence and send reminders, but they do not replace human judgment. Automation is useful for exporting logs, running scans, and tracking ticket closure. DSALTA’s playbook attributes up to a 60 percent reduction in manual effort to automation. However, tools must be configured correctly. Linford & Company warns that evidence automation tools require careful integration; auditors still need to validate data and remain independent. Over‑reliance can lead to blind spots—for example, an automated access review might not detect privileges assigned through ad‑hoc scripts.

Use automation to schedule reviews, collect evidence and generate reports, but ensure that a person reviews the outputs. When choosing tools, evaluate features such as support for multiple frameworks (SOC 2, ISO 27001, HIPAA), secure storage, role‑based access control and audit logging. Avoid vendors that promise “compliance in two weeks” without acknowledging observation periods and operational effort.

Common cadence mistakes that delay reports

Several patterns repeatedly cause delays in SOC 2 audits:

- Backdated reviews: Teams attempt to perform reviews retrospectively to satisfy auditors. Auditors will review timestamps and may deem these reviews invalid.

- Missing reviewer proof: Evidence lacks signatures or comments from the reviewer. Auditors must see who performed the review and when.

- Inconsistent timing: Reviews are completed irregularly—sometimes weekly, sometimes monthly—without rationale. Auditors expect consistency.

- Overly aggressive schedules: Teams set daily or weekly reviews for all controls, then fail to maintain them. It is better to set a realistic cadence and meet it than to overcommit and miss.

Avoid these mistakes by setting achievable frequencies, using templates and calendars, and reviewing the schedule quarterly to adjust as the environment changes.
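Two of the mistakes listed above, missing reviewer proof and backdated reviews, can be screened for before the auditor sees the evidence. The sketch below assumes each review record carries the stated review date and a system-generated timestamp of when the entry was actually recorded. The field names and the three-day tolerance are hypothetical choices, not an auditing standard.

```python
from datetime import date


def check_review_record(record, max_lag_days=3):
    """Return a list of issues found in one review record.

    record keys (assumed): reviewer (str), review_date (date),
    recorded_at (date the entry was created in the system of record).
    """
    issues = []
    if not record.get("reviewer"):
        issues.append("missing reviewer")
    # A long lag between the stated date and the system timestamp suggests
    # the review was written up retrospectively.
    lag = (record["recorded_at"] - record["review_date"]).days
    if lag > max_lag_days:
        issues.append(f"entry recorded {lag} days after stated review date")
    return issues


# Hypothetical records for illustration only.
good = {"reviewer": "dana", "review_date": date(2025, 1, 31), "recorded_at": date(2025, 1, 31)}
late = {"reviewer": "", "review_date": date(2025, 1, 31), "recorded_at": date(2025, 3, 30)}

print(check_review_record(good))
print(check_review_record(late))
```

The second record is flagged twice: it has no reviewer, and it was recorded 58 days after the date it claims. Records like this are better disclosed and remediated than presented as timely reviews.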

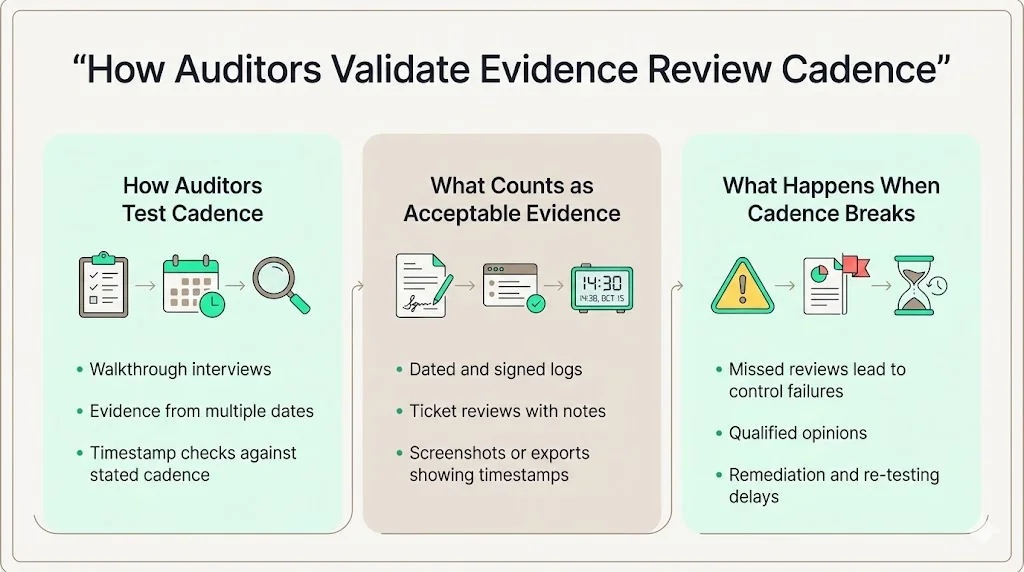

How auditors validate evidence review cadence

Auditors validate cadence through document review and interviews. During walkthroughs, they ask the reviewer to explain the process and show evidence from multiple dates. They test the timing by comparing timestamps on logs, tickets and review sign‑offs with the stated cadence. Acceptable proof includes dated and signed logs, ticket reviews with comments, and screenshots or exports with embedded timestamps. NIST suggests event‑driven assessments outside the scheduled cadence; auditors may ask if management performed additional reviews after major incidents.

If cadence reviews fail—for example, if an access review was missed—the auditor may issue a qualified opinion or record a control failure. This can impact the final SOC 2 report and, by extension, the vendor’s credibility. Buyers may request remediation and re‑testing, delaying deals. Maintaining a strong cadence reduces this risk and demonstrates discipline.

Conclusion

Evidence review cadence is not just paperwork; it is a sign of operational discipline. Vendors selling to enterprise clients must demonstrate that controls are reviewed regularly, not just collected. A clear cadence simplifies audits, reduces the chance of findings and accelerates deals. The rise in breach costs and the prevalence of attacks using advanced automation show why continuous review is essential. SOC 2 Type II audits and similar frameworks demand sustained evidence over months or years. By implementing a realistic, risk‑based cadence and documenting every review, companies can build trust with buyers and auditors. Security programs that work under incident pressure are more valuable than those that look good on paper.

FAQs

1) What is the review period for SOC 2?

For SOC 2 Type I, the review period is a single date. For SOC 2 Type II, auditors typically examine evidence over a three‑ to twelve‑month observation window. Drata advises at least three to six months to make the report meaningful. The exact period depends on risk, but most enterprise buyers prefer six months to a year. During that window, management must perform evidence reviews at the stated cadence.

2) How frequently should SOC 2 Type II audits be performed?

SOC 2 Type II audits are usually conducted annually. Each audit covers a new observation period and requires fresh evidence. Because the evidence review cadence must run throughout the year, planning should start early. DSALTA’s playbook reports that organisations with a monthly cadence completed audits 40 percent faster. Starting the cadence at the beginning of the year avoids year‑end rush.

3) What are the five criteria for SOC 2?

The Trust Services Criteria include Security, Availability, Processing Integrity, Confidentiality and Privacy. Security addresses protection against unauthorised access; Availability ensures systems are operational; Processing Integrity ensures data is processed accurately and on a timely basis; Confidentiality protects sensitive information; and Privacy governs personal information. Cadence varies across criteria: security requires daily and weekly reviews, while privacy may involve quarterly or annual checks.

4) What is a SOC 2 Type 2 review?

SOC 2 Type 2 (often written as “Type II”) is an audit that assesses the design and operating effectiveness of controls over an observation period. It differs from Type I, which assesses design at a point in time. A Type 2 review involves collecting evidence across the observation period and demonstrating that controls operated consistently. Cadence plays a central role because it ensures there are periodic reviews to support the auditor’s sampling. Without a defined cadence, a Type 2 audit is likely to result in findings or qualifications.
