In today’s healthcare sector, cyber‑attacks and regulatory scrutiny are accelerating. In 2025 alone, nearly 500 breaches affected more than 37.5 million individuals in the United States, with providers accounting for 76% of incidents and the average breach impacting 76,000 people. IBM’s 2025 Cost of a Data Breach report found healthcare remained the costliest industry for the fourteenth consecutive year, with an average breach cost of USD 7.42 million and detection taking 279 days. Against this backdrop, the HIPAA Breach Notification Rule has become a critical focal point for risk management and sales readiness. Investors, customers and regulators expect proof that security controls are operational, not just documented. As the founder of Konfirmity, I have seen how breaches stall deals and damage trust when companies cannot demonstrate compliance.

This HIPAA Breach Notification Guide synthesises legal requirements and practical experience drawn from 6,000+ audits and 25 years of collective expertise at Konfirmity. It is written for CTOs, CISOs, engineering leaders and compliance officers who need actionable guidance on protecting patient data, meeting breach‑notification timelines and building lasting security programs. The article explains key definitions, outlines step‑by‑step response procedures, details reporting obligations and fines, and shows how robust security controls across frameworks (SOC 2, ISO 27001, HIPAA and GDPR) reduce both breach risk and operational workload. Throughout, the term HIPAA Breach Notification Guide is used deliberately to aid search intent without cluttering the narrative.

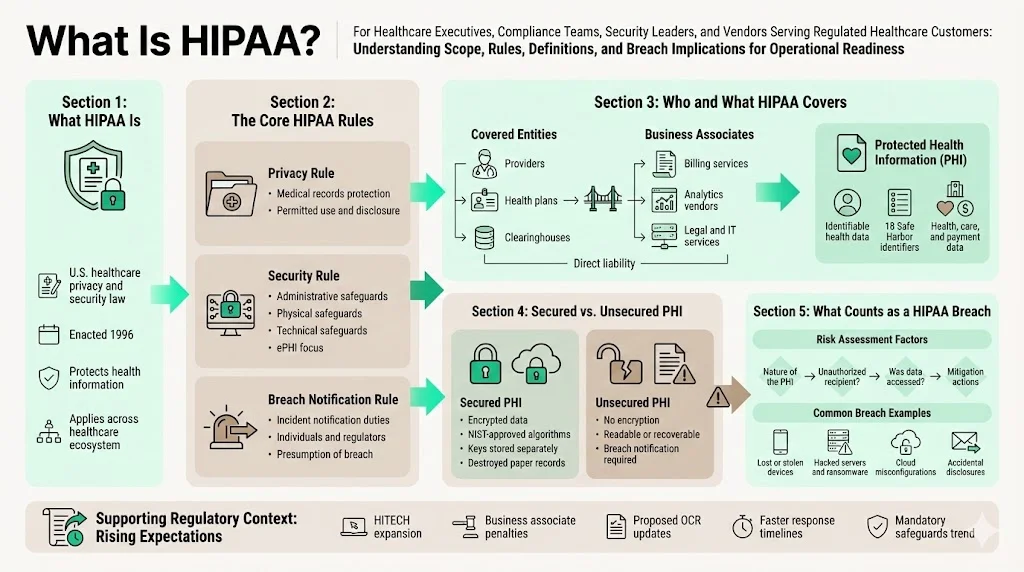

What is HIPAA?

The Health Insurance Portability and Accountability Act of 1996 is the cornerstone of U.S. healthcare privacy and security law. HIPAA comprises several rules:

- Privacy Rule (45 CFR Part 160 and Subparts A and E of Part 164) – sets national standards for protecting individuals’ medical records and other individually identifiable health information.

- Security Rule (45 CFR Part 160 and Subparts A and C of Part 164) – requires administrative, physical and technical safeguards to protect electronic protected health information (ePHI). Organisations must identify where ePHI resides, assess threats and vulnerabilities, and implement security measures accordingly.

- Breach Notification Rule (45 CFR §§ 164.400–414) – mandates that covered entities and business associates notify affected individuals, the U.S. Department of Health and Human Services (HHS) and, in some cases, the media after certain breaches of unsecured PHI. The rule creates a presumption that any impermissible use or disclosure of PHI is a breach unless the organisation demonstrates a low probability that the data has been compromised based on a risk assessment.

The 2009 HITECH Act established the breach notification requirements, extended them to business associates and introduced tiered civil penalty structures. A notice of proposed rulemaking (NPRM) issued by OCR in January 2025 (expected to be finalised in 2026) would overhaul the Security Rule by eliminating “addressable” implementation specifications, making most security safeguards mandatory, and shortening incident‑response timelines. The NPRM also proposes requiring written incident response and disaster‑recovery plans capable of restoring critical systems within 72 hours, comprehensive asset inventories, multi‑factor authentication and annual verification of business associates’ controls. These proposals mirror SOC 2 and ISO 27001 expectations for continuous monitoring and could further raise compliance stakes.

Purpose of the Breach Notification Rule

The Breach Notification Rule has two core aims:

- Protect patient rights and ensure transparency – Individuals must be notified promptly when their information is compromised so they can take protective actions such as credit monitoring or fraud alerts.

- Encourage rapid response – Covered entities and business associates are required to act quickly to contain incidents, assess risks and report breaches within defined timelines (typically 60 days from discovery). Prompt reporting not only limits harm but also demonstrates good faith to regulators and customers.

Key legal definitions

Covered entities vs. business associates – Covered entities include health care providers, health plans and clearinghouses. Business associates are persons or entities that perform functions involving access to PHI on behalf of a covered entity; examples include billing services, data‑analysis firms, claims processors and legal services. Business associates are directly liable for certain HIPAA requirements and must notify covered entities of breaches.

Protected Health Information (PHI) – PHI is individually identifiable health information that relates to an individual’s past, present or future physical or mental health, provision of health care or payment for health care, and can be linked to a specific person through an identifier. The Safe Harbor method enumerates 18 identifiers that must be removed to de-identify data. These include names, all geographic subdivisions smaller than a state, all elements of dates (except year) relating to an individual, phone numbers, email addresses, social security numbers, medical record numbers, health plan numbers, account numbers, certificate/license numbers, vehicle and device identifiers, URLs, IP addresses, biometric identifiers, full‑face photos and any other unique identifying code.

Unsecured vs. secured PHI – The Breach Notification Rule applies only to breaches of unsecured PHI. PHI is considered secured when it is rendered unusable, unreadable or indecipherable to unauthorised persons through encryption or destruction. HHS guidance treats ePHI as secured if it is encrypted through processes consistent with NIST guidance (e.g., NIST SP 800‑111 for data at rest and FIPS 140‑2 validated processes such as TLS for data in transit) and the decryption keys are stored separately from the data. Paper records must be shredded or otherwise destroyed so they cannot be reconstructed.
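As a rough illustration, the secured/unsecured distinction could be encoded in an incident‑triage tool along the following lines. The `MediaState` fields and the `is_secured` function are hypothetical names introduced here, and the logic is a simplification of the HHS guidance rather than a legal determination.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MediaState:
    """State of the PHI-bearing media at the time of the incident (hypothetical model)."""
    encrypted: bool             # encrypted consistent with NIST guidance
    key_stored_with_data: bool  # decryption key kept on the same device or location
    key_compromised: bool       # evidence that the key was exposed
    destroyed: bool             # shredded or destroyed beyond reconstruction


def is_secured(state: MediaState) -> bool:
    """Return True when the PHI counts as 'secured' under the simplified rule above."""
    if state.destroyed:
        return True
    return (
        state.encrypted
        and not state.key_stored_with_data
        and not state.key_compromised
    )


lost_laptop = MediaState(encrypted=True, key_stored_with_data=False,
                         key_compromised=False, destroyed=False)
usb_with_key = MediaState(encrypted=True, key_stored_with_data=True,
                          key_compromised=False, destroyed=False)

print(is_secured(lost_laptop))   # True
print(is_secured(usb_with_key))  # False
```

A result of `False` does not by itself mean notification is required; it only means the incident falls within the rule and the risk assessment must be carried out.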

What counts as a “data breach” under HIPAA?

A breach is “the acquisition, access, use or disclosure of PHI in a manner not permitted” by the Privacy Rule that compromises the security or privacy of the information. The rule presumes a breach when an impermissible disclosure occurs, unless a risk assessment demonstrates a low probability that the data has been compromised. The risk assessment must consider four factors:

- Nature and extent of the PHI – sensitivity of the data elements, such as whether they include full medical records or only a subset.

- Unauthorized person – the identity and authority of the unauthorised person who received or viewed the PHI.

- Whether the PHI was actually acquired or viewed – for example, if a laptop is stolen but is later recovered and forensic analysis shows it was not accessed.

- Extent to which the risk has been mitigated – such as through encryption, destruction, or obtaining signed assurances that the recipient will not further disclose the information.

Examples of breaches include:

- Lost or stolen devices – unencrypted laptops, USB drives or smartphones with patient records. According to our audits, many breaches stem from portable media lacking encryption. NIST SP 800‑111 underscores that encryption for mobile devices is essential for safeguarding data at rest.

- Hacked servers and ransomware – attackers gain access to servers hosting EHR systems or billing platforms. The BST & Co. and Deer Oaks settlements highlight that failure to conduct a risk analysis and maintain technical safeguards can lead to significant penalties.

- Accidental disclosures – sending PHI to the wrong recipient, posting medical records publicly or misconfiguring cloud storage. Even unintentional events can trigger notification obligations if the data is not encrypted.

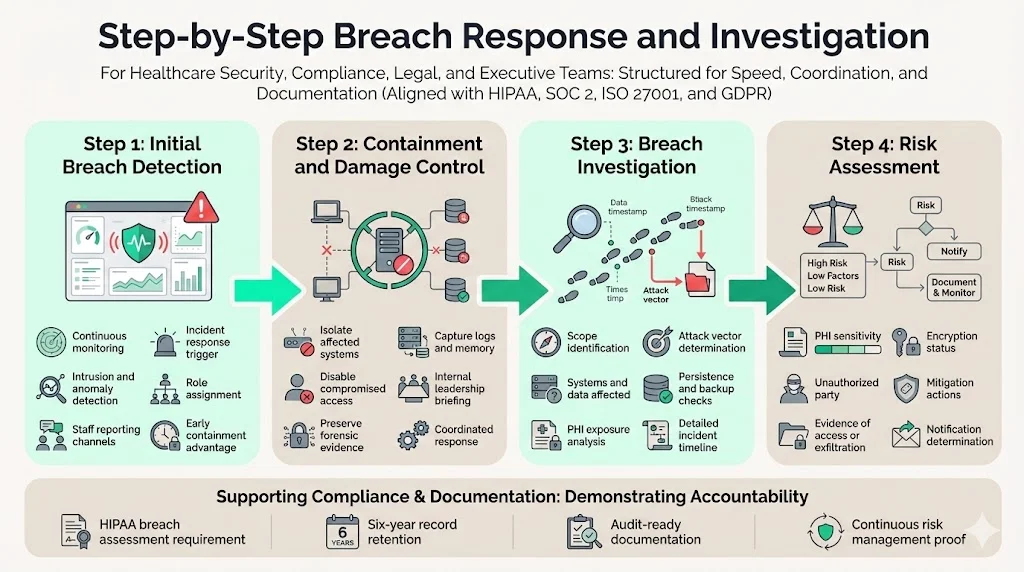

Step‑by‑Step Breach Response and Investigation

Managing a breach requires speed, precision and coordination across legal, security and communications teams. Over thousands of incidents, we’ve refined a four‑stage approach that aligns with HIPAA, SOC 2, ISO 27001 and GDPR requirements.

Step 1: Initial breach detection

Early detection reduces harm and containment time. Organisations should:

- Monitor systems continuously – Deploy intrusion detection, logging and anomaly‑detection tools across networks, cloud services and endpoints. IBM research shows that organisations leveraging automation reduce breach costs by $1.76 million and shorten the breach lifecycle by 108 days.

- Encourage reporting – Staff should know how to report suspicious activities. Human‑led security programs emphasise open communication between IT, compliance and clinical teams.

- Trigger incident response protocols – Immediately alert the incident response team. On‑call responders should verify the report, start documenting events and assign roles.

Step 2: Containment and damage control

Once a breach is suspected:

- Isolate affected systems – Disconnect compromised servers, revoke compromised credentials and disable external connections to prevent further unauthorised access.

- Preserve evidence – Capture logs, memory dumps and network traffic. This information is vital for forensic analysis and required documentation.

- Communicate internally – Brief executive leadership, legal counsel and compliance officers. Transparency builds trust and ensures coordinated decision‑making.

Step 3: Conducting a breach investigation

An effective investigation determines what happened and guides remediation. Key steps include:

- Identify the scope – Determine which systems and data stores were accessed. Document the type of PHI exposed, the number of individuals affected and whether third‑party vendors were involved.

- Determine the attack vector – Was it phishing, credential stuffing, misconfiguration, insider misuse or a technical vulnerability? NIST SP 800‑30 advises organisations to identify threats and vulnerabilities and assess existing security measures.

- Assess forensic evidence – Work with security analysts to determine if data was actually viewed or exfiltrated, whether the attacker maintained persistence, and whether backups were affected. This influences the risk assessment and notification obligations.

- Document the timeline – Record all actions taken, from detection to containment, including who discovered the breach and when. HHS requires detailed records for audits.
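One way to keep the investigation timeline consistent is to record every action as a timestamped event. The sketch below is illustrative only: `TimelineEvent`, `IncidentTimeline` and the action labels are hypothetical names, not part of any HHS template.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta, timezone


@dataclass(frozen=True)
class TimelineEvent:
    at: datetime   # when the action happened (UTC)
    actor: str     # who performed or observed it
    action: str    # short label, e.g. "detected" or "contained"


@dataclass
class IncidentTimeline:
    events: list[TimelineEvent] = field(default_factory=list)

    def record(self, at: datetime, actor: str, action: str) -> None:
        """Add an event and keep the timeline in chronological order."""
        self.events.append(TimelineEvent(at, actor, action))
        self.events.sort(key=lambda e: e.at)

    def elapsed(self, start_action: str, end_action: str) -> timedelta:
        """Time between the first occurrences of two labelled actions."""
        start = next(e.at for e in self.events if e.action == start_action)
        end = next(e.at for e in self.events if e.action == end_action)
        return end - start


utc = timezone.utc
timeline = IncidentTimeline()
timeline.record(datetime(2025, 3, 10, 16, 35, tzinfo=utc), "incident-commander", "contained")
timeline.record(datetime(2025, 3, 10, 14, 5, tzinfo=utc), "soc-analyst", "detected")

print(timeline.elapsed("detected", "contained"))  # 2:30:00
```

Recording the discovery time precisely matters because the notification clocks described later in this guide run from discovery.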

Step 4: Risk assessment

The Breach Notification Rule requires assessing whether there is a low probability that the PHI has been compromised. Consider:

- Type and sensitivity of the PHI – Full medical histories pose higher risk than limited billing codes.

- Threat actor – Sophisticated, financially motivated attackers present higher risk than misdirected emails to internal staff.

- Data acquisition evidence – If logs confirm that the unauthorised party did not open files or that data was encrypted at rest, the probability of compromise decreases.

- Mitigation efforts – Immediate password resets, data deletion, or obtaining assurances from recipients may reduce the risk.

Risk assessments should be documented and retained for six years in accordance with HIPAA recordkeeping requirements. They also serve as evidence for SOC 2 and ISO 27001 audits, which require organisations to demonstrate continuous risk management.
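A documented assessment can be captured as a structured record. The sketch below is a minimal example under stated assumptions: HIPAA does not prescribe a scoring scale, so the `Rating` enum and the rule that every factor must be rated low are a conservative convention assumed here, and the six‑year retention date is simplified to run from the assessment date.

```python
from dataclasses import dataclass
from datetime import date
from enum import Enum


class Rating(Enum):
    LOW = "low"
    MEDIUM = "medium"
    HIGH = "high"


@dataclass(frozen=True)
class BreachRiskAssessment:
    assessed_on: date
    phi_nature_and_extent: Rating
    unauthorised_person: Rating
    acquired_or_viewed: Rating
    residual_after_mitigation: Rating
    rationale: str

    def low_probability_of_compromise(self) -> bool:
        # Assumed convention: all four factors must be LOW.
        factors = (
            self.phi_nature_and_extent,
            self.unauthorised_person,
            self.acquired_or_viewed,
            self.residual_after_mitigation,
        )
        return all(f is Rating.LOW for f in factors)

    def notification_required(self) -> bool:
        # The rule presumes a breach unless low probability is demonstrated.
        return not self.low_probability_of_compromise()

    def retain_until(self) -> date:
        # Six-year retention, simplified to start at the assessment date.
        target_year = self.assessed_on.year + 6
        try:
            return self.assessed_on.replace(year=target_year)
        except ValueError:  # 29 February with a non-leap target year
            return self.assessed_on.replace(year=target_year, day=28)


assessment = BreachRiskAssessment(
    assessed_on=date(2025, 3, 12),
    phi_nature_and_extent=Rating.LOW,
    unauthorised_person=Rating.LOW,
    acquired_or_viewed=Rating.MEDIUM,
    residual_after_mitigation=Rating.LOW,
    rationale="Misdirected email; recipient opened the attachment, then confirmed deletion.",
)

print(assessment.notification_required())  # True
print(assessment.retain_until())           # 2031-03-12
```

The `rationale` field carries the written justification, which is the part auditors and OCR are most likely to read.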

Timely Reporting and Notification Requirements

Notification to affected individuals

Covered entities must notify individuals whose unsecured PHI has been breached without unreasonable delay and no later than 60 days from discovery. The notification must be written (by first‑class mail or electronic means if the individual has agreed to electronic notices) and include:

- A brief description of what happened (including date of breach and date of discovery).

- The types of PHI involved (e.g., full names, social security numbers, medical records, addresses). HHS emphasises that the notice should be specific to help individuals understand their risk.

- Steps individuals can take to protect themselves (e.g., credit monitoring, password changes).

- What the organisation is doing to investigate, mitigate and prevent future incidents.

If the contact information for 10 or more individuals is out of date or insufficient, organisations must provide substitute notice by posting on their website or through major print or broadcast media. In urgent cases involving possible imminent misuse of PHI, notice by telephone may be used in addition to the written notice.
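Before a letter goes out, a simple completeness check can catch missing elements. The sketch below covers only the content elements listed above; the field names and the `missing_elements` helper are hypothetical, and the check says nothing about whether the wording itself is adequate.

```python
# Content elements from the list above, expressed as hypothetical field names.
REQUIRED_ELEMENTS = (
    "what_happened",
    "breach_date",
    "discovery_date",
    "phi_types",
    "protective_steps",
    "organisation_actions",
)


def missing_elements(notice: dict) -> list[str]:
    """Return the required fields that are absent or empty in a draft notice."""
    return [name for name in REQUIRED_ELEMENTS if not notice.get(name)]


draft = {
    "what_happened": "An unencrypted laptop was stolen from a clinic office.",
    "breach_date": "2025-03-08",
    "discovery_date": "2025-03-10",
    "phi_types": ["full names", "medical record numbers"],
    "protective_steps": "",
    "organisation_actions": "Devices are being encrypted and staff retrained.",
}

print(missing_elements(draft))  # ['protective_steps']
```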

Reporting to HHS

Organisations must also notify the Secretary of Health and Human Services. The timeline depends on the size of the breach:

- Breaches affecting 500 or more individuals – must be reported to HHS without unreasonable delay and no later than 60 days from discovery.

- Breaches affecting fewer than 500 individuals – may be logged and reported within 60 days after the end of the calendar year in which the breach was discovered. However, best practice is to report sooner to demonstrate transparency.

Reports are filed through the OCR portal and require details on the incident, affected individuals, steps taken and mitigation measures. In our experience, HHS often requests additional documentation, including risk assessments, policies and evidence of encryption or destruction.

Media notification

If a breach affects more than 500 residents of a state or jurisdiction, covered entities must notify prominent media outlets serving the area within 60 days of discovery. The media notice must include the same information provided to affected individuals and may be delivered via press release. This requirement ensures wide dissemination so individuals who may not receive direct notices are still informed.
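The individual, HHS and media timelines described above reduce to simple date arithmetic. The sketch below is a minimal illustration: the function name and return keys are assumptions made here, and the dates it returns are outer limits, since notice must still be given without unreasonable delay.

```python
from datetime import date, timedelta

NOTICE_WINDOW = timedelta(days=60)


def notification_deadlines(discovered: date, affected_by_state: dict[str, int]) -> dict:
    """Compute the latest permissible notification dates for a breach.

    affected_by_state maps a state or jurisdiction to the number of its
    residents affected (hypothetical input shape).
    """
    total = sum(affected_by_state.values())
    outer_limit = discovered + NOTICE_WINDOW

    if total >= 500:
        hhs_deadline = outer_limit
    else:
        # Smaller breaches: within 60 days after the end of the calendar year.
        hhs_deadline = date(discovered.year, 12, 31) + NOTICE_WINDOW

    # Media notice applies where MORE than 500 residents of one state are affected.
    media_states = sorted(s for s, n in affected_by_state.items() if n > 500)

    return {
        "individuals": outer_limit,
        "hhs": hhs_deadline,
        "media": outer_limit if media_states else None,
        "media_states": media_states,
    }


large = notification_deadlines(date(2025, 3, 10), {"NY": 620, "NJ": 140})
small = notification_deadlines(date(2025, 3, 10), {"OH": 120})

print(large["individuals"], large["hhs"], large["media_states"])  # 2025-05-09 2025-05-09 ['NY']
print(small["individuals"], small["hhs"], small["media"])         # 2025-05-09 2026-03-01 None
```

Note the two different thresholds: HHS reporting uses 500 or more individuals in total, while media notice uses more than 500 residents of a single state or jurisdiction.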

Notifications from business associates

Business associates must notify their covered entity clients of breaches of unsecured PHI without unreasonable delay and no later than 60 days after discovery. Contracts should specify shorter internal timelines (such as 24 hours) to allow covered entities to meet their obligations. The NPRM would make explicit security obligations in business associate agreements, including multi‑factor authentication and incident‑notification requirements.

Documentation and Recordkeeping

Detailed documentation is essential for compliance, audits and legal defensibility. HIPAA requires covered entities and business associates to maintain records of policies, procedures, risk assessments and breach investigations for at least six years. In practice, documentation should include:

- Investigation notes and forensic reports – who discovered the breach, when and how; technical findings; evidence that determines whether data was accessed.

- Risk assessments and mitigation plans – documented evaluation of the four factors and justification for determining whether notification is required.

- Notification letters – copies of all individual, media and HHS notices sent, with dates and distribution methods.

- Policy updates and training records – revised policies addressing the breach and proof of employee training on new procedures.

Good recordkeeping not only satisfies HIPAA but also overlaps with SOC 2 and ISO 27001 evidence requirements. ISO 27001:2022 Annex A controls call for document control, incident management and audit logging; SOC 2 requires evidence of risk assessment, change management and incident response over an observation period. Using a single document repository and evidence templates streamlines compliance across frameworks and reduces audit fatigue.

Penalties and Fines for Non‑Compliance

Failure to provide timely, complete notifications can result in hefty penalties. Recent enforcement data illustrate the stakes:

- BST & Co. CPAs (business associate) agreed to a $175,000 settlement and corrective action plan after a ransomware breach exposed over 100,000 individuals’ data and the company failed to conduct a risk analysis. The corrective actions required a comprehensive risk analysis, risk management plan, updated policies and enhanced employee training.

- Deer Oaks (healthcare provider) paid $225,000 and agreed to a two‑year corrective action plan for failing to conduct a risk analysis before a breach.

- Comstar (business associate) paid $75,000 after a ransomware attack affected 585,621 individuals; OCR again cited failure to conduct an adequate risk assessment.

A 2025 client alert by Shook, Hardy & Bacon analysed OCR enforcement actions from 2024–2025. It reported that organisations paid over $9.4 million in settlements and penalties, with an average settlement of $437,545 and average civil monetary penalty of $535,466. Inadequate risk analysis was the most common violation, appearing in 13 of 20 cases. Investigations took a median of 57 months from complaint or breach to resolution, demonstrating that compliance failures can drag on for years.

OCR’s penalty structure is tiered based on culpability, ranging from $137 to $2.134 million per violation for 2025. Penalties are assessed per violation, with annual caps for repeated violations of the same provision, so prolonged non‑compliance can quickly multiply liability. Settlements may cost less than civil monetary penalties but still require corrective action plans monitored by OCR. Beyond financial penalties, breach‑notification failures damage trust, stall sales and trigger contractual penalties under business associate agreements.

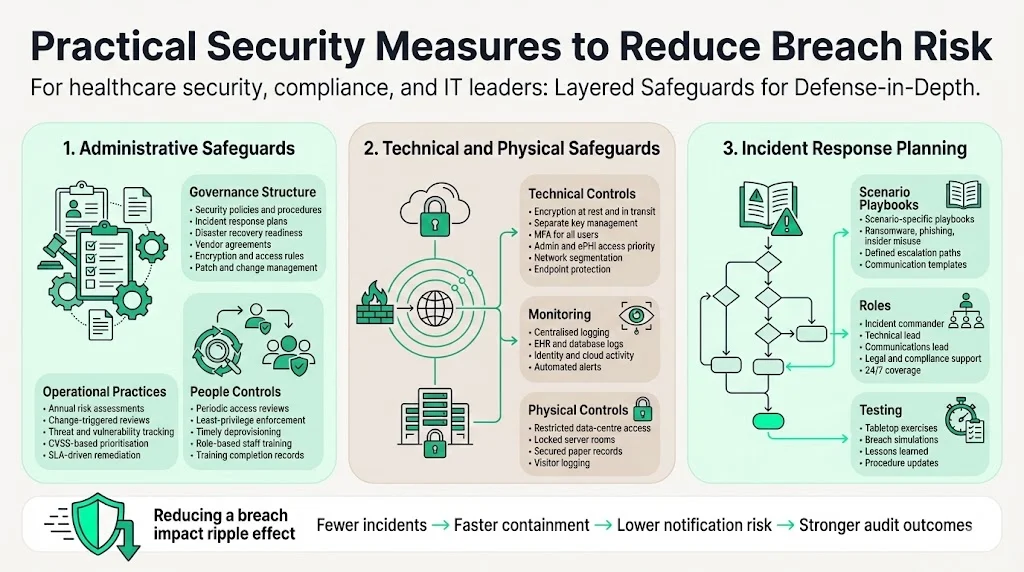

Practical Security Measures to Reduce Breach Risk

Avoiding breaches and subsequent notification obligations hinges on strong administrative, technical and physical safeguards. Based on NIST guidance and our audit experience, the following measures are critical:

1) Administrative safeguards

- Policies and procedures – Maintain up‑to‑date security policies, incident response plans, disaster‑recovery procedures, data retention schedules and vendor agreements. Policies should cover encryption, password complexity, remote work, mobile device management and patch management.

- Regular risk assessments – Perform formal security risk analyses at least annually and whenever systems or processes change. Document threats, vulnerabilities, likelihood and impact, and track remediation activities. Use CVSS scores to prioritise vulnerabilities and tie remediation to service‑level agreements (SLAs).

- Access reviews – Conduct periodic reviews of user permissions. SOC 2 and ISO 27001 require least‑privilege controls and timely deprovisioning. In our audits, access drift is a leading cause of non‑compliance.

- Employee training – Provide role‑based training on PHI handling, phishing awareness, incident reporting and new technologies like multi‑factor authentication. Training logs serve as evidence of compliance.
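The CVSS‑based prioritisation mentioned under regular risk assessments can be sketched as follows. The severity bands follow the CVSS v3.x qualitative rating scale; the SLA day counts, the `Finding` fields and the choice to rank ePHI‑bearing systems first are assumptions made for illustration, not requirements from HIPAA or NIST.

```python
from dataclasses import dataclass


def severity(score: float) -> str:
    """Map a CVSS v3.x base score to its qualitative severity rating."""
    if not 0.0 <= score <= 10.0:
        raise ValueError("CVSS scores range from 0.0 to 10.0")
    if score == 0.0:
        return "none"
    if score < 4.0:
        return "low"
    if score < 7.0:
        return "medium"
    if score < 9.0:
        return "high"
    return "critical"


# Hypothetical remediation SLAs in days; set these in your own policy.
SLA_DAYS = {"critical": 7, "high": 30, "medium": 90, "low": 180}


@dataclass(frozen=True)
class Finding:
    asset: str
    cvss: float
    touches_ephi: bool


def prioritise(findings: list[Finding]) -> list[Finding]:
    """Order findings: ePHI-bearing assets first, then highest CVSS score."""
    return sorted(findings, key=lambda f: (not f.touches_ephi, -f.cvss))


findings = [
    Finding("ehr-db", 7.5, True),
    Finding("marketing-site", 9.8, False),
    Finding("billing-api", 9.1, True),
]

for f in prioritise(findings):
    level = severity(f.cvss)
    print(f.asset, level, SLA_DAYS.get(level))
```

Tying each severity level to a fixed SLA gives auditors a measurable commitment to test remediation records against.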

2) Technical and physical safeguards

- Encryption and key management – Encrypt ePHI at rest and in transit using NIST‑approved algorithms and manage keys separately. Ensure mobile devices and backups are encrypted.

- Multi‑factor authentication (MFA) – Require MFA for all accounts, particularly administrators and those accessing ePHI. The NPRM proposes mandatory MFA and removal of “addressable” status.

- Network segmentation and hardening – Segment networks to contain attacks, disable unused ports and apply standardised configurations. Deploy anti‑malware and endpoint detection and response tools.

- Logging and monitoring – Collect and retain logs from EHR systems, databases, firewalls, identity platforms and cloud services. Implement automated alerts for anomalous activity.

- Physical protections – Restrict access to data centres and server rooms, use locked cabinets for paper records, and implement visitor logs.
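As an illustration of the automated alerts mentioned under logging and monitoring, the sketch below flags bursts of failed logins. The event tuple format, the threshold of five failures and the ten‑minute window are all assumptions; a production deployment would normally rely on a SIEM or identity platform rather than custom code.

```python
from collections import defaultdict, deque
from datetime import datetime, timedelta


def failed_login_alerts(events, threshold=5, window=timedelta(minutes=10)):
    """Return (user, timestamp) alerts for bursts of failed logins.

    events: iterable of (timestamp, user, success) tuples, sorted by timestamp.
    """
    recent = defaultdict(deque)  # user -> timestamps of recent failures
    alerts = []
    for ts, user, success in events:
        if success:
            continue
        attempts = recent[user]
        attempts.append(ts)
        # Drop failures that have fallen out of the sliding window.
        while ts - attempts[0] > window:
            attempts.popleft()
        if len(attempts) >= threshold:
            alerts.append((user, ts))
            attempts.clear()  # avoid re-alerting on the same burst
    return alerts


base = datetime(2025, 3, 10, 9, 0)
events = [(base + timedelta(minutes=i), "jdoe", False) for i in range(5)]
events.append((base + timedelta(minutes=5), "asmith", True))

alerts = failed_login_alerts(events)
print(alerts)
```

Here the fifth failure for `jdoe` within the window produces a single alert, while the successful login for `asmith` is ignored.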

3) Incident response planning

- Incident response playbooks – Develop detailed procedures for different attack scenarios (ransomware, phishing, insider misuse). Include communication templates and escalation paths.

- Roles and responsibilities – Assign incident commanders, technical leads, communications leads and legal advisers. Ensure off‑hours coverage and 24/7 contact information.

- Regular drills – Conduct tabletop exercises and simulate breaches to test readiness. After each drill, document lessons learned and update procedures.

Integrating Compliance into Daily Operations

Effective security programs treat compliance as an ongoing operational activity, not a one‑time project. To build the practices in this HIPAA Breach Notification Guide into daily operations:

- Perform continuous risk assessments – Add risk analysis into change‑management processes. New applications, vendors, integrations or process changes should trigger updates to the risk register.

- Align cross‑framework controls – Map HIPAA safeguards to SOC 2, ISO 27001 and GDPR controls. For example, HIPAA’s requirement to maintain audit logs aligns with SOC 2 security criteria and ISO 27001:2022 Annex A control 8.15 on logging. Encryption and access control requirements map across frameworks, enabling evidence reuse and reducing duplication.

- Automate evidence collection – Use platforms or managed services to automatically capture logs, access reviews, configuration baselines and vulnerability scan results. Konfirmity’s managed service reduces internal workload from ~550–600 hours per year (self‑managed) to ~75 hours per year by automating evidence collection and operating controls.

- Track vendor risk – Maintain an inventory of all business associates and subcontractors, collect and review their security attestations (SOC 2, ISO 27001, HITRUST, etc.) and enforce incident‑notification clauses. The NPRM would require annual verification of vendor safeguards.

- Regular training and awareness – Provide ongoing education to employees and contractors. Refresh content to address new threats (e.g., social engineering, generative AI phishing) and regulatory changes.

- Update breach response plans – Review and refine incident response procedures after each breach or drill. Incorporate lessons from industry events such as the 2024 Change Healthcare attack that cost over $2 billion.
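The cross‑framework mapping described above can be kept as a small lookup table so that one piece of evidence is tagged once and reused. The sketch below is illustrative: the control references are a starting point that should be verified against the current text of each framework, and `CONTROL_MAP` and `reuse_plan` are hypothetical names.

```python
# Illustrative mapping of control themes to framework references.
# Verify each reference against the current framework text before relying on it.
CONTROL_MAP = {
    "audit_logging": {
        "HIPAA": "45 CFR 164.312(b)",
        "SOC 2": "CC7.2",
        "ISO 27001:2022": "A.8.15",
        "GDPR": "Art. 32",
    },
    "encryption": {
        "HIPAA": "45 CFR 164.312(a)(2)(iv)",
        "SOC 2": "CC6.1",
        "ISO 27001:2022": "A.8.24",
        "GDPR": "Art. 32",
    },
    "incident_response": {
        "HIPAA": "45 CFR 164.308(a)(6)",
        "SOC 2": "CC7.4",
        "ISO 27001:2022": "A.5.26",
        "GDPR": "Art. 33",
    },
}


def reuse_plan(evidence: dict[str, str]) -> dict[str, list[str]]:
    """Map each evidence artefact to the framework references it can support.

    evidence maps an artefact name to a control theme key in CONTROL_MAP.
    """
    plan = {}
    for artefact, theme in evidence.items():
        references = CONTROL_MAP.get(theme, {})
        plan[artefact] = [f"{fw} {ref}" for fw, ref in references.items()]
    return plan


plan = reuse_plan({
    "siem-export-2025-q1.csv": "audit_logging",
    "tabletop-exercise-report.pdf": "incident_response",
})

for artefact, refs in plan.items():
    print(artefact, "->", ", ".join(refs))
```

Keeping the mapping in one place means a change to a framework reference is made once rather than in every evidence template.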

Conclusion

As cyber‑threats intensify and regulators raise the bar, healthcare companies must prioritise real security over paper promises. The HIPAA Breach Notification Guide is more than a compliance checklist; it is a roadmap for protecting patient trust and accelerating enterprise sales. By understanding the legal definitions of PHI, performing thorough risk assessments, implementing layered safeguards and documenting every step, organisations can reduce breach risk and respond effectively when incidents occur.

Across 6,000+ audits, we have seen that operational readiness—not just documentation—makes the difference. Teams that integrate HIPAA, SOC 2 and ISO 27001 controls, automate evidence collection and engage human‑led security experts cut detection and response times dramatically, decrease the cost of breaches and increase audit success rates. Security that looks good on paper but fails under stress is a liability. Build the program once, operate it daily, and compliance will follow.

FAQs

1. What triggers a HIPAA breach notification?

A breach notification is triggered when unsecured PHI is acquired, accessed, used or disclosed in a manner not permitted by the Privacy Rule and the risk assessment does not demonstrate a low probability that the data was compromised. Lost devices, hacking incidents and misdirected communications are common triggers.

2. How do you decide if PHI is “unsecured”?

PHI is unsecured when it has not been rendered unusable, unreadable or indecipherable to unauthorised persons. Encryption using NIST‑approved algorithms or destruction of media qualifies as securing the data. If PHI on a lost laptop is encrypted and the key is stored separately, the incident may not require notification.

3. Who must be notified in a breach involving fewer than 500 patients?

Covered entities must notify the affected individuals without unreasonable delay and no later than 60 days after discovery. They must also log the breach and report it to HHS within 60 days after the end of the calendar year in which it was discovered. Media notification is not required, because it applies only when a breach affects more than 500 residents of a state or jurisdiction.

4. What are common mistakes in breach reporting?

Frequent errors include delays in recognising and investigating incidents, incomplete documentation, failing to conduct a risk assessment, assuming that encryption always exempts an incident from notification, and neglecting business associate notification obligations. Many enforcement actions cite inadequate risk analysis.

5. Can encryption exempt an incident from notification requirements?

Encryption can exempt an incident only if the data was encrypted at the time of loss or theft and the decryption key was not compromised. HHS considers encrypted data “secured” when it is rendered unusable, unreadable or indecipherable through appropriate algorithms and key management. If encryption keys are exposed or if data was decrypted in memory, notification may still be required.

6. How do state breach laws interact with HIPAA requirements?

State breach laws often impose more stringent timelines or additional reporting obligations. For example, some states require reporting to state attorneys general within 30 days or mandate credit‑monitoring services. HIPAA provides the floor, not the ceiling; organisations must comply with both federal and state laws. Consult legal counsel to reconcile overlapping requirements.

7. What happens after a breach notification is submitted to HHS?

OCR reviews the report, may request additional documentation and can launch an investigation. Investigations typically examine risk analyses, technical safeguards, policies and training records. They often last years; the median investigation duration in recent OCR cases was 57 months. At the end, OCR may close the case with no action, enter into a resolution agreement requiring corrective actions or impose civil monetary penalties.
